Senior AWS Data Engineer

Job description

- Design, develop, and optimise scalable, testable data pipelines using Python and Apache Spark.

- Implement batch workflows and ETL processes adhering to modern engineering standards.

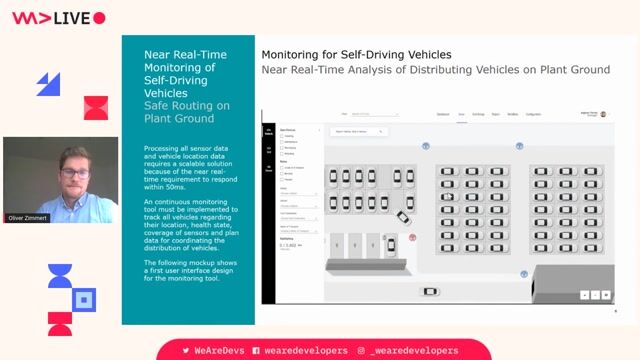

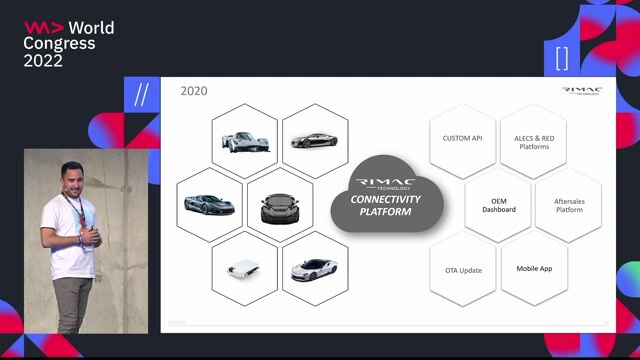

Develop Cloud-Based Workflows

- Orchestrate data workflows using AWS services such as Glue, EMR Serverless, Lambda, and S3.

- Contribute to the evolution of lakehouse architecture leveraging Apache Iceberg.

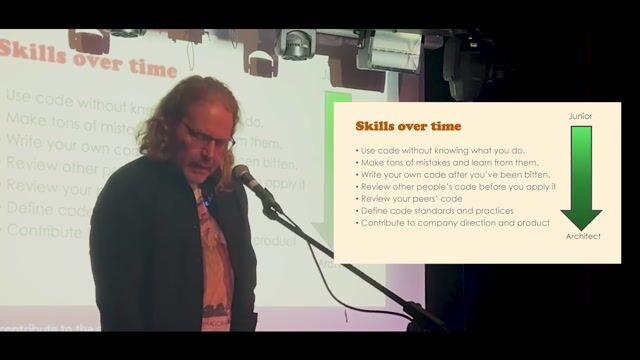

Apply Software Engineering Best Practices

- Use version control, CI/CD pipelines, automated testing, and modular code principles.

- Participate in pair programming, code reviews, and architectural design sessions.

Data Quality & Observability

- Build monitoring and observability into data flows.

- Implement basic data quality checks and contribute to continuous improvements.

Stakeholder Collaboration

- Work closely with business teams to translate requirements into data-driven solutions.

- Develop an understanding of financial indices and share domain insights with the team.

Requirements

Do you have experience in Spark?

We are developing a next-generation data platform and are looking for an experienced Senior Data Engineer to help shape its architecture, reliability, and scalability. The ideal candidate will have more than 10 years of hands-on engineering experience and a strong background in building modern data pipelines, working with cloud-native technologies, and applying robust software engineering practices.

- Strong experience writing clean, maintainable Python code, ideally using type hints, linters, and test frameworks such as pytest.

- Solid understanding of data engineering fundamentals including batch processing, schema evolution, and ETL pipeline development.

- Experience with, or strong interest in learning, Apache Spark for large-scale data processing.

- Familiarity with AWS data ecosystem tools such as S3, Glue, Lambda, and EMR.

Ways of Working

- Comfortable working in Agile environments and contributing to collaborative team processes.

- Ability to engage with business stakeholders and understand the broader context behind technical requirements.

Nice-to-Have Skills

- Experience with Apache Iceberg or similar table formats (e.g., Delta Lake, Hudi).

- Familiarity with CI/CD platforms such as GitLab CI, Jenkins, or GitHub Actions.

- Exposure to data quality frameworks such as Great Expectations or Deequ.

- Interest or background in financial markets, index data, or investment analytics.

- Please note: 10+ years of hands-on engineering experience is mandatory.