Data Engineer

Role details

Job description

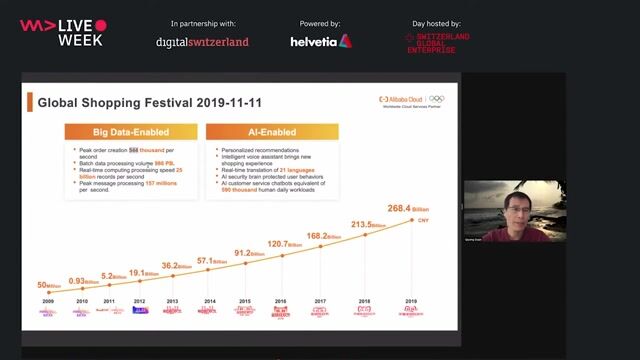

The Data team is responsible for providing business solutions that extract value from large volumes of data. Its remit covers a broad range of activities, such as collecting market data and building related analysis tools, processing real-time data streams, data governance and data science. This role will focus on building the foundational data platform that will enable all other Data services.

- Drive the implementation of a modern data platform. Play a key role, in collaboration with the broader Data team, in building a modern data platform based on data lakehouse principles but adapted to the company's requirements.

- Design and build data storage solutions to address different use cases. Be technology agnostic and favour open standards (e.g., Parquet, Apache Iceberg). Find the right balance between cost and performance of the proposed solutions.

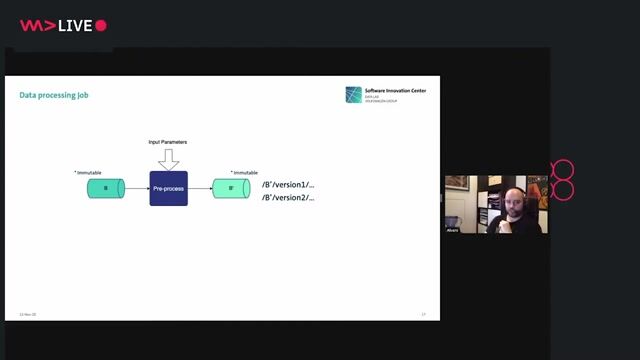

- Create scalable ingestion and transformation workflows using the defined standard technologies, covering aspects such as observability and logging.

- Capture and Manage Data Lineage: Implement lineage tracking for ingestion and transformation processes using the selected standard tools.

- Ensure Data Quality and Consistency: Apply validation, schema enforcement, and error handling across all built systems.

- Collaborate on Data Modeling: Work with analytics and business teams to design schemas that support reporting and advanced analytics.

- Adhere to Governance and Security Standards: Apply best practices for data governance, access control, and compliance.

- Stay Current with Emerging Technologies: Continuously evaluate and propose new tools and frameworks to improve data engineering workflows.

- Participate in agile processes and ceremonies as part of ongoing delivery.

- Mentor other team members and review their code.

- Contribute to the architectural direction of the platform.

- Collaborate with the data support team to ensure the data platform is properly monitored and any incidents are resolved promptly.

Requirements

- Data Lakehouse Standards: Hands-on experience with a data lakehouse implementation (either vendor-based or open source) built on open standards such as Parquet and Apache Iceberg. Experience in building data models, designing partition strategies and optimizing performance (e.g. data compaction).

- Data Transformation & Lineage: Experience in performing data transformation and capturing data lineage using tools like dbt or Apache Spark.

- Distributed Data Processing: Solid understanding of Spark or similar frameworks for large-scale data operations.

- SQL Expertise: Strong knowledge of SQL for querying and data modeling.

- Version Control: Familiarity with Git and collaborative development workflows.

- Cloud & Storage Concepts: Understanding of object storage (e.g., Azure ADLSv2) and cloud-based data architectures.

Desirable Skills and Experience

- Query Engines: Experience with Trino, Dremio or similar systems for federated querying.

- Vendor Platforms: Exposure to Databricks or Microsoft Fabric for data engineering workflows.

- Analytical Databases: Experience with analytical databases such as ClickHouse.

- Containerization & Orchestration: Ability to set up and run data tools on Kubernetes.

- Workflow Orchestration: Familiarity with Airflow, Dagster, or similar tools.

- Streaming Data: Knowledge of Apache Kafka or other streaming platforms.

- Observability & Monitoring: Experience with monitoring data pipelines and performance tuning.

- Security & Governance: Understanding of data governance, access control, and compliance best practices.

- A hands-on approach and a flexible, positive attitude

- Ability to understand complex problems quickly

- Passion for building quality systems

- Strong communication and interpersonal skills

- Ability to fully participate in a multi-faceted team environment

Benefits & conditions

8.30am to 5.30pm, Monday to Friday

Hybrid working arrangement