Senior Snowflake Data Engineer

Job description

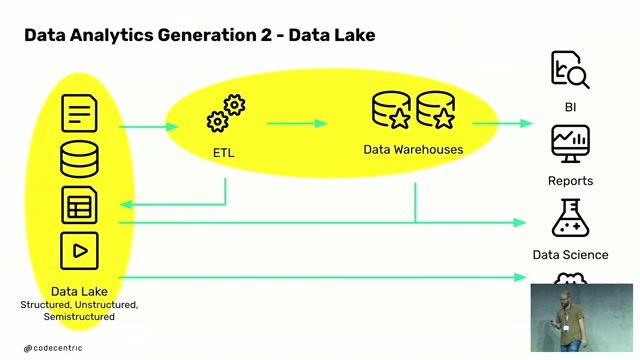

Design and develop data pipelines using Snowflake and Snowpipe for real-time and batch ingestion.

Implement CI/CD pipelines in Azure DevOps for seamless deployment of data solutions.

Automate DBT jobs to streamline transformations and ensure reliable data workflows.

Apply data modeling techniques including OLTP, OLAP, and Data Vault 2.0 methodologies to design scalable architectures.

Document data models, processes, and workflows clearly for future reference and knowledge sharing.

Build data tests, unit tests, and mock data frameworks to validate data solutions and maintain their reliability.

Develop Streamlit applications integrated with Snowflake to deliver interactive dashboards and self-service analytics.

Integrate SAP data sources into Snowflake pipelines for enterprise reporting and analytics.

Leverage SQL expertise for complex queries, transformations, and performance optimization.

Integrate cloud services across AWS, Azure, and GCP to support multi-cloud data strategies.

Develop Python scripts for ETL/ELT processes, automation, and data quality checks.

Implement infrastructure-as-code solutions using Terraform for scalable and automated cloud deployments.

Manage RBAC and enforce data governance policies to ensure compliance and secure data access.

Collaborate with cross-functional teams, including business analysts and business stakeholders, to deliver reliable data solutions.

Requirements

Do you have experience with XML?

We are seeking a highly skilled Snowflake Data Engineer to design, build, and optimize scalable data pipelines and cloud-based solutions across AWS, Azure, and GCP. The ideal candidate will have strong expertise in Snowflake, ETL tools such as DBT, Python, visualization tools such as Tableau, and modern CI/CD practices, along with a deep understanding of data governance, security, and role-based access control (RBAC). Knowledge of data modeling methodologies (OLTP, OLAP, Data Vault 2.0), data quality frameworks, Streamlit application development, SAP integration, and infrastructure-as-code with Terraform is essential. Experience working with file formats such as JSON, Parquet, CSV, and XML is highly valued.

Must have

Strong proficiency in Snowflake (Snowpipe, RBAC, performance tuning).

Hands-on experience with Python, SQL, Jinja, and JavaScript for data engineering tasks.

CI/CD expertise using Azure DevOps (build, release, version control).

Experience automating DBT jobs for data transformations.

Experience building Streamlit applications with Snowflake integration.

Cloud services knowledge across AWS (S3, Lambda, Glue), Azure (Data Factory, Synapse), and GCP (BigQuery, Pub/Sub).

Nice to have

Cloud certifications are a plus.

About the company

Luxoft, a DXC Technology Company (NYSE: DXC), is a digital strategy and software engineering firm providing bespoke technology solutions that drive business change for customers the world over. Luxoft uses technology to enable business transformation, enhance customer experiences, and boost operational efficiency through its strategy, consulting, and engineering services. Luxoft combines a unique blend of engineering excellence and deep industry expertise, specializing in automotive, financial services, travel and hospitality, healthcare, life sciences, media and telecommunications.