GenAI Engineering Lead - Apple Services Engineering

Job description

As a core member of our GenAI team, you will lead transformative progress in the application of large-scale foundation models, with a strong focus on bringing to life new and innovative customer experiences that delight millions of customers across the globe. Your mission is to push the boundaries of planning, reasoning, and agentic intelligence. You will design and implement large-scale AI/ML solutions that integrate the latest state-of-the-art research into our global products. While collaborating with partner teams across Apple, you'll contribute by sharing your experience, delivering architectural proposals that account for international market needs, and maintaining an ethos of continual learning from your counterparts. This position is at the intersection of Generative AI, Machine Learning, and Software Engineering. The ideal candidate will have expertise in LLMs and deep learning models, machine learning lifecycle management, data generation methods, and model training and validation, coupled with strong fundamentals in, and a passion for, software engineering and system architecture.

Requirements

- Comprehensive experience leading ML/AI initiatives and teams in a fast-paced environment, particularly in generative AI, traditional ML, and knowledge graphs

- Demonstrated ability to work cross-functionally and influence product development through a combination of technical leadership and user-centered thinking

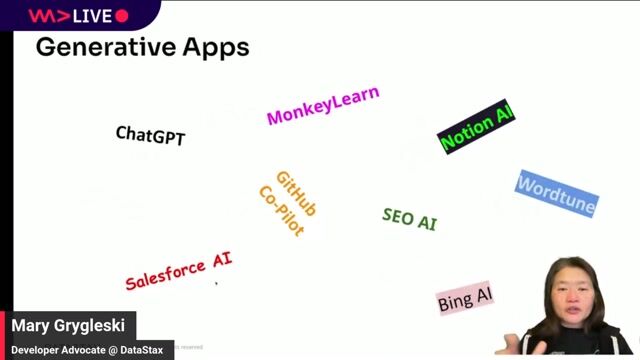

- Hands-on experience designing and building GenAI platforms that allow users to experience AI applications supporting features like agent orchestration, multi-step reasoning, prompt engineering, RAG integration, and model selection

- Strong programming skills in Python, Java, or similar languages, with an emphasis on AI/ML systems development and platform engineering. Experience with TensorFlow, PyTorch, or Keras

- B.S., M.S., or Ph.D. degree in Computer Science/Engineering, or equivalent work experience

- Experience working in multinational or distributed teams, especially across Europe and the U.S.

- In-depth understanding of large language models (LLMs) and their application in AI-driven solutions, including inference, embeddings, and knowledge base integration (RAG) for improved data retrieval and contextualization

- Deep knowledge of LLM inference optimization techniques, including prompt tuning, caching, quantization, and latency reduction across different model families

- Hands-on experience with building or fine-tuning AI agents and deploying models in production environments

- Familiarity with research papers, implementing state-of-the-art methods, and adapting them to practical applications

- Published research in the field of Machine Learning, AI, or Computer Vision