LLM / RAG Engineer (Contractor, 6-12 months)

Job description

This is not a routine individual-contributor (IC) position; it is a hands-on role at the intersection of research, technology, and product impact. You will work with our technical advisors, including the CEO of explaino, to design and implement the core AI systems behind the EQx and VCr chatbots.

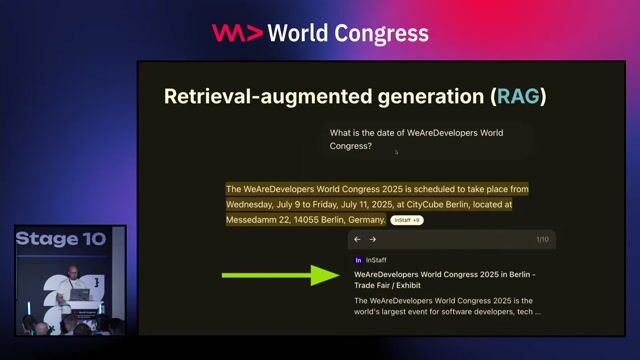

- Design and implement the end-to-end AI pipeline for retrieval-augmented generation (RAG) and structured query answering.

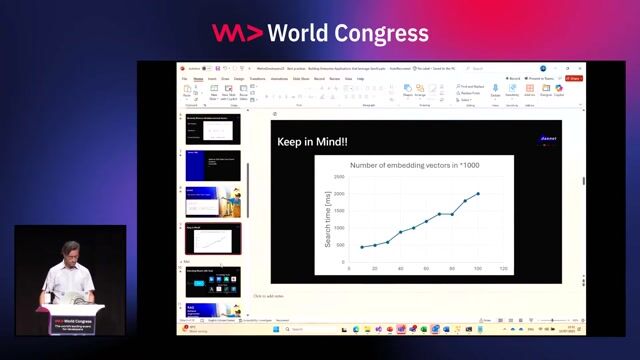

- Apply state-of-the-art NLP and LLM methods, including embedding generation, retrieval optimization, and advanced prompting strategies.

- Integrate Azure OpenAI models with document and metric databases (Weaviate, PostgreSQL/pgvector) through FastAPI endpoints.

- Evaluate and improve model quality, tracking hallucinations and citation accuracy with frameworks such as PromptFlow, RAGAS, or DeepEval.

- Collaborate with data and frontend developers to surface firm-level insights through explainable, transparent outputs.

- Contribute to architecture discussions around scaling, caching, and fine-tuning evaluation metrics.

Requirements

- 3+ years of experience as an AI Engineer, Data Scientist, or Applied Researcher.

- Solid Python skills and experience with one or more of: Hugging Face Transformers, LangChain, LlamaIndex, or Weaviate.

- Familiarity with Azure cloud services (e.g., Key Vault, Blob Storage, AI Search, or ML Studio) and with FastAPI.

- Working knowledge of CI/CD and containerized deployment (e.g., Docker, GitHub Actions, or Azure DevOps).

- Working knowledge of vector databases and embedding pipelines (Weaviate, pgvector, Pinecone, Milvus, etc.).

- Ability to evaluate and improve model performance with classic NLP and modern transformer-based techniques.

- Experience with at least one of: PyTorch, Optuna, PEFT/LoRA, scikit-learn, NumPy, pandas, or NLTK.

- Understanding of data analytics and reproducible evaluation practices.

- Comfortable using AI-assisted coding tools (e.g., GitHub Copilot, Claude, or ChatGPT) responsibly to accelerate delivery.

Nice to have:

- Academic or research background (e.g., in NLP, information retrieval, or data science).

- Familiarity with visualization libraries, data lineage systems, or basic React development.

- Prior experience deploying AI applications on Azure or in other regulated cloud environments.

What we offer:

- The opportunity to build real-world AI tools grounded in academic research and social impact.

- Close collaboration with experienced engineers and researchers from the University of St. Gallen ecosystem.

- Authorship credit on public research and technical outputs.

- A flexible, mission-driven environment that emphasizes open collaboration and responsible AI practices.