Senior Data Engineer

intro

31 days ago

Role details

Contract type: Permanent contract

Employment type: Full-time (> 32 hours)

Working hours: Regular working hours

Languages: English

Job location

Tech stack

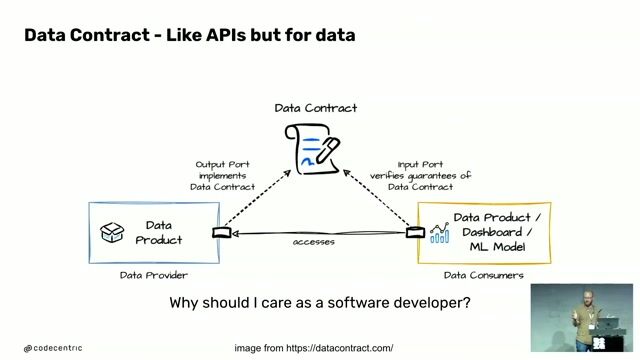

API

Airflow

Amazon Web Services (AWS)

Big Data

Google BigQuery

Cloud Computing

Code Review

Databases

Information Engineering

Data Governance

ETL

Data Transformation

Data Systems

Dimensional Modeling

GitHub

Python

Monte Carlo Methods

Software Engineering

SQL Databases

Technical Debt

Containerization

Data Delivery

Looker Analytics

Software Version Control

Docker

Job description

- Designing, developing, and optimising scalable ETL/ELT pipelines, ensuring reliable data delivery from diverse sources such as APIs, transactional databases, and file-based endpoints.

- Building and maintaining a robust Data Platform using tools like Airflow, dbt, and GCP services such as Cloud Functions, Cloud Run, and BigQuery.

- Collaborating with product and engineering teams to design data models and workflows that drive decision-making and analytics across the company.

- Mentoring junior team members and championing best practices in data engineering, including code reviews, testing, and pipeline orchestration.

- Tackling technical debt by modernising outdated code and improving efficiency within the data stack.

- Implementing data governance policies, including standards for data quality, access controls, and classification.

- Be part of a company that's leading innovation in FinTech, with ambitious plans for 2025 and beyond.

- Shape a brand-new Engineering team and contribute to building a cutting-edge Data Platform.

- Join an organisation that prioritises diversity, inclusion, and equity, fostering a supportive workplace culture.

- Work on high-impact projects with the latest technologies in data and analytics.

Requirements

This is an opportunity to work on cutting-edge, data-driven solutions as part of a collaborative, distributed team. If you're a skilled Data Engineer with a passion for designing high-quality systems and mentoring others, this could be the perfect role for you.

- Extensive experience in data engineering or analytics engineering, with a track record of building and optimising complex pipelines in big data environments.

- Proficiency in Python and SQL for data transformation and manipulation.

- Strong knowledge of modern data stack tools, including Airflow, dbt, and cloud platforms like GCP or AWS.

- Familiarity with data modelling concepts and warehousing best practices, including dimensional modelling.

- Clear and concise communication skills in English, with the ability to work effectively as part of a global, distributed team.

- Experience delivering data solutions using software engineering principles, including version control (GitHub), CI/CD pipelines, and containerisation (Docker).

- A proactive approach to problem-solving, with the ability to identify optimisation opportunities and drive continuous improvement.

Desirable Skills

- Experience with additional tools like Looker, Monte Carlo, or Hevo Data.

- Prior experience in Financial Services or a similar industry.

About the company

intro Consulting Ltd are proud to represent this forward-thinking FinTech client. We act as their trusted recruitment partner, connecting top talent with meaningful opportunities.