ML Security Tools & Threat Modeling Engineer

Job description

Join our Innovation Team, where we explore cutting-edge concepts at the intersection of Machine Learning and Security.

Our mission is to develop forward-looking solutions (such as model protection, privacy-preserving ML, security for agentic AI, and anomaly detection) that will later be integrated into our Edge products. This work calls for strong innovation skills combined with a hands-on mindset.

In this role, you will develop security tools and frameworks for Bring Your Own Model (BYOM) workflows and perform threat modeling for ML pipelines, ensuring proactive detection of vulnerabilities and compliance with emerging ML security standards.

Responsibilities:

- Build security scanning tools for ML artifacts and deployment workflows.
- Design secure APIs for model integration on embedded platforms.
- Perform threat modeling for ML systems (e.g., data poisoning, evasion, and prompt injection attacks).
- Implement monitoring solutions for model integrity and anomaly detection.
- Ensure compliance with the NIST AI Risk Management Framework (AI RMF) and similar standards.
- Collaborate with internal teams to integrate security checks into development pipelines.

Requirements:

- MSc or PhD in Computer Science, Cybersecurity, or Cryptography and a strong interest in applied ML, OR
- MSc or PhD in Machine Learning and an interest in cybersecurity.
- Strong Python development skills.
-

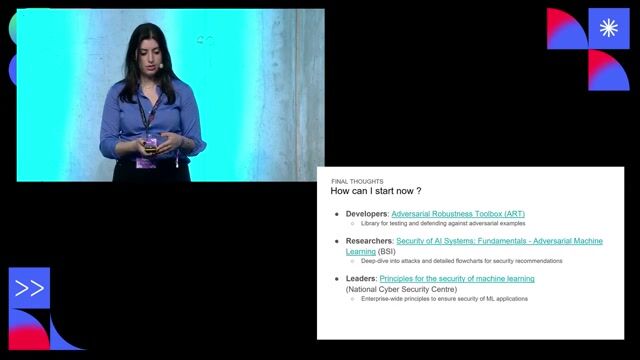

Experience with threat modeling methodologies adapted for ML systems.
- Knowledge of adversarial ML attacks and defenses.
- Familiarity with secure API design and integration.
- Understanding of compliance frameworks (NIST AI RMF, ISO/IEC AI security standards).

Please note: The successful candidate may be responsible for security-related tasks, and the assignment may fall within the scope of security certifications. A conscientious and reliable way of working is therefore essential.