PhD - Urban navigation solution based on an optimized fusion of data from video and inertial sensors

Job description

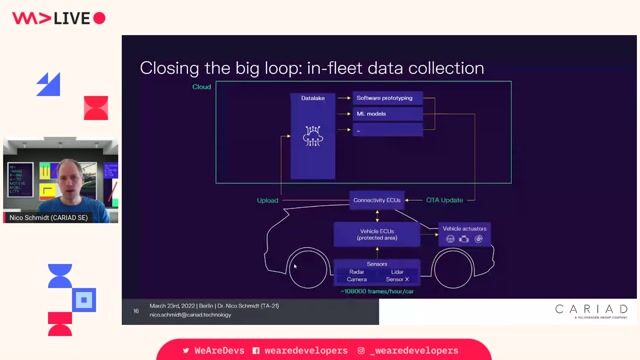

Navigation for autonomous and airport vehicles in urban or complex environments is challenging because GNSS (Global Navigation Satellite System) signals are often degraded or unavailable. Such degradation can result from environmental factors (urban canyons, tunnels, underground parking), intentional interference (jamming, spoofing), or a complete absence of signals in specific contexts. To address these limitations, integrating data from complementary sensors, such as video cameras and Inertial Navigation Systems (INS), has emerged as a promising way to compensate for the shortcomings of traditional GNSS navigation systems. One option in urban environments is to use video sensors together with SLAM (Simultaneous Localization And Mapping) algorithms for road vehicle navigation. For the cases of GNSS signal degradation or unavailability described above, we propose to focus on a solution that integrates video and inertial measurements in a robust and reliable manner.

This thesis aims to study and develop an urban navigation solution based on an optimized fusion of data from video and inertial sensors. The objective is to design a high-performance algorithm capable of addressing the specific constraints of urban and airport environments while ensuring the level of accuracy and integrity required by the targeted application.

In the first phase, a Visual-Inertial SLAM algorithm will be developed based on an existing algorithm. This algorithm will integrate visual information captured by a single camera with inertial data to simultaneously estimate position and orientation and build a map of the environment. Particular attention will be given to managing uncertainties, ensuring robust data fusion, and enabling the system to function in dynamic and complex environments.
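As a rough illustration of what visual aiding contributes to inertial data, the toy sketch below fuses a biased accelerometer with position fixes from a hypothetical visual front end in a one-dimensional Kalman filter. It is not a Visual-Inertial SLAM implementation and not the algorithm the thesis will build on. The vehicle is assumed stationary, and the bias, noise levels, and rates are illustrative assumptions, not figures from this posting.

```python
def predict(state, cov, accel, dt, sigma_accel):
    """Propagate [position, velocity] and its covariance with one IMU sample."""
    p, v = state
    p00, p01, p11 = cov  # symmetric 2x2 covariance stored as (P00, P01, P11)
    state = (p + v * dt + 0.5 * accel * dt**2, v + accel * dt)
    q = sigma_accel**2  # assumed white accelerometer noise variance
    cov = (
        p00 + 2 * dt * p01 + dt**2 * p11 + 0.25 * dt**4 * q,
        p01 + dt * p11 + 0.5 * dt**3 * q,
        p11 + dt**2 * q,
    )
    return state, cov


def update(state, cov, visual_position, sigma_visual):
    """Correct the state with a position fix from the (hypothetical) visual front end."""
    p, v = state
    p00, p01, p11 = cov
    s = p00 + sigma_visual**2          # innovation variance
    k0, k1 = p00 / s, p01 / s          # Kalman gain for H = [1, 0]
    innovation = visual_position - p
    state = (p + k0 * innovation, v + k1 * innovation)
    cov = ((1 - k0) * p00, (1 - k0) * p01, p11 - k1 * p01)
    return state, cov


def simulate(use_visual, accel_bias=0.1, dt=0.01, steps=1000, visual_every=10):
    """Return the final position estimate of a stationary vehicle (true position 0)."""
    state, cov = (0.0, 0.0), (0.01, 0.0, 0.01)
    for k in range(steps):
        # The accelerometer reads only its bias because the vehicle does not move.
        state, cov = predict(state, cov, accel_bias, dt, sigma_accel=0.2)
        if use_visual and (k + 1) % visual_every == 0:
            state, cov = update(state, cov, visual_position=0.0, sigma_visual=0.05)
    return state[0]


drift_only = simulate(use_visual=False)
fused = simulate(use_visual=True)
```

With these assumed values, pure inertial integration drifts by about 5 m after 10 s, while the visually aided estimate stays far closer to the true position. A realistic filter would also estimate the accelerometer bias rather than absorb it as noise.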

The second phase of the thesis will focus on developing an integrity monitoring system. This module will ensure the reliability of the navigation outputs by detecting and assessing errors or anomalies in sensor data. A methodology will be implemented to detect unacceptable errors by means of dedicated monitors and to compute protection levels adapted to this type of solution.
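To make these two ingredients concrete, the sketch below shows a scalar innovation monitor and a fault-free protection level under a zero-mean Gaussian error assumption. The function names, parameters, and probabilities are hypothetical examples. The thesis is expected to define monitors and protection levels suited to Video/INS errors, which may well not be Gaussian.

```python
from statistics import NormalDist


def two_sided_quantile(prob: float) -> float:
    """Return k such that P(|z| > k) = prob for a standard normal z."""
    return NormalDist().inv_cdf(1.0 - prob / 2.0)


def innovation_monitor(innovation: float, sigma: float, p_false_alarm: float) -> bool:
    """Return True when the normalized innovation exceeds the detection threshold."""
    threshold = two_sided_quantile(p_false_alarm)
    return abs(innovation) / sigma > threshold


def protection_level(sigma_position: float, integrity_risk: float) -> float:
    """Fault-free bound that the position error exceeds with probability integrity_risk."""
    return two_sided_quantile(integrity_risk) * sigma_position


# Example values (assumptions): 1e-3 false-alarm rate, 1e-7 integrity risk.
nominal_flagged = innovation_monitor(0.5, sigma=1.0, p_false_alarm=1e-3)
faulty_flagged = innovation_monitor(5.0, sigma=1.0, p_false_alarm=1e-3)
pl = protection_level(sigma_position=0.5, integrity_risk=1e-7)
```

The protection level is then compared with an alert limit set by the application: the solution is declared available only when the protection level is below that limit.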

A significant aspect of this research will involve defining the performance specifications of the proposed solution. These specifications will be tailored to meet the operational needs of autonomous road vehicles and airport applications (e.g., runway navigation or taxiing). An evaluation and validation framework will be established to assess the system's ability to meet these specifications in various scenarios.

The core of the thesis will focus on the integrity analysis of video-based navigation and the prediction of its performance. The key tasks will include analyzing failure modes that induce large errors, nonlinearities, error correlation, and scenarios with a high number of biased measurements. These analyses are particularly challenging due to the complexity of the processing applied to video observations. The magnitude of errors at each stage of this complex processing must be quantified, and their propagation must be well understood. In particular, quantifying errors in feature localization from the observed video stream will be essential.
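As a minimal example of such error propagation, assume a pinhole camera with focal length `focal_px` (in pixels) observing a feature at a known depth. Since the lateral coordinate is X = Z (u - c_x) / f, a first-order propagation maps a pixel localization error sigma_u to a lateral error sigma_X = (Z / f) sigma_u. The function names and numbers are illustrative assumptions, and a real pipeline would propagate full covariances through every processing stage.

```python
def bearing_error_std(focal_px: float, sigma_px: float) -> float:
    """Bearing error (rad) near the optical axis, where d(theta)/du = 1/f."""
    return sigma_px / focal_px


def lateral_error_std(depth_m: float, focal_px: float, sigma_px: float) -> float:
    """First-order lateral position error (m) of a feature at the given depth."""
    return depth_m / focal_px * sigma_px


# Assumed example: 0.5 px localization error, 800 px focal length, feature 20 m away.
sigma_theta = bearing_error_std(focal_px=800.0, sigma_px=0.5)
sigma_x = lateral_error_std(depth_m=20.0, focal_px=800.0, sigma_px=0.5)
```

The lateral error grows linearly with depth, so distant features constrain position less tightly than nearby ones for the same pixel-level accuracy.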

Additionally, the accuracy and integrity performance of the Video/INS solution will be compared with that of a GNSS/INS solution in different contexts. A detailed analysis will be conducted to evaluate the strengths and limitations of each approach, focusing on scenarios where GNSS is unavailable or degraded. These scenarios will include jamming and environments with blocked GNSS signals. The benefits of video in such cases will be thoroughly examined to highlight the added value of this hybrid solution.

This thesis, positioned at the intersection of inertial navigation, computer vision, and integrity monitoring research, offers an innovative scientific and technological contribution to addressing the challenges of navigation in urban and complex environments. The expected outcomes include a high-performance Video/INS hybridization algorithm, a tailored integrity monitoring method, and a rigorous evaluation framework for hybrid systems in diverse operational conditions.

1. Development of a Visual-Inertial SLAM (Simultaneous Localization And Mapping) solution for road vehicle applications:

- Definition of an algorithm integrating Video (monocular cameras) and inertial data (accelerometers and gyroscopes).

- Validation and performance assessment of the solution in urban environments.

2. Development of an integrity monitoring solution:

- Definition of performance criteria (accuracy, continuity, availability, robustness) based on proposed specifications for urban road navigation.

- Study and identification of anomalies and failure modes of video and inertial sensors.

- Definition of an integrity monitoring algorithm for the Video/INS navigation solution.

- Development of a method for computing protection levels.

- Performance analysis in various urban environments (suburban, dense, highly dense, tunnels) and across multiple scenarios (degraded GNSS, spoofed GNSS, absent GNSS).

- Study of the benefits of video-based navigation in these environments and scenarios.

Expected scientific contribution:

- An innovative sensor fusion method combining video and inertial data, specifically designed for complex urban environments.

- A robust and accurate Visual-Inertial SLAM algorithm with a strong focus on integrity.

- A comparative analysis of Video/INS and GNSS/INS solutions.

- A methodological framework for evaluating the performance of hybrid Video/INS systems in degraded environments.

Requirements

Master's degree in electrical/telecommunications/electronic engineering (or equivalent) with a background in estimation theory and signal processing.

Knowledge of GNSS and aerospace systems would be appreciated.