Azure Cloud & Databricks Specialist

Job description

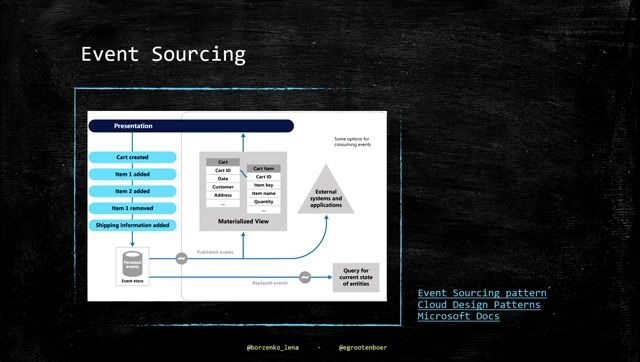

The Data Transport team contributes to building and maintaining the Service Buses and integration connectors that enable reliable and secure data exchange between source and target systems. This includes developing new interfaces, optimizing existing ones, and ensuring robust monitoring and error handling. From the Data Layer perspective, we build a standardized layer that facilitates all operational communication between applications. The connectors between the applications and the layer are built in C#, and the layer itself consists of a Postgres database.

The Data Transport Module (DTM) is a core component of our data platform ecosystem, designed to facilitate secure, reliable, and efficient delivery of data from the platform to consuming applications. As organizations increasingly adopt a product-based approach to data, the DTM ensures that curated data streams and data products can be seamlessly transported to their intended destinations while preserving quality, lineage, and governance standards.

The primary business purpose of the Data Transport Module is to enable controlled and standardized movement of data products from the platform to applications that rely on them for decision-making, reporting, analytics, or operational processes.

By abstracting transport complexity, the module:

- Ensures consistent data delivery across different applications and business domains.

- Reduces integration effort and accelerates time-to-value for new data products.

- Strengthens trust through governance, monitoring, and compliance with security standards.

The Central Data & Analytics department at Vanderlande is tasked with extending, operating, and further maturing Vanderlande's data platform. We provide Data & Analytics products and services primarily to internal customers, while also supporting our digital service portfolio. Following a hub-and-spoke model, we establish centralized standards that are then adopted and implemented by decentralized teams within the business.

At the heart of our efforts lies the Data Platform, the core product we are continuously enhancing. This Azure-based platform, built on Data Mesh principles, enables us to deliver valuable Data Products by transforming, processing, combining, and enriching data from various source systems, ultimately creating a reliable source of truth for our customers. To enable efficient data analysis and visualization, we actively govern and maintain the Vanderlande Qlik Servers and Power BI Premium capacity. In addition, we provide templates, establish a clear workflow, and foster a community of experts.

Our work centers on our Federated Governance model, with the Central Data & Analytics organization as the hub serving the spokes. Our scope encompasses Data Governance, Platform Operations, Core Platform Development, and Full-Stack Development. The technologies we use span the Azure stack and the Databricks ecosystem, ensuring seamless integration and optimal use of resources. By applying advanced analytics techniques and the latest technology, we continuously improve and innovate the Data Platform.

What we offer you:

- Challenging assignments where you can make an impact and fully leverage your technical expertise.

- Personal guidance from both your consultant and the YER back office, ensuring you always have the right support.

- Growth and development opportunities, including participation in the YER Talent Development Programme with your own dedicated coach.

- International support such as Dutch language lessons, assistance with taxes, and housing guidance, helping you feel at home quickly.

- An open and collaborative culture focused on teamwork, results, and knowledge sharing.

- Networking opportunities with other tech professionals from leading multinationals.

- Inspiring events and masterclasses featuring renowned speakers and top companies.

At YER, you don't just get a job, you gain an environment where you can grow, innovate, and truly make a difference.

You will play a key role in designing, developing, deploying, and integrating cloud-native applications and data solutions that support our business goals. You will:

- Design and develop scalable applications using Azure Serverless Functions, App Services, and .NET/C#.

- Implement messaging and event-driven architectures using Azure Service Bus and Event Grid.

- Deploy applications using Azure Container Apps or Docker.

- Maintain APIs using Azure API Management.

- Develop and maintain data pipelines and analytics solutions using Databricks, Python, PySpark and Postgres.

- Manage data governance and access using Unity Catalog.

- Collaborate with cross-functional teams in an Agile environment.

- Take initiative and work independently to drive tasks to completion.

- Collaborate with architects, DevOps engineers, and business stakeholders to ensure seamless and scalable data transport across the enterprise landscape.

Does this role match your personality and ambitions? Apply directly. Do you have questions? Wesley will be happy to help.

Wesley Jeoffrey

Requirements

We are looking for technically skilled, self-driven engineers with hands-on experience in Azure cloud services and Databricks to join our Data Layer & Data Transport team.

Azure Cloud

- Azure Service Bus

- Azure Event Grid

- Azure Serverless Functions

- Azure API Management

- Azure App Service

- .NET/C# application development

- Application deployment via Azure Container Apps or Docker

Databricks & Data Engineering

- Python

- Postgres

- Unity Catalog

- Lakebase (optional but a plus)

Other Requirements

- Available to work on-site in Veghel at least on Tuesdays, Wednesdays, and Thursdays

- Experience working in Agile teams

- Strong sense of ownership and ability to work independently