Apache Spark Application Developer

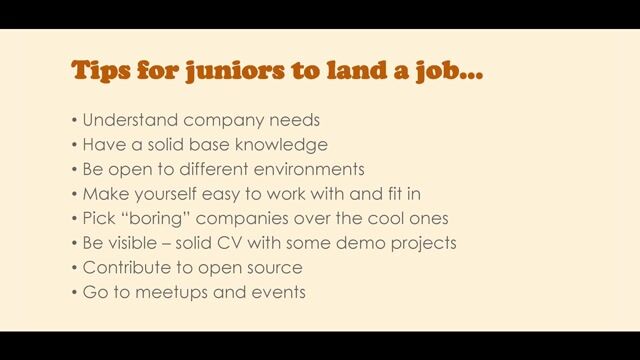

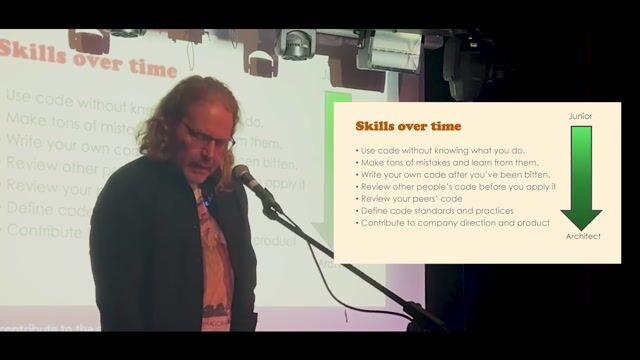

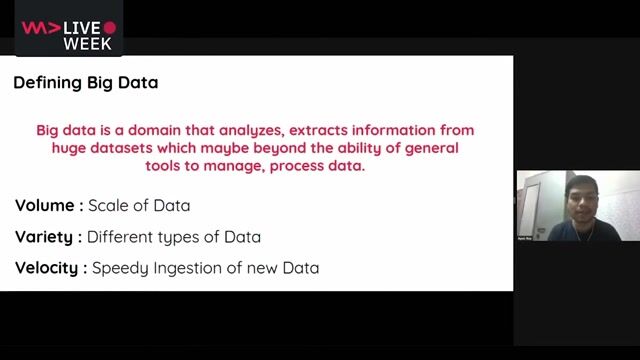

Project Role: Application Developer
Project Role Description: Design, build, and configure applications to meet business process and application requirements.
Management Level: 10
Work Experience: 4-6 years
Work Location: Gurugram
Must Have Skills:
Good To Have Skills:

Job Requirements

Key Responsibilities:
A. Building robust data pipelines that can execute at scale
B. Providing expertise to the team and adapting best practices
C. Optimising ETL pipelines for performance
D. Solving problems with the use of data engineering
E. Assisting with the development of target-state data pipelines using big data technologies under appropriate governance and an approved framework
F. Assisting in the writing and maintenance of process, technical, and business rules documentation
G. Proactive application of continuous improvement in

Technical Experience:
A. 5 years of experience in software development, solution architecture, and data engineering in multiple environments
B. 3 years of professional experience using Apache Spark
C. Experience using analytic tools, including Jupyter Notebooks and Apache Superset
D. Experience in Python and PySpark or SparkSQL, Scala, or Bash/shell scripting
E. Expert with CI/CD, TDD, and BDD
F. Cloud experience with AWS and Azure or GCP

Professional Attributes:
A. Excellent communication skills and eagerness for personal development; can work under pressure
B. Can work independently with the client

Additional Information:
A. Able to report project status, risks, issues, and dependencies
B. Must have experience leading teams
15 years of full-time education