Senior Data Engineer (Python, Spark, Kafka)

Role details

Job location

Tech stack

Job description

The mission of Roku's Data Engineering team is to develop a world-class big data platform that empowers both internal and external partners to leverage data and drive business growth. The team works closely with business stakeholders and engineering colleagues to collect, transform and surface metrics that are critical to the success of new and existing initiatives.

As a Senior Data Engineer in the Viewer Product Device & Themed Experiences team, you'll play a pivotal role in designing data models and building scalable pipelines to capture business metrics across Roku devices, Roku Powered TVs, web, and mobile clients. This work is essential to helping Roku understand which features resonate most with users and how we can continue to improve their experience. About the Role

Roku pioneered TV streaming. We connect users to the content they love, enable publishers to build and monetise large audiences, and give advertisers unique opportunities to engage consumers. Roku streaming players and Roku TV models are available worldwide through direct retail and licensing partnerships with TV brands and pay-TV operators.

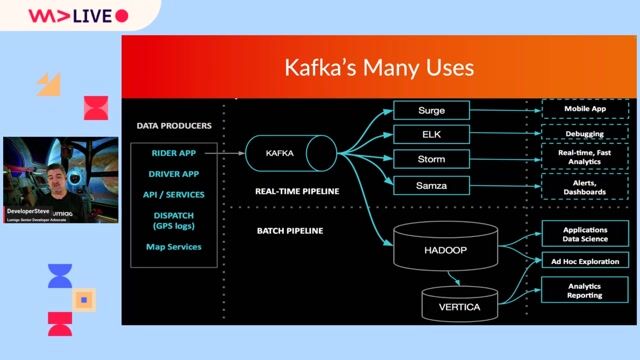

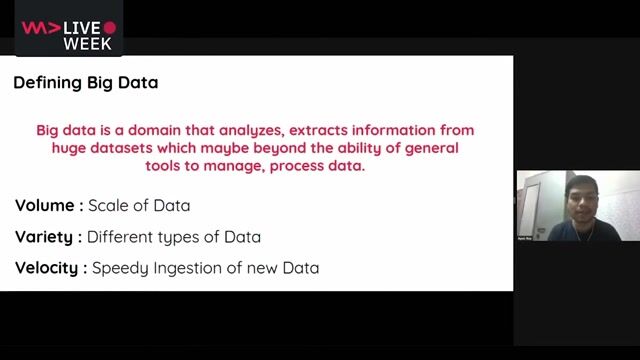

With tens of millions of devices sold across multiple countries, thousands of streaming channels, and billions of hours watched, a scalable, reliable and fault-tolerant big data platform is critical to our continued success.

This role is offered on a hybrid basis, based from our Cambridge Office, UK. What You'll Be Doing

- Building highly scalable, fault-tolerant distributed data processing systems (batch and streaming) that handle tens of terabytes of data each day, supporting a petabyte-scale data warehouse.

- Designing and developing robust data solutions, streamlining complex datasets into simplified, self-service models.

- Developing pipelines that ensure high data quality and resilience to imperfect source data.

- Defining and maintaining data mappings, business logic, transformations and data quality standards.

- Debugging low-level systems, measuring performance and optimising large production clusters.

- Taking part in architecture discussions, influencing the product roadmap, and owning new initiatives from concept to delivery.

- Maintaining and evolving existing platforms, introducing modern technologies and architectures where appropriate.

Requirements

- Strong SQL skills.

- Proficiency in at least one scripting language - Python is required.

- Proficiency in at least one object-oriented language.

- Experience with big data technologies such as HDFS, YARN, MapReduce, Hive, Kafka, Spark, Airflow, or Presto.

- Experience with AWS, GCP, or Looker (advantageous but not essential).

- Solid background in data modelling, including the design, implementation and optimisation of conceptual, logical, and physical models for scalable architectures.

- A degree in Computer Science (BS required; MS preferred).