Data Engineer I

Role details

Job description

The Data Track exists to provide high-quality, trustworthy payments data that powers business decisions and enables great experiences for travelers and partners, aligning Fintech foundations with robust governance and reliable delivery across reporting and analytics consumers.

Mission: Provide high-quality payments data that powers impactful decisions and enables exceptional experiences for travelers and partners.

Vision: Achieve "Flawless Payments Analysis and Reporting" across Fintech by ensuring stakeholders can trust and act on data with confidence.

Scope: Govern, acquire, cleanse, enrich, store, and distribute comprehensive, reliable transactional data; additionally manage card data and ensure PCI DSS compliance.

Core capabilities: Operate and evolve the data platform (consumption, transformation, enrichment), deliver reports/dashboards/alerts, improve data quality and lineage, and support analytics and machine learning use cases.

The Junior Data Engineer is responsible for supporting the development and delivery of end-to-end data solutions. The incumbent supports solution envisioning and technical design, and drives hands-on implementation. Through constant cross-functional interaction, the incumbent also helps influence, differentiate, and guide business and technology strategies as they relate to data.

- Drive efficiency and resilience by mapping data flows between systems and workflows across the company

- Ensure standardisation by following design patterns in line with global and local data governance requirements

- Support real-time internal and customer-facing actions by developing real-time event-based streaming data pipelines

- Enable rapid data-driven business decisions by supporting development of efficient and scalable data ingestion solutions

- Drive high-value data by connecting disparate datasets from different systems

- Monitor and follow relevant SLIs and SLOs

- Handle, mitigate and learn from incidents in a manner that improves the overall system health

- Ensure ongoing resilience of data processes by monitoring system performance and proactively identifying bottlenecks, potential risks, and failure points that might degrade overall quality

- Write readable and reusable code by applying standard patterns and using standard libraries

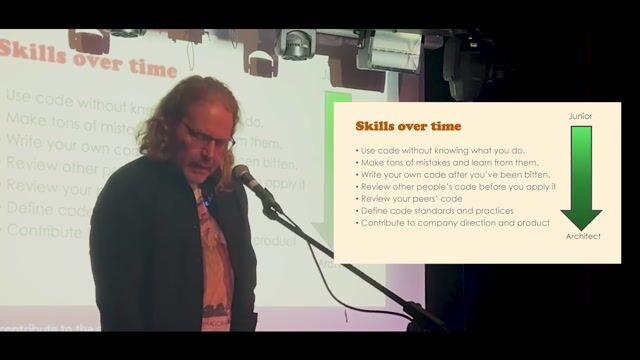

- Continuously evolve own craft by keeping up to date with the latest developments in data engineering and related technologies, and upskilling on these as needed.

Requirements

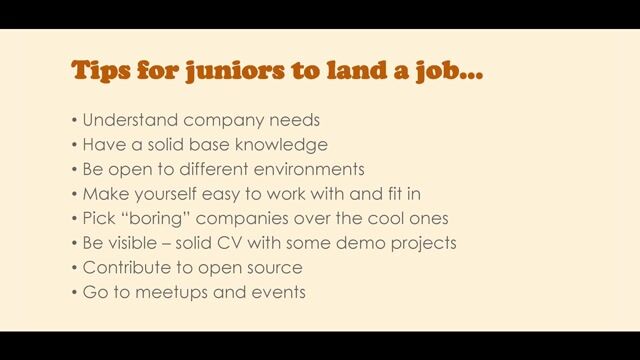

Do you have experience in TOGAF?, Do you have a Master's degree?, * Bachelor's or Master's degree in Computer Science or related field

- 1-3 years of relevant job experience

- Entry-level exposure to data engineering or a related field using a server-side programming language, preferably Scala, Java, Python, or Perl

- Entry-level exposure to building data pipelines and transformations at scale, with technologies such as Hadoop, Cassandra, Kafka, Spark, HBase, MySQL

- Basic knowledge of data modeling methods based on best practices, e.g. TOGAF

- Basic knowledge of data quality requirements and implementation methodologies

- Excellent English communication skills, both written and verbal.