Google Cloud Platform

Job description

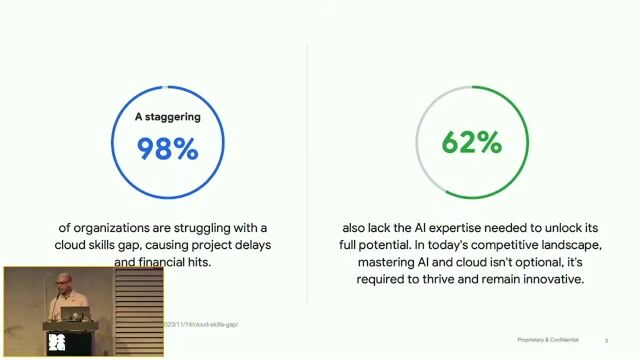

We are seeking a skilled and strategic Data Engineer to take ownership of our machine learning projects on the Google Cloud Platform (GCP). The ideal candidate is a hands-on expert in data science who can also bridge the gap between technical teams and business stakeholders.

You will be responsible for the entire lifecycle of data science projects, from conceptualization and stakeholder communication to model development, deployment on GCP, and performance monitoring. You will be expected to bring a senior-level perspective to the team and help set the standard for analytical excellence within the company.

- Project Leadership & Mentorship: Drive data science projects from inception to deployment, providing technical guidance and mentorship to other team members.

- Business Communication: Act as the primary point of contact between the data science team and business units. Translate business challenges into data science problems and clearly communicate complex technical results and their business implications to non-technical stakeholders.

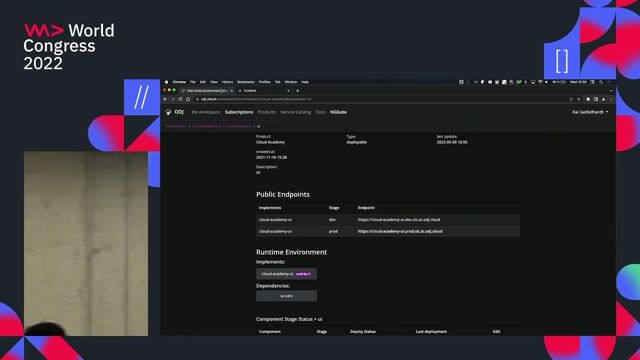

- End-to-End Solution Design: Architect, build, and deploy scalable machine learning models and data-driven solutions using Google Cloud Platform services (e.g., Vertex AI, BigQuery, Cloud Storage, Dataflow).

- Hands-On Development: Write high-quality, production-ready code in Python for data analysis, feature engineering, model training, and evaluation.

- Project Management: Define project scopes, timelines, and deliverables. Manage project priorities and ensure the timely delivery of high-impact solutions.

- Technical Strategy: Stay current with the latest advancements in machine learning, AI, and Google Cloud technologies, and drive the adoption of best practices in MLOps and data science.

Requirements

- Proven Experience: A strong track record of successfully leading complex data science projects from concept to production.

- Google Cloud Expertise: Demonstrable, hands-on experience with the GCP data and ML stack. Essential services include:

  - Vertex AI (Pipelines, Training, Endpoints)

  - BigQuery & BigQuery ML

  - Cloud Storage, Pub/Sub, Dataflow/Dataproc

- Technical Proficiency:

  - Expert-level programming skills in Python and proficiency with data science libraries (Pandas, NumPy, Scikit-learn, etc.).

  - Strong experience with deep learning frameworks such as TensorFlow or PyTorch.

  - Solid understanding of machine learning algorithms (e.g., classification, regression, clustering, NLP) and statistical principles.

- Leadership & Communication:

  - Demonstrated experience in managing the full lifecycle of a data science project.

  - Excellent verbal and written communication skills, with a proven ability to present complex technical concepts to both technical and non-technical audiences.

Preferred Qualifications (Nice-to-Have)

- Google Cloud Professional Machine Learning Engineer or Professional Data Engineer certification.

- Experience with MLOps principles and tools (e.g., CI/CD for models).

- Experience with Infrastructure as Code (e.g., Terraform).

- Experience working in an Agile/Scrum development environment.