Edge Deployment Engineer (AI & Embedded Systems)

European Tech Recruit

Municipality of Madrid, Spain

4 days ago

Role details

Contract type: Temporary contract

Employment type: Full-time (> 32 hours)

Working hours: Shift work

Languages: English

Experience level: Intermediate

Job location: Municipality of Madrid, Spain

Tech stack

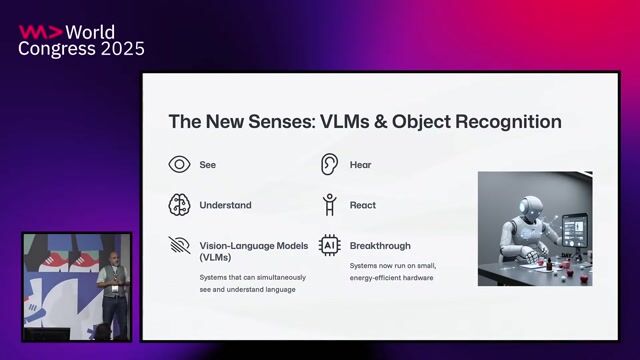

Artificial Intelligence

Artificial Neural Networks

C++

Memory Management

Linux on Embedded Systems

Firmware

Python

Machine Learning

Open Source Technology

Performance Tuning

Real-Time Operating Systems

System Programming

Graphics Processing Unit (GPU)

Delivery Pipeline

Large Language Models

Git

Information Technology

Hardware Acceleration

Software Version Control

Programming Languages

Job description

As an Edge Deployment Engineer, you will be instrumental in bridging the gap between cutting-edge AI research and efficient, real-world execution. You will specialise in optimising and deploying highly compressed Machine Learning and Large Language Models onto resource-constrained, low-latency devices.

In this role, you will:

- Implement and optimise deep-learning models for edge hardware.

- Reduce model size and latency using compression/quantisation.

- Work hands-on with embedded systems and systems programming.

- Utilise key inference optimisation frameworks (e.g., TensorRT, vLLM).

- Write high-performance code in Python, C, or C++.

- Conduct performance profiling on diverse embedded architectures (ARM, GPUs).

- Integrate ML models into final products through team collaboration.

- Maintain development standards: Git, testing, and CI/CD pipelines.

Requirements

- Bachelor's degree or higher in Computer Science, Electrical Engineering, Physics, or related field; or equivalent industry experience

- 3-5 years of hands-on experience in embedded systems, firmware development, or systems programming

- Demonstrated experience optimizing machine learning models for deployment on constrained devices

- Strong proficiency in Python, C, or C++; experience with system-level programming languages is essential

- Solid understanding of quantization techniques and model compression strategies

- Experience with inference optimization frameworks (TensorRT, ONNX Runtime, LLM, vLLM, or equivalent)

- Familiarity with embedded architectures: ARM processors, mobile GPUs, and AI accelerators

- Strong fundamentals in computer architecture, memory management, and performance optimization

- Experience with version control (Git), testing frameworks, and CI/CD pipelines

- Excellent communication and collaboration skills in cross-functional teams

Nice to have

- Master's degree in Computer Science, Electrical Engineering, or related field

- Hands-on experience with large language model inference and deployment

- Experience optimizing neural networks using mixed-precision computation or dynamic quantization

- Familiarity with inference serving frameworks used in edge deployments, such as NVIDIA Triton Inference Server, or similar platforms

- Background in mobile or IoT development

- Knowledge of hardware acceleration techniques and specialized instruction sets (SIMD, NPU-specific optimizations)

- Contributions to open-source embedded AI or ML optimization projects

- Experience with real-time operating systems or embedded Linux environments

Benefits & conditions

- Compensation: Competitive salary, with a signing bonus and a retention bonus at the end of the contract.

- Flexibility: This is a hybrid role with flexible working hours. A relocation package is available if needed.

- Culture: We are a fast-scaling company committed to equal pay, diversity, and an inclusive culture. You'll gain international exposure in a multicultural, cutting-edge environment.