Job location

Municipality of Vitoria-Gasteiz, Spain

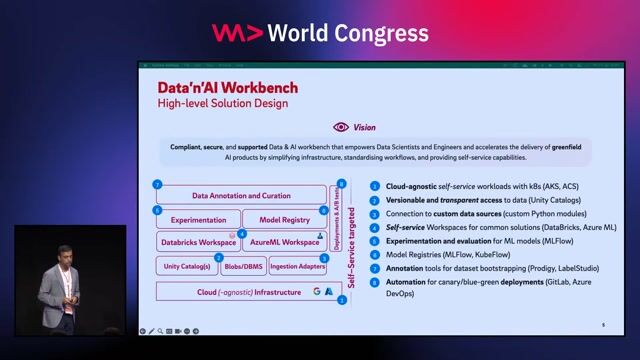

Tech stack

Data Analysis

Continuous Integration

Data Architecture

ETL

Data Vault Modeling

Data Warehousing

DevOps

SQL Databases

Data Ingestion

Azure

Snowflake

Data Management

Stream Analytics

Software Version Control

Storage

Requirements

Strong command of data modeling techniques (e.g., Inmon, Kimball, Data Vault).

Deep expertise in dbt, SQL, and Snowflake (or similar cloud data platforms).

Strong understanding of data architecture, ETL/ELT design, and data warehouse principles.

Ability to grasp complex business processes and translate them into clean, scalable data models.

Data ingestion and processing (Azure Data Factory, Functions / Batch Ingestion, Stream Analytics, etc.).

Experience with DevOps (CI/CD, version control, test automation, workflow orchestration).

Proactive mindset, strong problem-solving skills, and the ability to work independently and lead technical initiatives.

Additional Information

medmix is an equal opportunity employer, committed to the strength of a diverse workforce. 93% of our employees would go above and beyond to deliver results. Do you have the drive to succeed? Join us and boost your career, starting today!

About the company

Company Description

medmix is a global leader in high-precision delivery devices. We occupy leading positions in the healthcare, consumer, and industrial end-markets. Our customers benefit from our dedication to innovation and technological advancement, which has resulted in over 900 active patents. Our 14 production sites worldwide, together with our highly motivated and experienced team of nearly 2'700 employees, provide our customers with uncompromising quality, proximity, and agility. medmix is headquartered in Baar, Switzerland. Our shares are traded on the SIX Swiss Exchange (SIX: MEDX).

Job Description

You will be responsible for designing and implementing reusable, optimized end-to-end data pipelines in partnership with internal technical and business stakeholders. You will work closely with the business teams to gather functional requirements, develop data models and design proposals, and implement and test solutions.

Tasks & duties:

Design and develop robust data pipelines from various source