Databricks Data Engineer

Job description

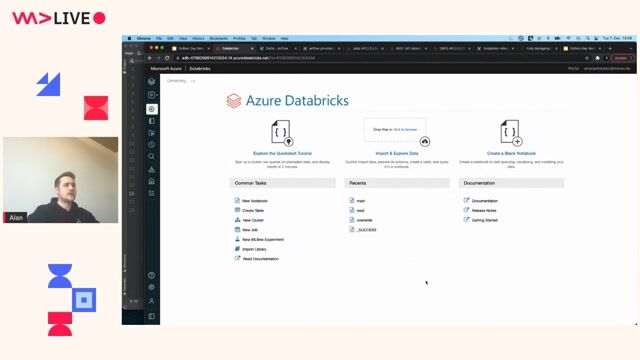

- libraries to accelerate ingestion, transformation, and data serving across the business.
- Work closely with data scientists, analysts, and product teams to create performant and cost-efficient analytics solutions.
- Drive the adoption of Databricks Lakehouse architecture and ensure that data pipelines conform to governance, access, and documentation standards defined by the CDAO office.
- Ensure compliance with data privacy and protection standards (e.g., GDPR).
- Actively contribute to the continuous improvement of our platform in terms of scalability, performance, and usability.

Requirements

What You Bring & Who You Are

- A university degree in Computer Science, Data Engineering, Information Systems, or a related field.
- Strong experience with Databricks, Spark, Delta Lake, and SQL/Scala/Python.
- Proficiency in dbt, ideally with experience integrating it into Databricks workflows.
- Familiarity with Azure cloud services (Data Lake, Blob Storage, Synapse, etc.).
- Hands-on experience with Git-based workflows, CI/CD pipelines, and data orchestration tools like Dagster and Airflow.
- Deep understanding of data modeling, streaming and batch processing, and cost-efficient architecture.
- Ability to work with high-volume, heterogeneous data and APIs in production-grade environments.
- Experience working within enterprise data governance frameworks, and implementing metadata management and observability practices in alignment with governance guidance.
- Strong interpersonal and communication skills, with a collaborative, solution-oriented mindset.
- Fluency in English.

Technologies You'll Work With

- Core: Databricks, Spark, Delta Lake, Python, dbt, SQL
- Cloud: Microsoft Azure (Data Lake, Synapse, Storage)
- DevOps: Bitbucket/GitHub, Azure DevOps, CI/CD, Terraform
- Orchestration & Observability: Dagster, Airflow, Grafana, Datadog, New Relic
- Visualization: Power BI
- Other: Confluence, Docker, Linux

Nice to Have

- Experience with Unity Catalog and Databricks governance frameworks
- Exposure to machine learning workflows on Databricks (e.g., MLflow)
- Knowledge of Microsoft Fabric or Snowflake
- Experience with low-code analytics tools like Dataiku
- Familiarity with PostgreSQL or MongoDB
- Front-end development skills (e.g., for data product interfaces)

Benefits

- Working hours: flexible hours, with 60% remote and 40% at our offices in Madrid, Torre Europa
- Meal allowances, with the option to use them for public transportation or childcare
- Internet compensation to cover home internet costs
- Microsoft ESI Certifications: access to the Enterprise Skills Initiative program
- Training courses and learning resources to support professional growth
- Gym coverage with substantial support for staying active
- Health insurance with extended options for dependents

Seniority level: Not Applicable
Employment type: Full-time
Job function: Information Technology
Industries: Utilities