Software Engineer - Data Infrastructure - Kafka

Canonical Ltd.

6 days ago

Role details

Contract type: Permanent contract

Employment type: Full-time (> 32 hours)

Working hours: Regular working hours

Languages: English

Job location: Remote

Tech stack

Data as a Service

Data Infrastructure

Linux

Distributed Systems

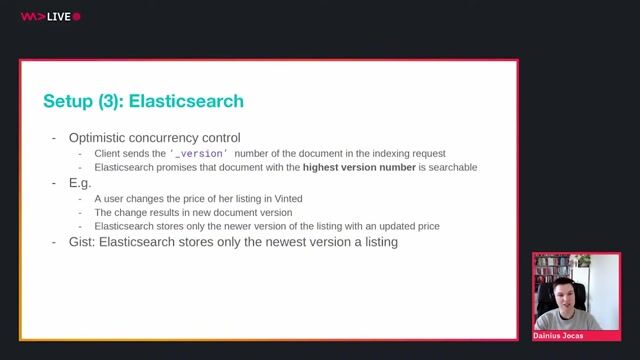

Elasticsearch

Python

PostgreSQL

MongoDB

MySQL

NoSQL

OpenStack

Oracle Applications

Package Management Systems

Redis

Software Engineering

SQL Databases

Spark

Kubernetes

Information Technology

Kafka

Requirements

- Proven hands-on experience in software development using Python

- Proven hands-on experience in distributed systems, such as Kafka and Spark

- A Bachelor's degree or equivalent in Computer Science, STEM, or a similar field

- Willingness to travel up to 4 times a year for internal events

Additional skills that you might also bring

- Experience operating and managing other data platform technologies, SQL (MySQL, PostgreSQL, Oracle, etc.) and/or NoSQL (MongoDB, Redis, Elasticsearch, etc.), at a level similar to DBA expertise

- Experience with Linux systems administration, package management, and infrastructure operations

- Experience with the public cloud or a private cloud solution like OpenStack

- Experience with operating Kubernetes clusters and a belief that it can be used for serious persistent data services

Benefits & conditions

- Fully remote working environment - we've been working remotely since 2004!

- Personal learning and development budget of USD 2,000 per annum

- Annual compensation review

- Recognition rewards

- Annual holiday leave

- Parental Leave

- Employee Assistance Programme

- Opportunity to travel to new locations to meet colleagues twice a year

- Priority Pass for travel and travel upgrades for long haul company events

About the company

Canonical is building a comprehensive automation suite to provide multi-cloud and on-premise data solutions for the enterprise. The data platform team is a collaborative team that develops managed solutions for a full range of data stores and data technologies, spanning from big data, through NoSQL, cache-layer capabilities, and analytics, all the way to structured SQL engines (similar to the Amazon RDS approach). We are facing the interesting problem of fault-tolerant mission-critical distributed systems and intend to deliver the world's best automation solution for delivering managed data platforms. We are looking for candidates from junior to senior level with an interest in, experience with, and a willingness to learn about Big Data technologies, such as distributed event stores (Kafka) and parallel computing frameworks (Spark). Engineers who thrive at Canonical are mindful of open-source community dynamics and equally aware of the needs of large, innovative organisations.

Location: This role can be filled in European, Middle East and African time zones.

What your day will look like

* Collaborate proactively with a distributed team

* Write high-quality, idiomatic Python code to create new features

* Debug issues and interact with upstream communities publicly

* Work with helpful and talented engineers including experts in many fields

* Discuss ideas and collaborate on finding good solutions

* Work from home with global travel for 2 to 4 weeks per year for internal and external events

Canonical is a pioneering tech firm that is at the forefront of the global move to open source. As the company that publishes Ubuntu, one of the most important open source projects and the platform for AI, IoT and the cloud, we are changing the world on a daily basis. We recruit on a global basis and set a very high standard for people joining the company. We expect excellence - in order to succeed, we need to be the best at what we do. Canonical has been a remote-first company since its inception in 2004. Work at Canonical is a step into the future, and will challenge you to think differently, work smarter, learn new skills, and raise your game. Canonical provides a unique window into the world of 21st-century digital business.