Data Engineer | Legal AI Tech Start-up | Full Remote

Role details

Job location

Tech stack

Job description

As a Data Engineer (m/f/d), you support the development of our legal data and search infrastructure end to end. You will design, maintain and optimize robust ETL pipelines that clean and normalize XML-based legal data from multiple jurisdictions while developing scalable data models and metadata enrichment strategies to maximize searchability, semantic relevance and usability of legal information for downstream AI agents and products. A key part of your role will be leveraging generative AI where appropriate to enhance data processing and metadata generation, while continuously benchmarking and tuning database and search performance to ensure efficient, low-latency querying at scale. Working closely with product teams, AI researchers, and legal domain experts, you will deliver high-quality, reliable data solutions that unlock the value of complex, multilingual legal content., You will join our Data Team, working closely with a group of approximately 5 data experts. This highly collaborative team focuses on pushing the boundaries of generative AI, natural language processing, and privacy-preserving machine learning legal solutions. Your Hiring Manager

Felix, our Director of AI & Data Engineering, will guide you through your journey at Noxtua. With deep expertise in AI systems, Felix leads with a passion for innovation and a collaborative approach, ensuring every team member thrives., * Design, build and optimize end-to-end ETL pipelines for legal data from multiple jurisdictions including ingestion, validation, cleaning, transformation, chunking, embedding, and ingestion into vector databases.

- Work extensively with XML-based legal data feeds: parse, validate, normalize, and transform complex XML structures into scalable internal schemas and unified document formats.

- Develop and maintain data models and storage schemas that support continuously updated datasets while ensuring consistency, scalability, and accuracy across diverse datasets and large amounts of data.

- Coordinate data handover and integration from multiple internal and external data providers, including official sources, APIs, and web scraping pipelines, ensuring reliable and timely updates.

- Implement and continuously refine metadata enrichment strategies to maximize searchability, ranking quality, and relevance of legal information in vector databases.

- Build and maintain high-performance search and retrieval infrastructure enabling agent-based systems to call search functions and retrieve the most relevant legal information efficiently.

- Explore and integrate generative AI techniques to enhance data processing workflows such as structured field extraction, metadata generation and document normalization.

- Experiment with different embedding and chunking strategies including evaluation.

- Conduct database performance benchmarking and tuning to ensure efficient query execution and scalability.

- Collaborate with product, AI, and legal domain experts to deliver high-quality, reliable data solutions.

Unser Tech Stack

- Programming Languages: Python

- Data format: XML, parquet

- Vector Search: ElasticSearch, Qdrant

- Graph Databases: Neo4j, Amazon Neptune

- Libraries: HuggingFace, Transformers, NumPy, Pandas, Pydantic, FastAPI, OpenAI & PyTorch

- Deployment Tools: Docker

- Cloud Infrastructure: OTC, AWS

- Pipeline Orchestration: Apache Airflow

- Ticket System: Atlassian JIRA

- Repository: Github

- CI/CD System: GitHub Actions

- Documentation: Confluence

Requirements

Do you have experience in XML?, * Working Permission: EU

- Residence: must be within the EU

- Language: English proficiency at C2 level.

- Experience: in AI development or data engineering with successfully deployed projects

- Data: Expertise in data processing, filtering, and augmentation

- Databases: Expertise in vector databases, data embedding, benchmarking and management

- Programming: Strong Python skills and experience with AI pipelines, * Experience in deploying graph databases

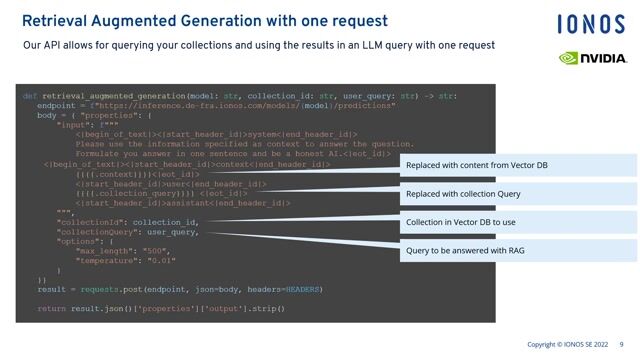

- RAG Systems: Experience in building up AI specific RAG pipelines

- NLP & Generative AI: Familiarity with developing and deploying NLP, generative AI models

- Familiarity with Kubernetes deployments

- Legal background knowledge

Benefits & conditions

- Working hours: Flexible working hours

- Vacation: 26 days + December 24th & 31st off, +1 day for each year of service (up to a maximum of 30 days)

- Remote: 100% remote work possible (given a EU residence), with the flexibility to use our offices in Berlin, Fribourg (Switzerland), Munich, Paris or Zagreb,

- Home Office Setup Budget: €1,000 paid with your first salary to create your ideal remote workspace

- Equipment: Laptop (Lenovo or Mac)

- Discounts: e.g. Urban Sports Club Membership