Data Engineer

Job description

- Data Acquisition & Preparation: Source, create, collect, clean, transform, and structure data for analysis and operational use, including synthetic data generation.

- Build and productionise ML/NLP solutions for legal and business use cases to enable better decision making.

- Feature Engineering & Integration: Develop new features, integrate data from internal/external sources, and create unified views to enhance performance and outcomes.

- Pipeline Development & Automation: Build, optimise, and automate scalable data pipelines using tools like Azure, Fabric, and Databricks; develop reusable components and templates.

- Governance, Quality & Security: Ensure data quality, governance, classification, retention, and robust security measures across the data lifecycle, supporting compliance and auditability. Contribute to standards, code reviews and communities of practice.

- Metadata & Semantic Services: Provide metadata management, define categories and relationships, and enable semantic capabilities for data discovery and interoperability.

- Monitoring & Issue Resolution: Monitor the health and performance of data systems; identify and resolve quality issues, bottlenecks, and anomalies; and validate data across environments.

- Analytics & Machine Learning Support: Apply analytics techniques, visualise data, prepare and serve data for machine learning, and collaborate with data scientists to operationalise models.

- Collaboration, Documentation & Standards: Work with stakeholders to deliver scalable data products, implement DataOps, telemetry, quality checks and CI/CD practices, maintain documentation, and promote adherence to architecture standards.

Requirements

- Ideally educated to degree level in computer science, data analysis, or a similar field

- Strategic and operational decision-making skills

- Ability and willingness to investigate and share new technologies

- Ability to work within a team and share knowledge

- Ability to collaborate within and across teams with differing levels of technical knowledge to support delivery to end users

- Problem-solving skills, including debugging, root cause analysis, and the ability to recognise and solve recurring problems

- Ability to describe business use cases, data sources, management concepts, and analytical approaches

- Experience in data management disciplines, including data integration, modelling, optimisation, data quality, and Master Data Management

- Excellent business acumen and interpersonal skills; able to work across business lines at all levels to influence and effect change to achieve common goals

- Proficiency in the design and implementation of modern data architectures (ideally Azure / Microsoft Fabric / Data Factory) and modern data warehouse technologies (Databricks, Snowflake)

- Experience with database technologies such as RDBMS (SQL Server, Oracle) or NoSQL (MongoDB)

- Knowledge of Apache technologies such as Spark, Kafka, and Airflow for building scalable and efficient data pipelines

- Ability to design, build, and deploy data solutions that explore, capture, transform, and utilise data to support AI, ML, and BI

- Proficiency in data science languages / tools such as R, Python, SAS

- Awareness of ITIL (Incident, Change, Problem management)

#LI-JC1

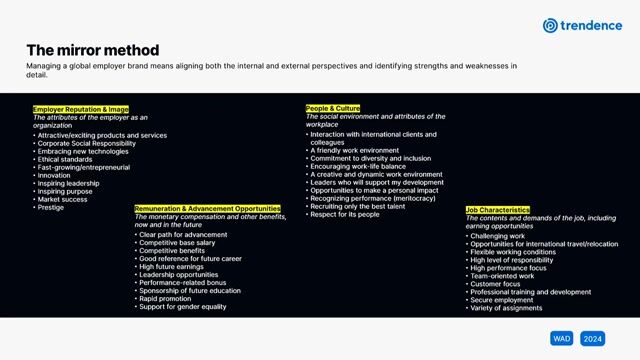

Diversity, Equity and Inclusion

To attract the best people, we strive to create a diverse and inclusive environment where everyone can bring their whole selves to work, have a sense of belonging, and realize their full career potential. Our new enabled work model allows our people to have more flexibility in the way they choose to work from both the office and a remote location, while continuing to deliver the highest standards of service. We offer a range of family-friendly and inclusive employment policies and provide access to programmes and services aimed at nurturing our people's health and overall wellbeing. Find out more about Diversity, Equity and Inclusion here.