AI Solutions Engineer

Job description

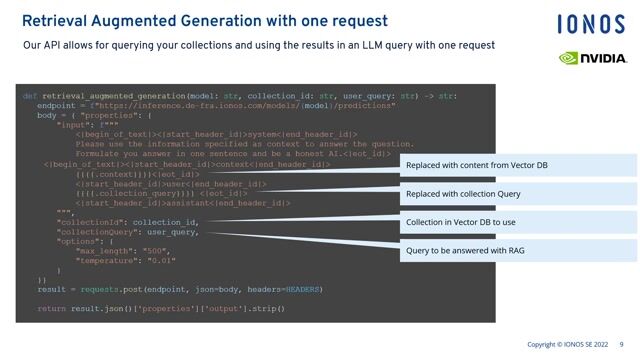

- Own technical onboarding for new customers: data ingestion, indexing, metadata mapping (jurisdiction, authority, recency), and validation.

- Configure and adapt retrieval + reranking pipelines to customer needs (practice area focus, doc structure, internal taxonomies, "what good looks like").

- Implement customer-specific workflows: templates, filters, jurisdiction defaults, citation behavior, permission-aware retrieval, and custom result layouts.

LLM workflows that are production-safe

- Tailor prompting / context engineering to customer requirements (traceability, citation style, explanation depth, fallback behavior).

- Implement safeguards: provenance, source grounding, "no citation, no claim" behavior, and confidence/uncertainty patterns aligned with legal risk.

Evaluation & iteration in the field

- Build lightweight customer-specific eval sets (gold questions, acceptance criteria, "must-not-fail" cases).

- Run fast error analyses and ship fixes: query understanding tweaks, reranker tuning, chunking strategy, duplicate suppression, caching, and retrieval routing.

Performance, cost, reliability

- Keep latency and costs under control with caching, batching, early exit, and sensible fallbacks.

- Monitor quality + usage signals; turn customer feedback into concrete improvements and measurable acceptance checks.

Collaboration & knowledge transfer

- Work closely with Customer Success + legal experts to translate pain points into system changes.

- Document integrations and "deployment recipes" so solutions become reusable product capabilities over time.

Requirements

Do you enjoy taking a strong AI product and making it work beautifully inside a customer's real-world environment: messy data, unique workflows, strict permissions, and high expectations? Are you hands-on, pragmatic, and happiest when you can ship an improvement that a specific legal team feels immediately?

You're comfortable being the "technical bridge" between customers, legal experts, and the core product team: you diagnose issues, propose solutions, implement them, and leave behind clean playbooks so the next deployment gets easier.

- Strong hands-on experience building or adapting search/retrieval systems in production (hybrid retrieval, reranking, query understanding, indexing).

- Proven experience taking LLM workflows from prototype to reliable production use.

- Proficiency in TypeScript/Node.js (our core stack).

- Experience with one or more of: Azure AI Search, pgvector/PostgreSQL, OpenSearch/Elasticsearch (or similar).

- Practical engineering instincts: debugging, performance tuning, careful handling of edge cases, and clear operational thinking.

- Strong communication skills and comfort working directly with customers (technical deep-dives, explaining trade-offs, writing playbooks).

- Proficiency in English; full-time availability.

- Hybrid presence: on-site in Zurich at least two days per week.

Nice to have

- German proficiency (many sources and customer interactions are German-speaking).

- Experience integrating customer data sources / document pipelines (connectors, ETL, access controls).

- Experience with pragmatic eval pipelines (human-in-the-loop labeling, inter-annotator agreement, lightweight dashboards).

- Familiarity with sparse and dense retrieval methods (e.g., BM25 variants and embedding-based retrieval).

- Experience operating services (Docker is a plus).

- Familiarity with our stack: Azure / NestJS / Next.js.

- Knowledge of Swiss / German / US legal systems is a plus.

Benefits & conditions

- Customer-visible impact: your work directly determines whether customers trust the product in daily legal workflows.

- Autonomy & ownership: you'll own deployments end-to-end and shape repeatable "solution patterns."

- Fast learning loop: see real-world failure modes early; help steer product priorities with evidence.

- Compensation: CHF 7'000-11'000 per month + ESOP, depending on experience and skills.