Data Architect

Job description

The Data Architect (Databricks) is responsible for designing and implementing scalable, secure, and high-performance data architectures leveraging the Databricks Lakehouse platform. The role requires deep expertise in data engineering, cloud integration, data modeling, and distributed data processing frameworks such as Apache Spark.

- Design and implement scalable data architectures using Databricks Lakehouse principles.

- Develop and optimize ETL/ELT pipelines for batch and streaming data ingestion.

- Build and manage data solutions using Delta Lake and medallion architecture.

- Develop data pipelines using PySpark, Scala, and SQL.

- Implement Spark Structured Streaming solutions on Azure Databricks.

- Build and query Delta Lake storage solutions.

- Flatten nested structures and process complex datasets using Spark transformations.

- Optimize Spark jobs for performance and cost efficiency.

- Establish reusable frameworks, templates, and best practices for enterprise data engineering.

Cloud & Integration

- Integrate Databricks with cloud platforms such as:

  - Microsoft Azure

  - Amazon Web Services

  - Google Cloud Platform

- Work with Azure services including:

  - Azure Data Factory

  - Azure Data Lake Storage

  - Azure Synapse Analytics

  - Azure SQL Data Warehouse (now dedicated SQL pools in Azure Synapse Analytics)

- Implement orchestration using Azure Data Factory and Databricks Jobs.

- Transfer and process data using Spark pools and PySpark connectors.

Security, Governance & Operations

- Design secure Databricks environments using role-based access control (RBAC) and Unity Catalog.

- Ensure data security, compliance, and enterprise governance standards.

- Implement CI/CD pipelines and Git-based version control for Databricks workflows.

- Perform cluster sizing, cost optimization, and performance tuning.

- Monitor and troubleshoot Databricks environments for reliability.

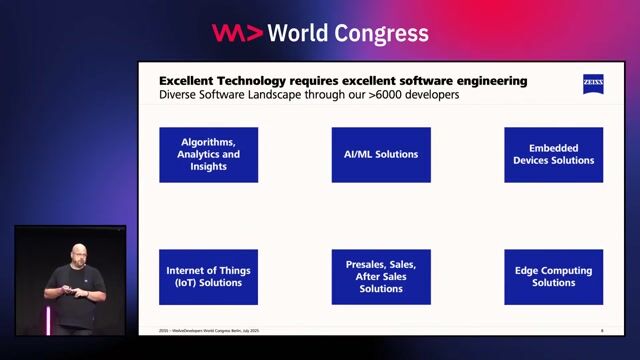

Strategy, Leadership & Presales

- Define enterprise data strategies and roadmaps.

- Evaluate emerging tools and technologies for adoption.

- Architect solution frameworks aligned with business goals.

- Collaborate with sales teams on presales activities including solution design and customer discussions.

- Engage stakeholders and provide technical leadership across cross-functional teams.

Requirements

The ideal candidate brings strong technical leadership, hands-on architecture experience, and the ability to support solutioning and presales initiatives while driving enterprise data strategy.

- 12+ years of experience in data engineering, including hands-on experience with Databricks or Apache Spark-based platforms.

- Strong expertise in PySpark, Spark SQL, and Scala.

- Experience processing streaming data and working with Apache Kafka (preferred).

- Proven experience building batch and real-time ingestion frameworks.

- Deep knowledge of Delta Lake, Delta Live Tables, and medallion architecture.

- Familiarity with enterprise Databricks setups including Unity Catalog and governance models.

- Experience with CI/CD, DevOps, DataOps, and AIOps frameworks.

- Knowledge of MLOps, data observability, and BI tools.

- Experience with Snowflake and/or Microsoft Fabric (added advantage).

- Strong understanding of data modeling, distributed systems, and cloud integration.

Eligibility Criteria

- Bachelor's degree in Computer Science, Information Technology, or related field.

- Proven experience as a Databricks Architect or in a similar senior data engineering role.

- Thorough understanding of Databricks platform architecture.

- Databricks certification (e.g., Certified Data Engineer).

- Expertise in Python, Scala, SQL, and/or R.

- Experience with AWS, Azure, GCP, or Alibaba Cloud.

- Experience in security implementation and cluster configuration.

- Strong analytical, problem-solving, and communication skills.

Preferred Competencies

- Experience working in large-scale enterprise environments.

- Strong stakeholder management and consulting skills.

- Ability to drive innovation and organizational data transformation initiatives.