Matthias Niehoff

Modern Data Architectures need Software Engineering

#1 about 2 minutes

The evolution from data warehouses to data lakes

Data architectures evolved from centralized data warehouses for BI reporting to data lakes that accommodate unstructured data for data science and machine learning.

#2 about 2 minutes

Understanding the modern cloud data platform

Cloud data platforms such as Snowflake and Databricks enabled the shift from ETL to ELT and introduced the data lakehouse concept, which builds on open table formats such as Apache Iceberg.

#3 about 3 minutes

Solving centralization bottlenecks with Data Mesh

Data Mesh applies domain-driven design principles to data, promoting decentralized ownership, data as a product, a self-serve platform, and federated governance to avoid central team bottlenecks.

#4 about 1 minute

Why data engineering needs software engineering discipline

As data systems become production-critical, the Python-heavy data ecosystem requires rigorous software engineering practices beyond simple scripting to build reliable, maintainable software.

#5 about 1 minute

Implementing unit, integration, and data quality tests

Effective data pipelines require a multi-layered testing strategy, including unit tests for logic, integration tests for system connections, and runtime tests to validate data content and quality.
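To make two of these layers concrete, here is a minimal sketch in plain Python. It is not taken from the talk: the `Order` record, `to_euros`, and `check_quality` are hypothetical names chosen for illustration. The unit test covers pure transformation logic, while the quality check runs against the actual rows at pipeline runtime. An integration test against a real database or API is left out because it depends on the target system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    # Hypothetical record type used only for this illustration.
    order_id: int
    amount_cents: int


def to_euros(order: Order) -> float:
    """Pure transformation logic: easy to cover with a unit test."""
    return order.amount_cents / 100


def check_quality(orders: list[Order]) -> list[str]:
    """Runtime data quality check: returns human-readable violations."""
    problems = []
    seen_ids = set()
    for order in orders:
        if order.order_id in seen_ids:
            problems.append(f"duplicate order_id {order.order_id}")
        seen_ids.add(order.order_id)
        if order.amount_cents < 0:
            problems.append(f"negative amount in order {order.order_id}")
    return problems


def test_to_euros() -> None:
    # Unit test: checks the logic without touching any real data source.
    assert to_euros(Order(1, 1250)) == 12.5


# Runtime check: applied to the batch that is actually being processed.
batch = [Order(1, 1250), Order(1, -300)]
violations = check_quality(batch)
```

In a real pipeline, a non-empty `violations` list would typically stop the run or raise an alert, and `test_to_euros` would be collected by a test runner such as pytest.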

#6 about 3 minutes

Managing complex data environments for development and testing

Creating separate dev, test, and prod environments for data is challenging because development often requires access to production-like data, raising issues of data replication, cost, and anonymization.
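One way to approach the anonymization part is to pseudonymize sensitive columns while copying rows into a development environment. The sketch below is an assumption for illustration, not the method described in the talk: the column names, `SECRET_KEY`, and helper functions are hypothetical. It uses a keyed hash so that the same input always maps to the same token, which keeps joins between tables working. Keyed hashing is pseudonymization rather than full anonymization, so it may not be sufficient on its own for regulated data.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secret store, not source code.
SECRET_KEY = b"replace-me-per-environment"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Map a sensitive value to a stable, non-reversible token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # shortened for readability


def anonymize_row(row: dict, sensitive: set[str]) -> dict:
    """Replace sensitive columns and leave all other columns untouched."""
    return {
        column: pseudonymize(str(value)) if column in sensitive else value
        for column, value in row.items()
    }


# Hypothetical production-like rows.
prod_rows = [
    {"customer_email": "a@example.com", "country": "DE", "order_total": 42},
    {"customer_email": "a@example.com", "country": "DE", "order_total": 7},
]
dev_rows = [anonymize_row(row, {"customer_email"}) for row in prod_rows]
```

Both rows in `dev_rows` carry the same token for the shared email address, so aggregations per customer still behave like they do in production.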

#7 about 5 minutes

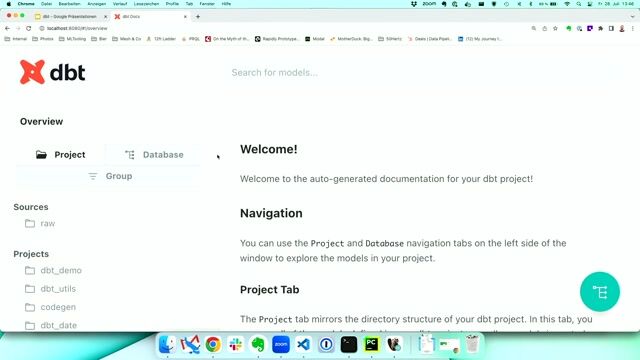

Using the Modern Data Stack and dbt for transformations

The Modern Data Stack applies DevOps principles to data, with tools like dbt (data build tool) enabling engineers to manage data transformations with version-controlled SQL, automated testing, and CI/CD.
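The underlying idea can be imitated with the Python standard library. The sketch below is not dbt and does not use its API. It only mirrors the pattern: a transformation is a SQL `SELECT` kept as text under version control, and a test is a query that returns the rows violating an expectation, so zero rows means the test passes. The table, view, and constant names are hypothetical, and SQLite stands in for the warehouse.

```python
import sqlite3

# Hypothetical "model": a transformation written as SQL and kept in version control.
CUSTOMER_REVENUE_MODEL = """
CREATE VIEW customer_revenue AS
SELECT customer_id, SUM(amount) AS revenue
FROM raw_orders
GROUP BY customer_id
"""

# Hypothetical "test": a query returning the rows that violate an expectation.
NOT_NULL_TEST = "SELECT * FROM customer_revenue WHERE customer_id IS NULL"


def count_failures(conn: sqlite3.Connection, test_query: str) -> int:
    """Run a test query; zero returned rows means the test passes."""
    return len(conn.execute(test_query).fetchall())


# In-memory database with a small raw table, as a stand-in for loaded source data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_orders (customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?)",
    [(1, 10.0), (1, 5.0), (2, 7.5)],
)

# "Build" the model, then run the test against its output.
conn.execute(CUSTOMER_REVENUE_MODEL)
failures = count_failures(conn, NOT_NULL_TEST)
revenue = dict(conn.execute("SELECT customer_id, revenue FROM customer_revenue"))
```

A CI job would run the build and the tests on every change and fail when `failures` is greater than zero.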

#8 about 4 minutes

Using data contracts to stabilize data integration

Data contracts act as a formal API-like agreement between data producers and consumers, ensuring schema stability and data quality by making breaking changes explicit and enforceable in CI/CD pipelines.
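A contract check in CI can be as small as comparing the agreed schema with the schema a producer proposes. The following sketch rests on assumptions: the contract is modeled as a plain mapping from column name to type, and `breaking_changes` is a hypothetical helper, not part of any data contract tool. Removed columns and changed types count as breaking, while added columns do not.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Compare two schema versions and list changes that break consumers."""
    problems = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"column removed: {column}")
        elif new[column] != old_type:
            problems.append(f"type changed: {column} {old_type} -> {new[column]}")
    # Columns that exist only in `new` are additive and therefore not reported.
    return problems


# Hypothetical contract agreed between producer and consumers.
contract_v1 = {"order_id": "int", "amount": "decimal", "created_at": "timestamp"}

# Hypothetical schema from a producer's pull request.
proposed = {"order_id": "string", "amount": "decimal", "shipped_at": "timestamp"}

problems = breaking_changes(contract_v1, proposed)
```

Here `problems` reports the changed type of `order_id` and the removal of `created_at`. A CI step could exit with a non-zero status whenever the list is not empty, which forces the producer to negotiate a new contract version instead of silently breaking consumers.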

#9 about 2 minutes

Building a company-wide data culture and literacy

Fostering a strong data culture through initiatives like data bootcamps helps all employees, including non-technical ones, understand the value of data and the importance of data quality.

#10 about 4 minutes

Modern data architectures and the reality of team size

Modern data architectures can range from simple setups using DuckDB to complex cloud platforms like Databricks, but it's crucial to remember that data teams are typically much smaller than software teams.
