Data Integrator

Job description

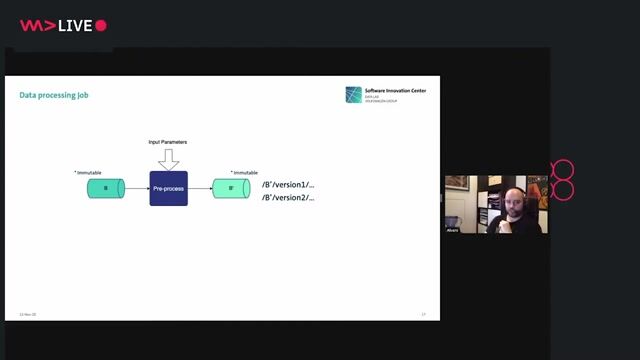

Based on the defined Data Architecture, the Data Integrator develops and documents ETL solutions, which translate complex business data into usable physical data models. These solutions support enterprise information management, business intelligence, machine learning, data science, and other business interests.

The Data Integrator uses ETL (Extract, Transform, Load), the process of extracting business data from source systems, transforming it, and loading it into a data warehousing environment. The Data Integrator also tests these processes for performance and troubleshoots them before they go live.

The Data Integrator develops the physical data models of the Data Vault, the Data Marts, the Operational Data Store (ODS), and the Data Lakes on the target platforms (SQL/NoSQL), designing them to improve efficiency and output.

Primary tasks and responsibilities

- Design, implement, maintain and extend physical data models and data pipelines
- Gather data integration requirements and process knowledge so that the integrated data meets end users' needs
- Ensure that solutions are scalable, performant, correct, of high quality, maintainable and secure
- Propose standards, tools, and best practices
- Implement solutions according to these standards, tools, and best practices
- Maintain and improve existing processes
- Investigate potential issues, notify end users and propose appropriate solutions
- Prepare and maintain documentation

Requirements

- Strong knowledge of ETL tools and development (DataStage)
- Strong knowledge of database platforms (DB2 & Netezza)
- Strong knowledge of SQL databases (DDL)
- Strong knowledge of SQL (advanced querying, query optimization, creation of stored procedures)
- Relational databases (IBM DB2 LUW)
- Data warehouse appliances (Netezza)
- Atlassian Suite: Jira / Confluence / Bitbucket
- TWS (IBM Workload Scheduler)
- UNIX scripting (KSH / SH / Perl / Python)
- Knowledge of Agile principles

Nice to have

- Knowledge of data modeling principles and methods, including conceptual, logical and physical data models, Data Vault, and dimensional modeling
- Knowledge of testing principles (test scenarios, test use cases) and of testing in practice
- Knowledge of ITIL
- Knowledge of OLAP
- Knowledge of NoSQL databases
- Knowledge of Hadoop ecosystem components: HDFS, Spark, HBase, Hive, Sqoop
- Knowledge of Big Data
- Knowledge of Data Science / Machine Learning / Artificial Intelligence
- Knowledge of data reporting tools (Tableau)

Non-technical profile requirements

- Interpersonal and team collaboration skills
- Analytical and problem-solving skills
- Motivated
- Autonomous
- Able to work on simultaneous tasks with limited supervision
- Quick learner
- Able to communicate complex technical ideas, regardless of the audience's technical background
- Eager to learn and implement new and different techniques
- Able to understand business requirements
- Customer-satisfaction oriented
- Respects change management procedures and internal processes

Methodology/Certification requirements

- Master's degree in computer science, or equivalent experience
- Advanced coursework in technical systems, plus continued education in technical disciplines, is preferred

Language proficiencies

- Native Dutch or French speaker with a good knowledge of the other language
- Fluent in English