Álvaro Martín Lozano

Implementing continuous delivery in a data processing pipeline

#1 (about 4 minutes)

From research concepts to production-ready data products

The Volkswagen Data Lab shifted its focus from demonstrating proofs of concept to building and deploying production-ready data solutions for its clients.

#2 (about 7 minutes)

Core concepts of continuous delivery for data

Continuous delivery for data pipelines adapts standard CI/CD principles to a setting where data, not only code, is the deliverable. Each change progresses through version control, integration, and deployment stages.
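A minimal sketch of the integration and deployment stages when the deliverable is data, assuming that "integration" means running the job and validating its output, and that deployment is gated on those checks. The names `Candidate`, `integrate`, `deploy`, the toy `convert_to_kwh` job, and the checks are all hypothetical illustrations, not the pipeline described in the talk; `code_version` stands in for whatever identifier version control provides (for example a commit hash).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Record = Dict[str, float]
Check = Callable[[List[Record]], bool]


@dataclass
class Candidate:
    """A data output tied to the code revision that produced it."""
    code_version: str      # hypothetical: e.g. the commit hash from version control
    records: List[Record]
    passed_checks: bool


def integrate(code_version: str,
              job: Callable[[List[Record]], List[Record]],
              raw: List[Record],
              checks: List[Check]) -> Candidate:
    """Integration stage: run the job on its input and validate the output."""
    records = job(raw)
    return Candidate(code_version, records, all(check(records) for check in checks))


def deploy(candidate: Candidate, production: Dict[str, List[Record]]) -> None:
    """Deployment stage: publish only data that passed integration."""
    if not candidate.passed_checks:
        raise ValueError(f"refusing to deploy output of {candidate.code_version}: checks failed")
    production[candidate.code_version] = candidate.records


# Usage with a toy job and two data-quality checks (all hypothetical).
def convert_to_kwh(rows: List[Record]) -> List[Record]:
    return [{"energy_kwh": r["energy_wh"] / 1000} for r in rows]


checks: List[Check] = [
    lambda rows: len(rows) > 0,                            # output is not empty
    lambda rows: all(r["energy_kwh"] >= 0 for r in rows),  # no negative energy values
]

production: Dict[str, List[Record]] = {}
good = integrate("a1b2c3d", convert_to_kwh, [{"energy_wh": 1500.0}], checks)
deploy(good, production)
```

Keeping validation separate from publishing means a failing run never reaches consumers, which is the data-side equivalent of a red build blocking a release.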

#3 (about 11 minutes)

Implementing a pipeline with immutable, versioned data

The five-step pipeline treats data as immutable: each run creates a new versioned output instead of overwriting the previous one, which makes rollbacks simple and results reproducible.
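One way to picture immutable, versioned outputs is sketched below, assuming a local directory layout (`runs/<version>/data.json`) and a `CURRENT` pointer file that consumers read. The layout, the version format, and the function names `publish_run`, `promote`, and `read_current` are hypothetical and not taken from the talk; a real pipeline would more likely use object storage or a table format, but the idea is the same.

```python
import json
import os
import tempfile
import uuid
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, List


def publish_run(root: Path, records: List[Dict]) -> str:
    """Write one run's output to a new, never-reused versioned directory."""
    version = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S") + "-" + uuid.uuid4().hex[:8]
    run_dir = root / "runs" / version
    run_dir.mkdir(parents=True, exist_ok=False)  # fail instead of overwriting an existing version
    (run_dir / "data.json").write_text(json.dumps(records))
    return version


def promote(root: Path, version: str) -> None:
    """Point consumers at `version`; a rollback is simply promoting an older version."""
    if not (root / "runs" / version).is_dir():
        raise FileNotFoundError(version)
    tmp = root / "CURRENT.tmp"
    tmp.write_text(version)
    os.replace(tmp, root / "CURRENT")  # replace the pointer in a single rename


def read_current(root: Path) -> List[Dict]:
    """Read whichever version the pointer currently names."""
    version = (root / "CURRENT").read_text().strip()
    return json.loads((root / "runs" / version / "data.json").read_text())


# Usage (hypothetical records): publish two runs, then roll back to the first.
with tempfile.TemporaryDirectory() as tmp_dir:
    root = Path(tmp_dir)
    v1 = publish_run(root, [{"vehicle": "A", "km": 100}])
    promote(root, v1)
    v2 = publish_run(root, [{"vehicle": "A", "km": 120}])
    promote(root, v2)
    promote(root, v1)  # rollback: no data is rewritten, only the pointer moves
    assert read_current(root)[0]["km"] == 100
```

Because versions are never modified, re-running an analysis against `v1` later yields the same input it saw the first time, which is where the reproducibility comes from.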

#4 (about 6 minutes)

The challenge of orchestrating chained data jobs

Managing dependencies between jobs becomes complex when each job consumes versioned, immutable data inputs from upstream processes.
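To make the dependency problem concrete, here is a minimal in-memory sketch that runs chained jobs in dependency order and records exactly which upstream version each output consumed. It assumes a simple policy of pinning the latest upstream version available when a job starts; the talk's actual orchestration strategy is not described here, and `Output`, `run_pipeline`, and the `ingest`/`clean`/`report` jobs are hypothetical. It relies on the standard-library `graphlib.TopologicalSorter` (Python 3.9+).

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set, Tuple


@dataclass(frozen=True)
class Output:
    job: str
    version: int
    data: Tuple[int, ...]
    inputs: Tuple[Tuple[str, int], ...]  # (upstream job, upstream version) actually consumed


Job = Callable[[Dict[str, Tuple[int, ...]]], Tuple[int, ...]]


def run_pipeline(jobs: Dict[str, Job],
                 deps: Dict[str, Set[str]],
                 store: Dict[str, List[Output]]) -> None:
    """Run every job once, upstream first, appending a new immutable version per job."""
    for name in TopologicalSorter(deps).static_order():
        # Assumed policy: pin the latest upstream versions at the moment this job starts.
        upstream = {u: store[u][-1] for u in deps.get(name, set())}
        data = jobs[name]({u: out.data for u, out in upstream.items()})
        history = store.setdefault(name, [])
        history.append(Output(
            job=name,
            version=len(history) + 1,
            data=data,
            inputs=tuple(sorted((u, out.version) for u, out in upstream.items())),
        ))


# Usage: a three-step chain (hypothetical jobs), run twice.
jobs: Dict[str, Job] = {
    "ingest": lambda _: (3, 1, 2),
    "clean": lambda up: tuple(sorted(up["ingest"])),
    "report": lambda up: (sum(up["clean"]),),
}
deps: Dict[str, Set[str]] = {"ingest": set(), "clean": {"ingest"}, "report": {"clean"}}
store: Dict[str, List[Output]] = {}
run_pipeline(jobs, deps, store)
run_pipeline(jobs, deps, store)
```

The `inputs` field is the lineage that lets a downstream version be reproduced later; the hard part in practice is choosing which upstream version to consume when jobs run on different schedules or an upstream job is rolled back.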

#5 (about 5 minutes)

Pros and cons of the immutable data approach

While this method offers powerful benefits like reproducibility and instant rollbacks, it introduces challenges in orchestration complexity and increased storage costs.
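The storage side of this trade-off can be estimated with simple arithmetic. The sketch below assumes every run writes a full, independent snapshot (no data shared between versions) and adds an optional, hypothetical `keep_last` retention limit to show how bounding storage also bounds how far back you can roll back. The function name and the 50 GB daily figure are illustrative assumptions, not numbers from the talk.

```python
from typing import List, Optional, Tuple


def snapshot_storage(run_sizes_gb: List[float],
                     keep_last: Optional[int] = None) -> Tuple[float, int]:
    """Return (GB stored, number of versions still available for rollback).

    Assumes each run is a full immutable snapshot; keep_last=None retains every version.
    """
    if keep_last is not None and keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    kept = run_sizes_gb if keep_last is None else run_sizes_gb[-keep_last:]
    return sum(kept), len(kept)


# Usage: one hypothetical 50 GB snapshot per day for a year.
daily = [50.0] * 365
all_versions = snapshot_storage(daily)           # every version kept
last_week = snapshot_storage(daily, keep_last=7)  # only the last 7 versions kept
```

Under these assumptions, keeping every daily version costs 18,250 GB after a year, versus 50 GB for a single overwritten dataset that offers no rollback at all; a retention window sits between the two.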


Matching moments

00:36 MIN

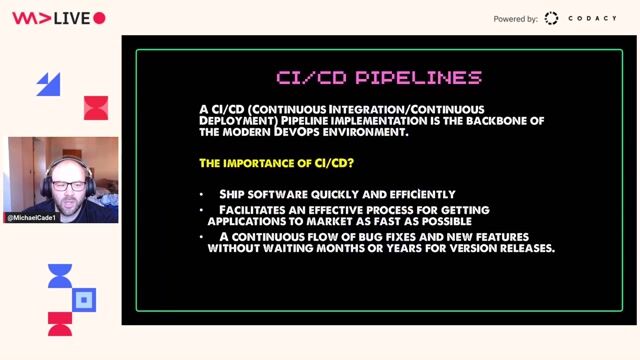

The distinct roles of CI and CD pipelines

#90DaysOfDevOps - The DevOps Learning Journey

06:04 MIN

Using continuous delivery to enable business agility

The Affordances of Quality

00:51 MIN

Defining continuous integration, delivery, and deployment

CI/CD with Github Actions

05:34 MIN

Tracing the evolution of DevOps from silos to superhighways

Navigating the AI Wave in DevOps

09:45 MIN

Implementing an AI-in-the-loop continuous learning cycle

A solution to embed container technologies into automotive environments

01:57 MIN

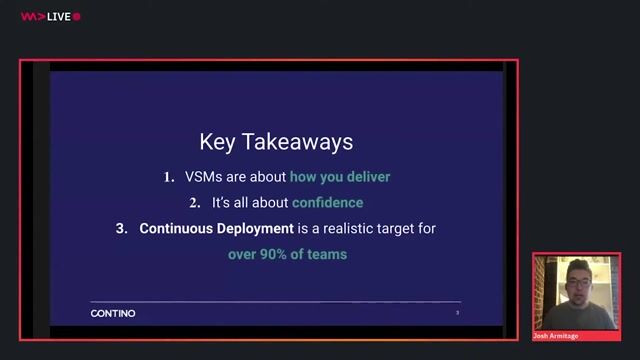

Core principles for achieving continuous deployment

Charting the Journey to Continuous Deployment with a Value Stream Map

03:49 MIN

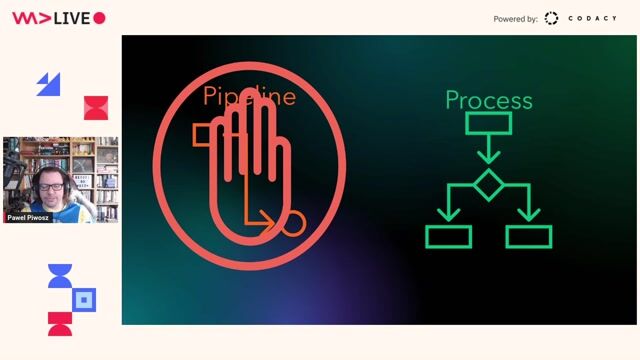

Moving beyond tools to architect CI/CD processes

Plan CI/CD on the Enterprise level!

03:31 MIN

Differentiating continuous delivery and continuous deployment

Plan CI/CD on the Enterprise level!

