SMTS Software Development Engineer

Job description

We are seeking a talented Machine Learning Kernel Developer to design, develop, and optimize low-level machine learning kernels for AMD GPUs using the ROCm software stack. In this role, you will work on high-impact projects to accelerate AI frameworks and libraries, with a focus on emerging technologies such as Large Language Models (LLMs) and other generative AI workloads.

THE PERSON:

The ideal candidate will have hands-on experience with GPU programming (ROCm or CUDA) and a passion for pushing the boundaries of AI performance.

KEY RESPONSIBILITIES:

- Design and implement highly optimized ML kernels (e.g., matrix operations, attention mechanisms) for AMD GPUs using ROCm.

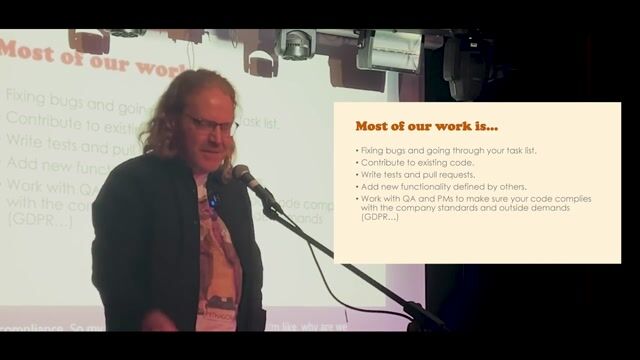

- Profile, debug, and tune kernel performance to maximize hardware utilization for AI workloads.

- Collaborate with ML researchers and framework developers to integrate kernels into AI frameworks (e.g., PyTorch, TensorFlow) and inference engines (e.g., vLLM, SGLang).

- Contribute to the ROCm software stack by identifying and resolving bottlenecks in libraries like MIOpen, BLAS, or Composable Kernel.

- Stay updated on the latest AI/ML trends (LLMs, quantization, distributed inference) and apply them to kernel development.

- Document and communicate technical designs, benchmarks, and best practices.

- Troubleshoot and resolve issues related to GPU compatibility, performance, and scalability.

Requirements

- 2+ years of experience in GPU kernel development for machine learning (ROCm or CUDA).

- Proficiency in C/C++ and Python, with experience in performance-critical programming.

- Strong understanding of ML frameworks (PyTorch, TensorFlow) and GPU-accelerated libraries.

- Basic knowledge of modern AI technologies (LLMs, transformers, inference optimization).

- Familiarity with parallel computing, memory optimization, and hardware architectures.

- Problem-solving skills and the ability to work in a fast-paced environment.

PREFERRED EXPERIENCE:

- Direct experience with AMD ROCm development (HIP, MIOpen, Composable Kernel).

- Knowledge of LLM-specific optimizations (e.g., FlashAttention, PagedAttention in vLLM).

- Experience with distributed training/inference or model compression techniques.

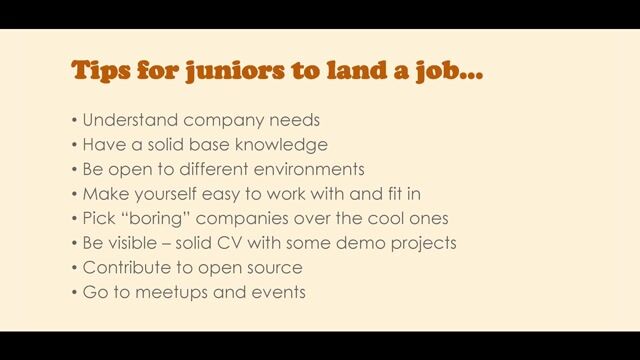

- Contributions to open-source ML projects or GPU compute libraries.

ACADEMIC CREDENTIALS:

- Bachelor's/Master's in Computer Science, Electrical Engineering, or related field.