AI Engineer

Job description

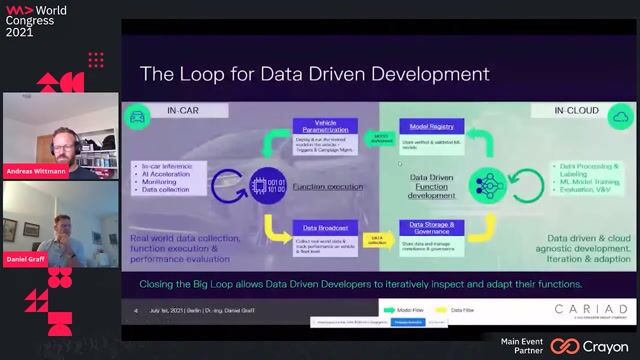

As an AI Engineer, you possess a strong engineering background with a passion for building and deploying intelligent, end-to-end AI systems. You have a comprehensive understanding of the entire AI development lifecycle, from data processing and model prototyping to production deployment and monitoring. You are fluent in modern AI concepts, including Generative AI, LLMs, and agentic workflows, and are familiar with the operational cycles of LLMOps/MLOps (e.g., CI/CD/CT loops).

You will spend most of your time embedded within the Data Science team, where you will play a key role in turning innovative models into robust, scalable products. You will also partner with multiple internal teams to build AI solutions that directly enhance data integration, analysis, reporting, and operational efficiency.

In this role, you will:

- Design, build, and deploy end-to-end AI solutions, including generative AI applications and intelligent agentic systems.

- Develop and maintain the infrastructure for building, training, and serving AI models at scale.

- Collaborate closely with Data Scientists and ML Engineers to productionize AI prototypes, ensuring they are performant, reliable, and scalable.

- Architect and implement complex AI workflows, orchestrating multiple agents for tasks involving advanced retrieval (RAG), code generation, and function calling.

- Own and manage the full AI lifecycle, implementing best practices for CI/CD, testing, model versioning, and monitoring (MLOps/LLMOps).

- Partner with Software and Data Engineering teams to ensure seamless data and runtime integration for AI services.

- Act as a key resource on AI system architecture, advising teams on best practices for building robust and scalable solutions from project inception.

- Identify, evaluate, and implement new technologies and tools to advance the capabilities and efficiency of our AI systems.

- Help define and standardize our frameworks for LLM evaluation, testing, and observability.

Requirements

We are looking for a motivated AI Engineer to join our Data Science team and help drive Cardo AI's rapid growth.

- LLM Application Development: Proven experience designing and building LLM-powered workflows, including structured outputs, tool/function calling, and agentic systems.

- RAG: Deep understanding of Retrieval-Augmented Generation techniques, including vector search (e.g., Elasticsearch), hybrid search, and advanced chunking strategies.

- Evaluation Mindset: A strong focus on quality and reliability, with experience designing task-level evaluations, implementing guardrails, and writing robust regression tests for prompts and agents.

Infrastructure & Operations (MLOps/AIOps)

- MLOps Principles: Strong knowledge of the CI/CD/CT framework and experience operationalizing AI models.

- Model Serving: Understanding of model serving paradigms (e.g., serverless scaling, multi-tenant serving) and hands-on experience with serving frameworks and platforms such as KServe, FastAPI, or AWS Lambda.

- Tooling: Familiarity with MLOps orchestration frameworks (e.g., Kubeflow), model tracking tools (e.g., MLflow), and monitoring solutions (e.g., Prometheus).

Cloud & Software Engineering

- Cloud Proficiency: Solid understanding of IaaS principles and networking in a major cloud environment (AWS, GCP, or Azure).

- Containerization & Orchestration: Deep knowledge of Docker and Kubernetes fundamentals (CKAD or equivalent knowledge).

- Infrastructure as Code (IaC): Experience with Terraform or equivalent tools.

- Software Engineering: Strong Python programming skills and a firm grasp of software design principles.

- GitOps: Good understanding of GitOps principles and technologies (e.g., Argo CD, Git pipelines).

- Solid understanding of data engineering principles and experience with ETL processes in Apache Spark or SQL.

Nice to have

- Experience with deep learning frameworks such as PyTorch or Keras, particularly on the infrastructure side (e.g., GPU integration).

- Hands-on experience with Dockerized GPU deployment (CUDA) for both single- and multi-GPU setups.

- Familiarity with semi-structured data models (e.g., Elasticsearch).

- Professional experience in Fintech or credit, especially in private credit, banking, or insurance.

- Experience with advanced techniques like model fine-tuning or retrieval training (e.g., contrastive learning).

- Expertise with AWS services (e.g., Bedrock, SageMaker, EC2, S3, Glue, CloudWatch).

- Experience fine-tuning and deploying models from ecosystems like Hugging Face.