Apache Iceberg Developer - Remote Work

Job description

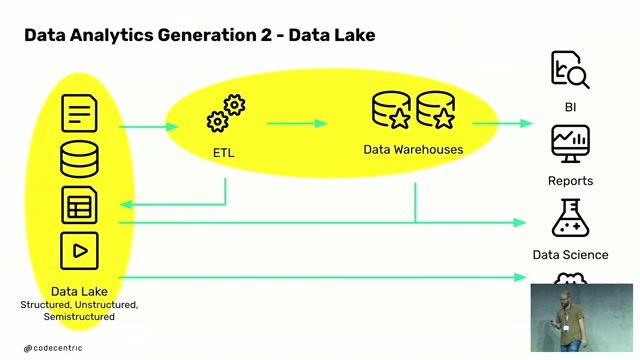

We are seeking an Apache Iceberg Developer to work on distributed data table formats and metadata management systems. You'll contribute to Apache Iceberg and related ecosystem projects (Spark, Flink, Hive), focusing on schema evolution, partitioning, and scalable table architecture.

What You'll Do:

-

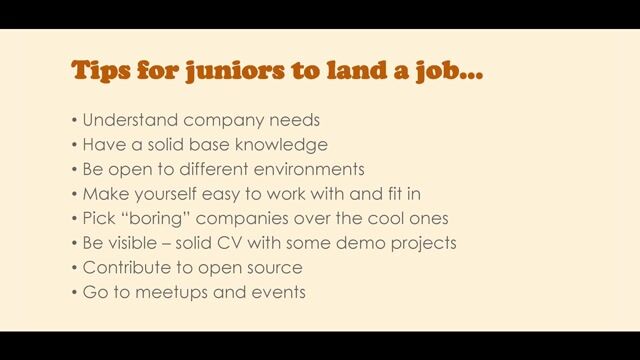

Contribute actively to Apache Iceberg and related open source projects as a core contributor.

-

Implement and optimize table formats, snapshots, partitioning, and metadata management.

-

Support integrations with processing engines like Spark, Flink, and Hive for batch and streaming workloads.

Requirements

- 10+ years of experience in software development.
- Strong expertise in distributed computing and large-scale data processing systems.
- Understanding of Apache Iceberg table architecture, including schema evolution, snapshots, partitioning, and incremental reads.
- Proficiency in Java (primary) and in Scala or Python for ecosystem integrations.
- Experience in performance tuning of metadata-heavy systems.
- Core contributions to Apache open source projects (Iceberg, Spark, Hive, Flink, or similar).
- Advanced English proficiency.

Benefits & conditions

- Excellent compensation in USD or, if preferred, in your local currency.
- Paid parental leave, vacation, and national holidays.
- Innovative and multicultural work environment.
- Collaborate with and learn from the top 1% of global talent in each area.
- Supportive environment with mentorship, promotions, skill development, and diverse growth opportunities.

Join a global team where your unique talents can truly thrive and make a significant impact!