Senior Data Scientist - LLM Expert

Job description

As a Senior Data Scientist specialized in Large Language Models at Kyndryl's AI Innovation Hub, you'll help shape the frontier of intelligent and agentic systems - turning cutting-edge research into real-world impact for some of the world's most forward-thinking enterprises. You'll work with state-of-the-art technologies, foundation models, and emerging agentic frameworks to design, fine-tune, and deploy powerful LLM-based solutions that understand, reason, and generate language with purpose. In this role, you'll collaborate closely with architects, ML engineers, and product teams to transform ideas into production-ready systems that redefine how organizations interact with knowledge and automation. You'll operate in an environment that values experimentation, precision, and creativity - where you'll be encouraged to explore the limits of generative AI, drive technical excellence, and contribute to solutions used by top global clients. If you're passionate about transforming language models into intelligent agents that can think, act, and assist - this is where your work will make a lasting mark.

Your Mission

- Design, train, fine-tune, and evaluate large language models and their distilled or specialized variants (SLMs).

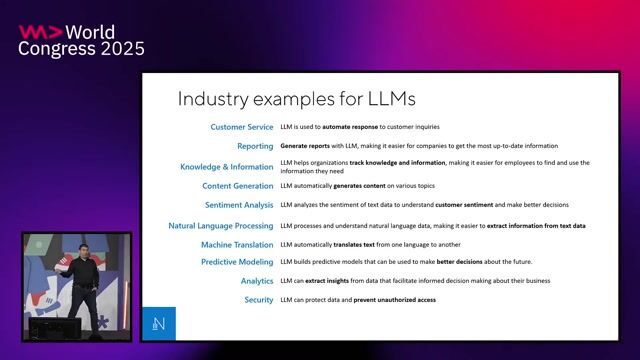

- Develop and integrate Generative AI and Agentic AI solutions that combine reasoning, retrieval, and language understanding.

- Implement and optimize RAG (Retrieval-Augmented Generation) pipelines, ensuring precision, traceability, and scalability.

- Collaborate with architects and ML engineers to bring LLM-based solutions into production environments.

- Define and apply robust evaluation frameworks and metrics for alignment, safety, hallucination control, and factual consistency.

- Contribute to the Hub's innovation roadmap by exploring new models, architectures, and methods for fine-tuning and instruction learning.

- Advise on model optimization, including quantization, distillation, and memory-efficient inference.

- Promote best practices in documentation, traceability, and Responsible AI across all LLM-based initiatives.

Who You Are

Requirements

- 4+ years of experience in AI model development, with at least 2 years focused on LLMs or advanced NLP systems.

- Proven expertise in fine-tuning, instruction tuning, and evaluation of foundation models (GPT, Llama, Claude, Mistral, Gemma, etc.).

- Hands-on experience developing RAG pipelines and LLM-based reasoning architectures.

- Solid understanding of Python and major libraries for LLM and AI development (Transformers, LangChain, LlamaIndex, PEFT, BitsAndBytes, LoRA, Accelerate).

- Experience training or serving models on GPU/TPU environments (PyTorch, DeepSpeed, vLLM, Ollama).

- Strong background in vector databases and embedding models.

- Familiarity with multi-agent frameworks and orchestration systems (CrewAI, AutoGen, LangGraph, Google ADK).

- Knowledge of Responsible AI and evaluation principles - safety, bias mitigation, factual alignment, and model interpretability.

- Experience deploying models in MLOps environments (MLflow, Vertex AI, Azure ML, OpenShift AI).

- Bachelor's or Master's degree in Computer Engineering, Data Science, Mathematics, Computational Linguistics, or a related field.

- Postgraduate studies (Master's or PhD) in Natural Language Processing (NLP), Deep Learning, or Artificial Intelligence are highly valued.

- Additional training in Generative AI, Prompt Engineering, or Responsible AI is a plus.

- Demonstrated commitment to continuous learning and staying current with the latest research in LLMs and generative AI.

Preferred Skills

- Expertise in prompt optimization, context management, and hallucination detection/mitigation.

- Understanding of LLMOps practices and observability tools for model monitoring and evaluation.

- Experience with distillation, quantization, and efficient inference strategies for scaling LLMs in production.

- Familiarity with semantic search, retrieval frameworks, and knowledge-grounded generation.

- Background in reinforcement learning from human feedback (RLHF) or preference optimization.

- Ability to analyze research papers, prototype rapidly, and transfer innovation into production-ready systems.

- Comfort working with hybrid architectures, integrating models across cloud and on-premise environments.

Soft Skills

- Clear and articulate communicator, able to explain complex model behavior and design choices to diverse audiences.

- Analytical and experimental mindset, combining scientific rigor with creativity and problem-solving agility.

- Collaborative and cross-functional approach, working closely with architects, engineers, and designers to deliver cohesive AI solutions.

- Curiosity and innovation-driven attitude, always exploring new models, datasets, and emerging techniques in the LLM space.

- Business awareness, able to translate functional requirements into measurable AI outcomes.

- Commitment to quality and reproducibility, ensuring experiments, metrics, and models are well-documented and traceable.