Generate erroneous reasoning to enhance explainable AI (M/F)

Job description

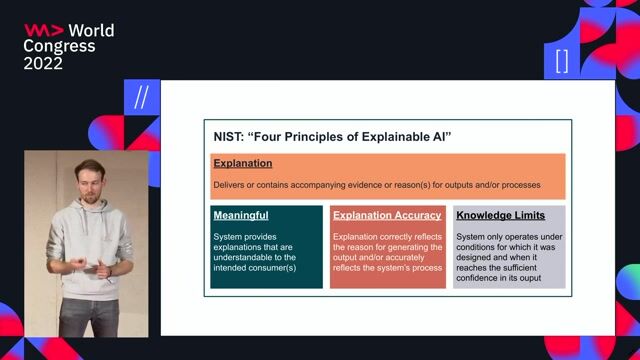

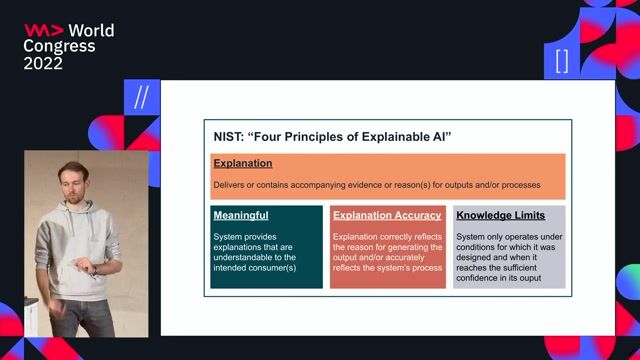

This internship aims to improve human-AI collaboration, where accuracy alone is not enough: trust requires that the user understand the AI's reasoning. Explainable AI (XAI) focuses on generating explanations that are clear, faithful, concise, and adapted to the user, since a poor explanation can harm cooperation. The work concerns ExpressIF®, a symbolic, fuzzy-logic AI system from CEA. Although the system is interpretable, selecting the rules most relevant to a given user can be difficult.

The project addresses human-AI disagreement: to adapt its explanations, the AI must detect possible errors in the human's reasoning (lack of knowledge, confirmation or attention bias, illusory correlation, or differences in uncertainty). The goals are to study fuzzy logic, ExpressIF®, and cognitive biases; to design a model of user reasoning errors; and to integrate and evaluate that model within ExpressIF®.

Contract duration (in months)

Requirements

We are looking for a second-year Master's student or third-year engineering school student specializing in Computer Science and/or Mathematics, with the following profile:

- A strong interest in artificial intelligence topics, particularly fuzzy logic;

- A strong interest in scientific and technological research;

- A genuine interest in cognitive sciences;

- Solid object-oriented programming skills, ideally in C#;

- Strong English skills.

Degree being prepared

Bac+5 - Master 2

Possibility of continuing to a PhD