Senior GCP Scala Data Engineer - part-time

Job description

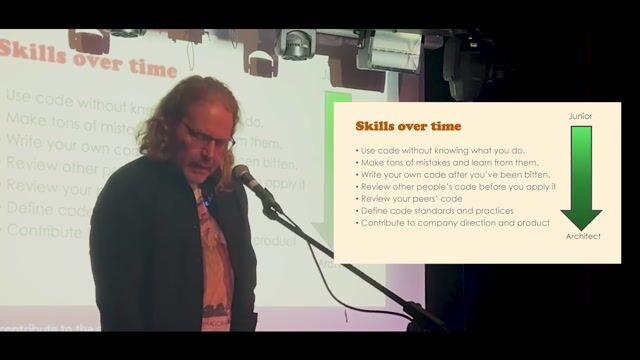

We are looking for a Senior Data Engineer to help our teams build the best possible data solutions in line with company standards. You inspire your team to improve the quality and performance of existing and new solutions. In addition, you discuss requirements with your stakeholders, explain possible solutions to them, and help them understand the technical problems you face. You coach your junior teammates on a technical level. You are always looking for opportunities to improve the quality of your solutions or to try out new technologies. Besides all of this you have

Requirements

- Preferably a bachelor's degree or higher in Computer Science, Software Engineering, or another relevant field (less important in light of work experience)
- At least 4 years of experience building production-grade data processing systems as a Data Engineer using cloud technology (GCP is preferred)

- In-depth knowledge of:
  - Basic understanding of the Hadoop ecosystem (good to have).
  - Building applications with Apache Spark on GCP.
  - Experience with event streaming platforms like Kafka and Kafka Connect.
  - Proficiency in using Google Cloud Storage for storing and managing data.
  - Experience with Google BigQuery for data warehousing and analytical querying.
  - Knowledge of Google Dataform and dbt (data build tool) for data transformation and modelling.
  - Familiarity with Google Cloud Pub/Sub for real-time messaging and event-driven systems.
  - Experience with Google Dataproc Serverless for running Apache Spark jobs.
  - Understanding of Google Cloud Scheduler and Google Workflows for orchestrating and automating tasks.
  - Proficiency in using Google Cloud Functions for serverless computing.
  - Familiarity with Google Cloud SQL and Google Bigtable for managed database services (nice to have).
  - Knowledge of IAM (Identity and Access Management) for securing data and managing permissions.
  - Experience with Google Kubernetes Engine (GKE) for container orchestration and deployment.
  - Experience with Terraform for infrastructure as code on GCP from a development perspective.
  - Hands-on expertise in designing and implementing batch and near real-time data processing solutions on GCP.
  - Hands-on expertise in working with code repositories on GitHub as a data engineer.
  - Hands-on expertise with unit testing of data applications.
  - Hands-on expertise working with REST APIs for data extraction and transformation using data engineering frameworks.

- Experience with Scala and at least one more server-side programming language, e.g. Python or Java
- Understanding of common algorithms and data structures
- Experience with databases and SQL
- Knowledge of continuous integration/continuous deployment techniques