Senior Solutions Architect, HPC and AI

Job description

- Collaborating with NVIDIA's training framework developers and product teams to stay ahead of the latest features and help partners adopt them effectively.

- Assisting with the deployment, debugging, and efficiency optimization of AI workloads on large-scale NVIDIA platforms.

- Benchmarking new framework features, analyzing performance, and sharing actionable insights with both customers and internal teams.

- Working directly with external customers to solve cluster performance and stability issues, identify bottlenecks, and implement effective solutions.

- Building expertise and guiding customers in scaling workloads efficiently and reliably on the latest generation of NVIDIA GPUs.

- Contributing to Europe's Sovereign AI initiative by helping customers implement advanced resiliency features within AI training pipelines.

Requirements

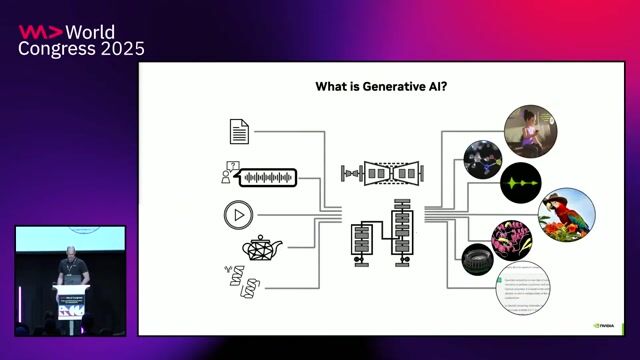

We are seeking a Senior Solutions Architect with strong hands-on experience in deploying, debugging, and optimizing training and inference workloads on large-scale GPU clusters. As we support customers and partners across Europe in training models on groundbreaking GPU infrastructure, we are looking for someone who enjoys solving complex challenges at the intersection of High Performance Computing and AI. Inference, too, is growing in complexity: the explosion of mixture-of-experts (MoE) models and disaggregated execution is making inference a true HPC workload. You don't need expertise in every skill we mention, but we are especially interested in candidates who bring deep knowledge in at least a few of the key areas that enable large-scale AI workloads. If you can demonstrate hands-on experience, we would love to hear from you.

- BS, MS, or PhD in Computer Science, Electrical/Computer Engineering, Physics, Mathematics, or a related engineering field, or equivalent practical experience.

- 8+ years of experience in accelerated computing technologies at cluster scale, ideally including work with NVIDIA platforms.

- Strong programming skills in at least one of the following languages: C, C++, or Python.

- Practical experience identifying and resolving bottlenecks in large-scale training workloads or parallel applications.

- Hands-on experience profiling and debugging large parallel applications.

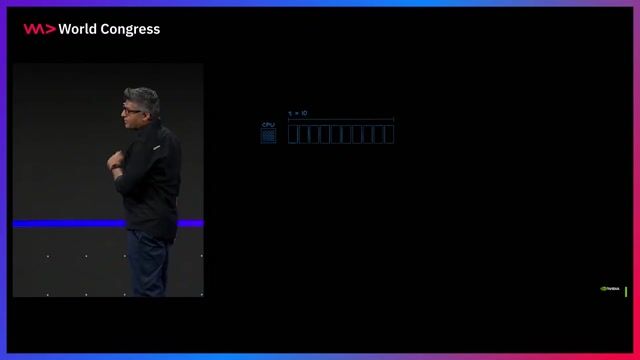

- Solid understanding of CPU and GPU architectures, CUDA, parallel filesystems, and high-speed interconnects.

- Experience working with large compute clusters and an understanding of their internal scheduling and resource management mechanisms (e.g., SLURM or cloud-based clusters).

- In-depth knowledge of training pipelines and frameworks, including their internal operation and performance characteristics.

Ways To Stand Out From The Crowd

- Experience debugging training pipelines running on thousands of GPUs in production environments.

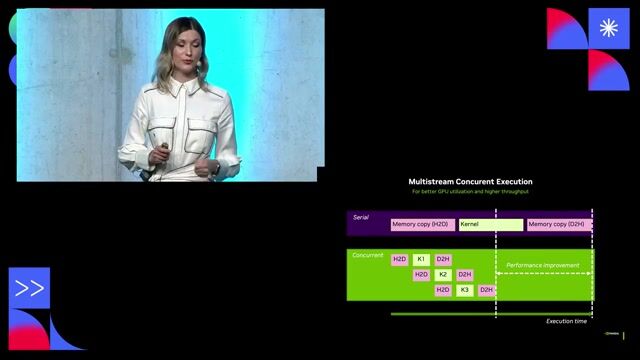

- Hands-on experience with performance profiling and optimization using tools such as Nsight Systems and Nsight Compute, and a good understanding of NCCL, MPI, and low-level communication libraries.

- Ability to debug stability issues across the entire stack: parallel application, training frameworks, runtime libraries, schedulers, and hardware.

- Solid understanding of the internal workings of LLM frameworks such as PyTorch, Megatron-LM, or NeMo and how they affect compute layers such as CPUs, GPUs, networking, and storage; or an understanding of inference tools such as vLLM, Dynamo, TensorRT-LLM, Red Hat Inference Server, or SGLang.