Stephen Jones

Coffee with Developers - Stephen Jones - NVIDIA

#1 · about 2 minutes

Gaining perspective by using the products you build

Transitioning from a creator to a user of CUDA provides critical insights and humility by revealing the incorrect assumptions made during development.

#2 · about 3 minutes

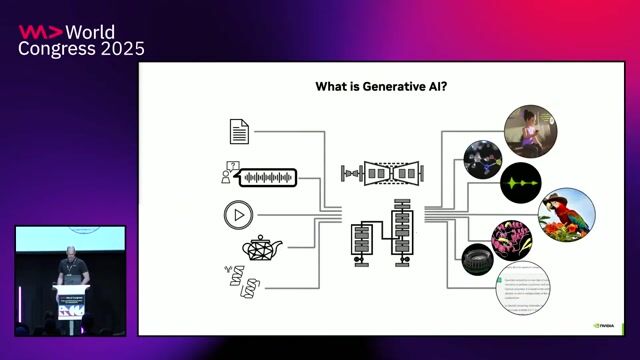

Understanding CUDA as a complete computing platform

CUDA has evolved from a low-level language into a comprehensive platform of compilers, libraries, and SDKs that enable GPU access for multiple languages.

#3 · about 2 minutes

Supporting legacy languages like Fortran for scientific computing

CUDA supports languages like Fortran to accelerate existing codebases in supercomputing for fields such as physics and weather forecasting.

#4 · about 4 minutes

Why Python became the dominant language for AI

Python's large ecosystem, developer productivity, and vast talent pool made it the de facto language for AI, creating new challenges for parallel computing platforms.

#5 · about 3 minutes

The challenge of aligning long hardware and short software cycles

Developing a new chip takes years of predictive work, which makes it hard to keep pace with rapidly changing software demands, especially in AI.

#6 · about 3 minutes

How unexpected user adoption drives technological evolution

Technology evolves organically as users find novel applications for existing tools, such as using gaming GPUs for scientific computing and AI.

#7 · about 3 minutes

Why AI optimizations increase the demand for compute

Advances that make AI models cheaper or more efficient don't reduce overall compute demand; instead, they enable the creation of even larger and more powerful models.

#8 · about 3 minutes

The end of Moore's Law is a power consumption problem

While transistor density still doubles, the power per transistor is not halving, creating a thermal and power delivery bottleneck for chip performance.
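The arithmetic behind this bottleneck can be sketched in a few lines. The snippet below is only an illustration, not a model from the talk: it assumes density doubles each generation, and the 0.7 scaling factor is a hypothetical figure chosen to show the trend.

```python
def relative_power_density(generations, power_scale_per_gen):
    """Relative power per unit area after each generation.

    Assumes transistor density doubles every generation and that power per
    transistor is multiplied by power_scale_per_gen each generation.
    """
    density = 1.0
    power_per_transistor = 1.0
    history = []
    for _ in range(generations):
        density *= 2.0
        power_per_transistor *= power_scale_per_gen
        history.append(density * power_per_transistor)
    return history


# Ideal case: power per transistor halves, so power per area stays flat.
ideal = relative_power_density(4, 0.5)

# Hypothetical case: power per transistor only drops by 30% per generation,
# so power per area grows by a factor of 1.4 each time.
stalled = relative_power_density(4, 0.7)

print(ideal)
print(stalled)
```

When the halving stops, each doubling of density packs more heat into the same area, which is why power delivery and cooling become the limit rather than transistor count.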

#9 · about 6 minutes

The future of computing requires scaling out to data centers

Overcoming power limitations requires moving from single-chip optimization to building large, networked, data-center-scale systems with specialized hardware.

#10 · about 4 minutes

The rise of neural and quantum computing paradigms

The future of computing will be a hybrid model combining classical, neural, and quantum approaches to solve complex problems using the best tool for each task.

#11 · about 3 minutes

How developers can contribute to the open source CUDA ecosystem

While the low-level drivers are proprietary, the vast majority of CUDA's higher-level libraries, such as RAPIDS and CUTLASS, are open source and welcome community contributions.

Matching moments

05:12 MIN

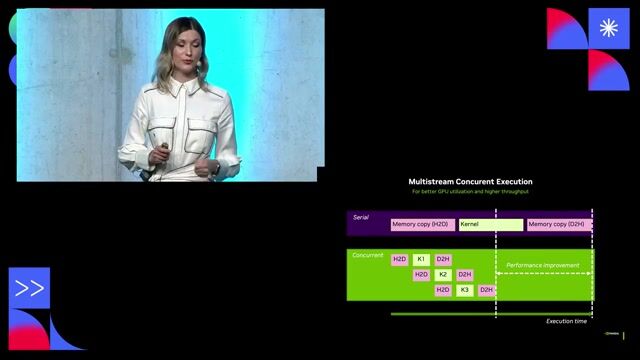

Boosting Python performance with the Nvidia CUDA ecosystem

The weekly developer show: Boosting Python with CUDA, CSS Updates & Navigating New Tech Stacks

06:37 MIN

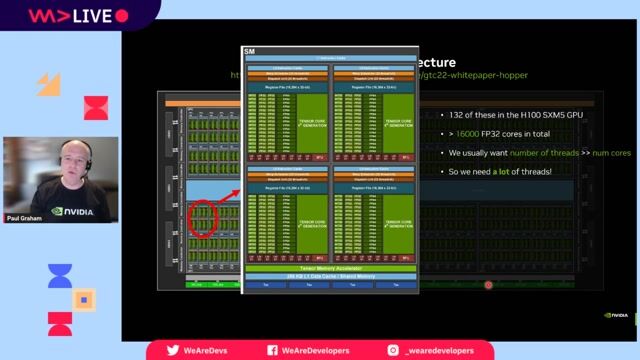

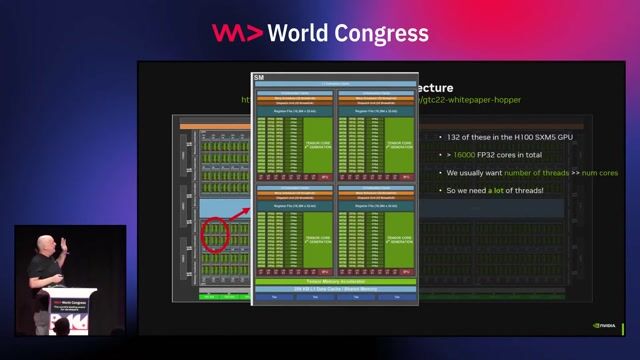

Introducing the CUDA parallel computing platform

Accelerating Python on GPUs

05:33 MIN

Understanding the CUDA platform stack for Python developers

CUDA in Python

02:21 MIN

How GPUs evolved from graphics to AI powerhouses

Accelerating Python on GPUs

01:04 MIN

NVIDIA's platform for the end-to-end AI workflow

Trends, Challenges and Best Practices for AI at the Edge

01:40 MIN

The rise of general-purpose GPU computing

Accelerating Python on GPUs

02:28 MIN

Navigating the CUDA Python software ecosystem

Accelerating Python on GPUs

01:49 MIN

Understanding the CUDA software ecosystem stack

Accelerating Python on GPUs
