Principal Data Architect

Job description

As a Principal Data Architect, you will play a pivotal role in designing and implementing modern, scalable data solutions for our clients. Working closely with colleagues across the Data & Analytics Engineering teams, you will help architect, build, and optimize new data platforms or migrate existing solutions to Google Cloud.

This is an exciting opportunity for a highly experienced data professional who is passionate about leveraging cloud technologies to drive innovation and efficiency. You will consult with our clients to understand their business needs and objectives, gather requirements, and define and deliver robust, high-performance data architectures. If you thrive in a fast-paced, technology-driven consulting environment and are eager to make a tangible impact on transformative projects, this role is for you.

What You'll Do

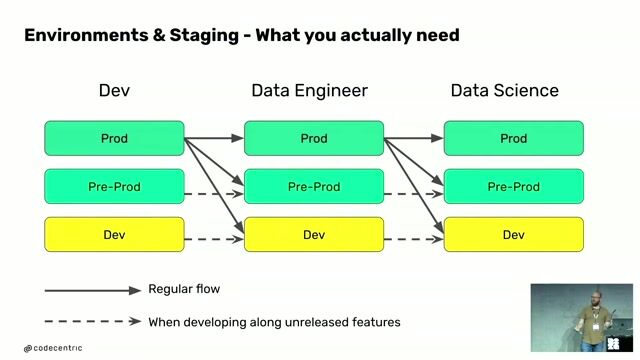

- Design & Deliver Cutting-Edge Data Solutions: Lead the analysis, design, and execution of state-of-the-art, data-driven solutions that meet our clients' business needs, leveraging the best of Google Cloud technologies.

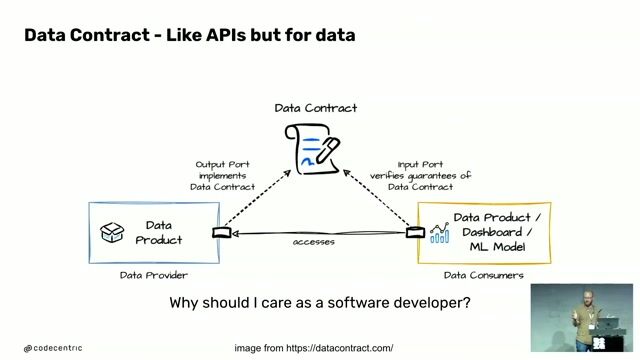

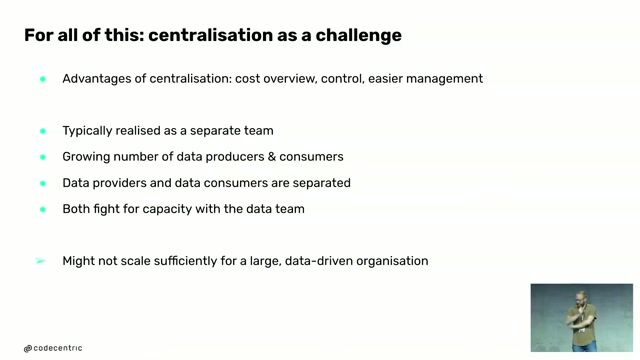

- Data Architecture & Governance: Serve as an expert in data transformation, storage, retrieval, security, and governance, ensuring scalable, secure, and efficient data solutions.

- Guide & Mentor Engineers: Provide architectural direction to engineers, ensuring they build robust, high-performance solutions aligned with your target data architecture.

- Master Data Modeling Techniques: Apply expertise in various data modeling approaches, including 3NF, Data Vault, Star Schema, and One Big Table (OBT). Clearly articulate the benefits and trade-offs of each method and optimize their implementation within columnar databases such as BigQuery.

- Shape Data Strategy: Collaborate with the client to define and refine data strategy, covering:

  - Data governance and compliance

  - Scalable and efficient data modeling techniques

  - Ensuring data quality and integrity

  - Data management, security, and privacy best practices

  - Establishing optimal workflows and operational efficiencies

- Develop Fully Integrated Solutions: Work alongside Architecture, Engineering, and Data Science teams to design comprehensive, production-ready solutions that incorporate:

  - Cloud best practices

  - Scalable and efficient ingestion strategies

  - Feature engineering methodologies

  - End-to-end production readiness

- Leverage Leading Technologies: Design and implement solutions using key partner technologies, including:

  - Google Cloud - BigQuery, Dataflow, Vertex AI, and more

  - dbt Labs - Modern analytics engineering and transformation

  - Snowflake - Cloud-native data warehousing

  - Fivetran - Automated data pipelines for seamless integration

Requirements

- Data Architecture: Proven experience designing and building data warehouse or lakehouse solutions using technologies such as BigQuery, Azure Synapse, Snowflake, or Databricks

- Data Modeling: Strong expertise in data modeling and solution architecture, optimizing for performance and scalability

- Data Governance: Experience building data platforms with built-in data quality, security, privacy, and governance controls

- Ownership Mindset: Ability to take projects from concept to completion, driving creative and effective solutions

- Analytical & Technical Excellence: Demonstrated problem-solving skills with a strong technical foundation and an innovative approach

- Communication & Presentation: Exceptional written and verbal communication skills, great attention to detail, and the ability to present complex concepts clearly to customers

- Stakeholder Management: Ability to build and maintain strong relationships with key external stakeholders across different business levels

- Programming Proficiency: Hands-on experience with Python, Java, and SQL for data engineering and solution development