Data Engineer

Job description

Our team is at the forefront of applying Machine Learning (ML) to interpret and process complex chemical data, specifically chromatograms and environmental testing results. We're seeking a skilled Data Engineer to design, build, and maintain the robust data pipelines and infrastructure that support our ML model training, deployment, and analysis workflows. The ideal candidate has a strong foundation in data engineering, understands the unique challenges of scientific data, and is eager to work closely with both Machine Learning Engineers (MLEs) and chemists.

- Data Pipeline Development: Design, construct, and manage scalable and reliable ETL/ELT pipelines to ingest, clean, transform, and store raw chemistry data (e.g., CSV, JSON, and proprietary instrument formats).

- Data Modeling & Warehousing: Develop optimized data models and manage a data warehouse (or data lake) to support fast querying and ML feature engineering on complex datasets, including time-series and spectral data from chromatograms.

- ML Infrastructure: Collaborate with MLEs to containerize and deploy ML models and to build automated model retraining and monitoring pipelines.

- Data Quality & Governance: Implement robust data quality checks, validation, and monitoring to ensure the integrity and reproducibility of chemical experiment data used for ML.

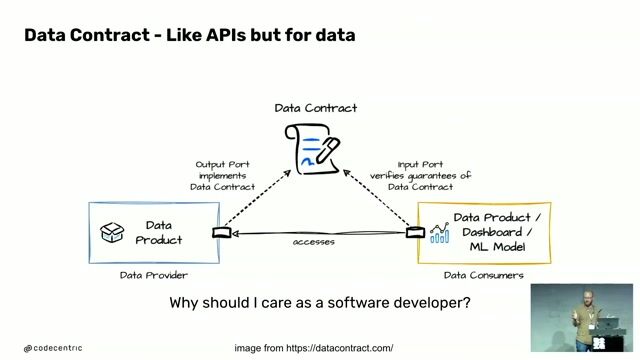

- Tooling: Develop internal tools and APIs that give MLEs easy access to data and provide standardized interfaces for data submission from chemistry lab systems.

Requirements

- Education: Bachelor's or Master's degree in Computer Science, Data Engineering, or a related technical field (or equivalent practical experience).

- Programming: Expert-level proficiency in Python, including libraries such as Pandas and NumPy, and familiarity with other data engineering libraries.

- Cloud & Infrastructure: Hands-on experience with at least one major cloud provider (AWS, Azure, or GCP; Azure preferred), including its compute, storage, and serverless services (e.g., Azure Data Lake Storage (ADLS), Azure Virtual Machines, Azure Functions).

- Data Orchestration: Proven experience building and managing data workflows using an orchestration tool like Apache Airflow, Prefect, or Dagster.

- Databases: Strong knowledge of SQL and experience working with both relational (e.g., PostgreSQL) and NoSQL databases.

- Version Control: Proficiency with Git and standard DevOps practices.

- Scientific Data Experience: Prior experience handling and processing complex, large-volume scientific data (e.g., mass spectrometry, chromatography, LIMS/ELN integration).

- MLOps: Familiarity with MLOps platforms and tools such as Azure ML Studio, MLflow, Kubeflow, or SageMaker.

- Chemistry Domain: Basic understanding of analytical chemistry concepts, such as chromatography fundamentals (e.g., retention time, peak integration) and relevant file formats.

- Authorization to work in the United States without restriction or sponsorship.

- Professional working proficiency in English, including the ability to read, write, and speak it.

Required General Skills

- Problem-Solving: Strong analytical and problem-solving skills with a focus on delivering high-quality, reproducible data solutions.

- Communication: Excellent verbal and written communication skills, with the ability to bridge the gap between technical infrastructure, data science models, and chemical applications.

Benefits & conditions

As a Eurofins employee, you will become part of a company that has received national recognition as a great place to work. We offer excellent full-time benefits including comprehensive medical coverage, life and disability insurance, 401(k) with company match, paid holidays, paid time off, and dental and vision options.