Platform / DevOps Engineer (Hybrid)

Job description

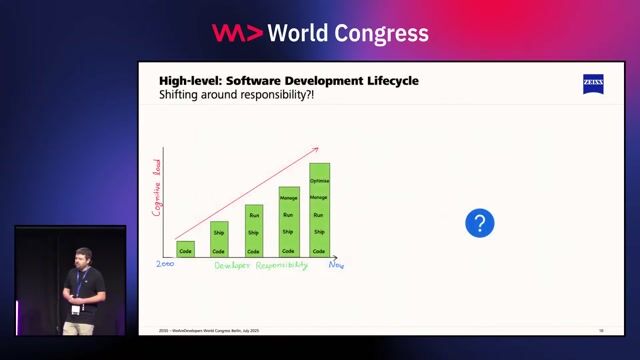

high-impact team responsible for creating the platform of the future - from greenfield infrastructure design to continuous improvement and automation at scale. This role demands deep expertise in Terraform, Kubernetes, and GitOps (ArgoCD or Flux), with a strong bias for action, performance, and reliability. If you love building cloud-native systems from scratch, iterating fast, and enabling engineering teams to move at high velocity while maintaining world-class security and compliance - this is your playground.

Key Responsibilities

* Architect, build, and maintain scalable cloud infrastructure using Terraform across GCP (preferred) and multi-cloud environments (AWS, Azure).
* Design, deploy, and manage Kubernetes clusters for production-grade workloads, ensuring resilience, security, and observability.
* Implement GitOps workflows using ArgoCD or Flux, enabling fully automated, declarative deployments.
* Develop and manage CI/CD pipelines to support continuous delivery of microservices, data pipelines, and AI workloads.
* Collaborate closely with data, AI, and backend teams to design secure, performant environments for experimentation and deployment.
* Automate infrastructure operations, monitoring, and scaling through tools such as Terraform Cloud, Helm, and service meshes (e.g., Istio).
* Continuously improve platform performance, security, and developer experience through innovation and feedback loops.
* Support security, compliance, and auditability across environments - aligned with SOC 2, ISO 27001, HIPAA, GDPR, etc.
* Lead or contribute to internal platform documentation, standards, and best practices.

Requirements

Required Qualifications

* 5+ years of experience in DevOps, Platform Engineering, or Infrastructure roles.
* Proven expertise in Terraform (Infrastructure as Code) and modular, reusable architectures.
* Strong hands-on experience with Kubernetes (GKE preferred) - including cluster design, scaling, networking, service mesh, and observability.
* Practical experience with GitOps principles using ArgoCD or Flux for automated deployments.
* Solid understanding of CI/CD pipelines (GitLab, GitHub Actions, or similar).
* Familiarity with monitoring and logging stacks (Prometheus, Grafana, ELK/EFK, Cloud Logging).
* Strong scripting skills in Python, Bash, or Go.
* Experience managing cloud infrastructure in GCP, including IAM, VPC, CloudSQL, and Artifact Registry.
* Knowledge of container security, policy enforcement, and secret management (e.g., HashiCorp Vault, SOPS).
* Bachelor's or Master's degree in Computer Science, Engineering, or equivalent experience.

Preferred Qualifications

* Experience with multi-environment setups (dev, stage, prod) and blue/green or canary deployments.
* Exposure to service meshes (Istio) and messaging systems (NATS).
* Familiarity with Bitbucket Pipelines.
* Understanding of compliance and security automation in regulated environments (SOC 2, ISO 27001, GxP).
* Experience integrating with AI/ML pipelines and data platforms (Vertex AI, Data Fusion, BigQuery).
* Contributions to open-source or internal DevOps tooling projects.

Why Join Us?

* Be part of a greenfield platform initiative - build from the ground up, design the architecture, and define the standards.
* Work in a fast-paced, iterative environment where experimentation and continuous improvement are core values.
* Collaborate with brilliant engineers and scientists building the future of AI in healthcare.
* Comprehensive private health coverage to support your physical and mental well-being.
* Company-sponsored gym membership and wellness benefits.
* Hybrid work model offering flexibility to balance your personal and professional life.
* Coffee, tea, beverages, and snacks to keep you fueled throughout the day.
* Company events and retreats to celebrate milestones together.

Job Details

* Seniority level: Mid-Senior level
* Employment type