Data Engineer - Scala (Zurich, Switzerland)

Masento

Zürich, Switzerland

3 days ago

Role details

Contract type: Contract

Employment type: Full-time (> 32 hours)

Working hours: Regular working hours

Languages: English

Job location: Zürich, Switzerland

Tech stack

Airflow

Amazon Web Services (AWS)

Azure

Continuous Integration

Data Architecture

Information Engineering

ETL

DevOps

Distributed Data Store

DataOps

SQL Databases

Spark

Git

Containerization

Data Lake

Kubernetes

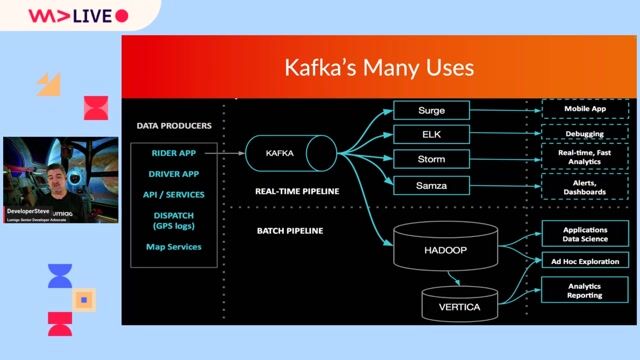

Kafka

Spark Streaming

Data Pipelines

Docker

Databricks

Job description

Location: Zurich, Switzerland (Hybrid - 2-3 days onsite per week) - Please do not apply if you cannot commit to hybrid working in Zurich

Contract: 12 months, with a strong view to extension

Hours: Full time

Overview: Seeking an experienced Data Engineer with strong Scala expertise to design, build, and optimise scalable data pipelines within a modern cloud data environment. This hybrid role requires regular onsite collaboration in Zurich.

Key Responsibilities:

- Build and maintain scalable data pipelines using Scala and distributed data processing frameworks.

- Develop and optimise ETL/ELT workflows across diverse datasets.

- Collaborate with data scientists, analysts, and engineering teams on data product delivery.

- Ensure data quality, governance, and reliability across the data life cycle.

- Contribute to cloud-based data architectures, including batch and streaming solutions.

- Troubleshoot and improve pipeline performance.

Required Skills:

- Strong hands-on experience with Scala for data engineering.

- Experience with distributed processing frameworks (e.g., Apache Spark with Scala).

- Solid programming fundamentals and strong SQL skills.

- Experience with data lake/lakehouse platforms (e.g., Databricks, Delta Lake).

- Familiarity with cloud platforms (Azure, AWS, or GCP).

- Experience with orchestration tools (Airflow, Databricks Jobs, Prefect, etc.).

- Good understanding of ETL/ELT, data modelling, and data architecture.

Nice to Have:

- Experience with streaming technologies (Kafka, Spark Structured Streaming, etc.).

- Knowledge of CI/CD, Git, and DevOps/DataOps practices.

- Exposure to ML pipelines or containerisation (Docker/Kubernetes).

- Experience in regulated industries.
