SC Cleared Databricks Data Engineer - Azure Cloud

Montash Limited

Liverpool, United Kingdom

3 days ago

Role details

Contract type: Temporary contract

Employment type: Full-time (> 32 hours)

Working hours: Regular working hours

Languages: English

Compensation: £104K

Job location: Liverpool, United Kingdom

Tech stack

Azure

Continuous Integration

ETL

Data Intelligence

Metadata

Power BI

Workflow Management Systems

Spark

Data Lake

PySpark

Data Lineage

Deployment Automation

Data Pipelines

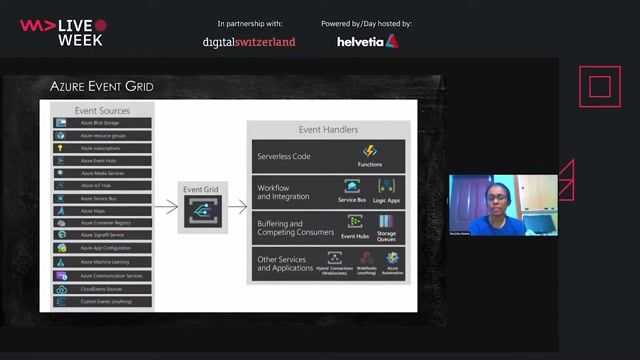

Serverless Computing

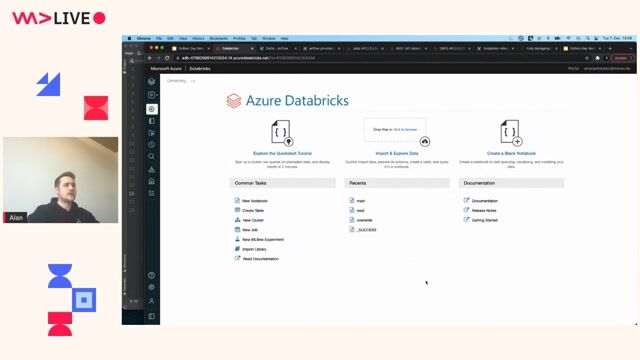

Databricks

Job description

- Build and orchestrate Databricks data pipelines using Notebooks, Jobs, and Workflows

- Optimise Spark and Delta Lake workloads through cluster tuning, adaptive execution, scaling, and caching

- Conduct performance benchmarking and cost optimisation across workloads

- Implement data quality, lineage, and governance practices aligned with Unity Catalog

- Develop PySpark-based ETL and transformation logic using modular, reusable coding standards

- Create and manage Delta Lake tables with ACID compliance, schema evolution, and time travel

- Integrate Databricks assets with Azure Data Lake Storage, Key Vault, and Azure Functions

- Collaborate with cloud architects, data analysts, and engineering teams on end-to-end workflow design

- Support automated deployment of Databricks artefacts via CI/CD pipelines

- Maintain clear technical documentation covering architecture, performance, and governance configuration

Requirements

- Strong experience with the Databricks Data Intelligence Platform

- Hands-on experience with Databricks Jobs and Workflows

- Deep PySpark expertise, including schema management and optimisation

- Strong understanding of Delta Lake architecture and incremental design principles

- Proven Spark performance engineering and cluster tuning capabilities

- Unity Catalog experience (data lineage, access policies, metadata governance)

- Azure experience across ADLS Gen2, Key Vault, and serverless components

- Familiarity with CI/CD deployment for Databricks

- Solid troubleshooting skills in distributed environments

- Experience working across multiple Databricks workspaces and governed catalogs

- Knowledge of Synapse, Power BI, or related Azure analytics services

- Understanding of cost optimisation for data compute workloads

- Strong communication and cross-functional collaboration skills