Fili Wiese

Command the Bots: Mastering robots.txt for Generative AI and Search Marketing

#1 about 2 minutes

Why robots.txt is crucial for managing AI and search bots

The robots.txt file is a foundational tool for controlling how AI and search engine crawlers access your website's content, and mistakes in it can cause financial loss.

#2 about 2 minutes

The history and formalization of the robots.txt protocol

The robots exclusion protocol began as a de facto standard in 1994. Google proposed it to the IETF in 2019, and it was published as RFC 9309 in 2022, standardizing its use across the web.

#3 about 3 minutes

What robots.txt can and cannot do for your website

The file controls crawling but not indexing. It offers no legal protection against scraping and no security for private files, and bots that ignore it have to be stopped by other means, such as IP blocking.

#4 about 3 minutes

Correctly placing the robots.txt file on every origin

A robots.txt file must be placed at the root of each unique origin, which includes different schemes (HTTP/HTTPS), subdomains, and port numbers.
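
As a minimal sketch of the origin rule, the helper below derives the robots.txt location that governs a given page URL. The function name `robots_txt_url` and the example.com URLs are illustrative assumptions, not part of the talk.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the origin that serves page_url."""
    parts = urlsplit(page_url)
    # Scheme, host, and port together define the origin.
    # Path, query, and fragment are dropped and replaced by /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical URLs: four different origins, so four separate files.
urls = [
    "http://example.com/shop?id=1",
    "https://example.com/shop?id=1",
    "https://blog.example.com/post",
    "https://example.com:8443/admin",
]
for url in urls:
    print(robots_txt_url(url))
```

Each of the four URLs maps to a different robots.txt location, which is why a file on one origin says nothing about the others.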

#5 about 4 minutes

How server responses and HTTP status codes affect crawling

A 4xx response for the robots.txt path is treated as 'allow all' for crawling, while a 5xx error on that path is treated as 'disallow all' and can eventually de-index your site.
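
The status-code rule can be sketched as a small decision function. This is a simplification under the assumptions stated in the summary above: the name `crawl_policy` and its return labels are hypothetical, and real crawlers handle more cases (redirects, rate limiting, cached copies) than shown here.

```python
def crawl_policy(status: int) -> str:
    """Map the HTTP status of a robots.txt request to a crawl decision.

    Simplified sketch of the behavior described in the talk summary.
    """
    if 200 <= status < 300:
        return "parse"         # file is available: read and obey its rules
    if 400 <= status < 500:
        return "allow_all"     # treated as if no robots.txt exists
    if 500 <= status < 600:
        return "disallow_all"  # server error: crawler assumes nothing may be fetched
    return "other"             # e.g. redirects, not covered by this sketch


for code in (200, 404, 503):
    print(code, crawl_policy(code))
```

The practical consequence is that a misconfigured server returning 5xx for /robots.txt is more dangerous than a missing file.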

#6 about 3 minutes

File format specifications and caching behavior

Bots may cache robots.txt for up to 24 hours, are only guaranteed to read the first 500 kibibytes (KiB), and expect UTF-8 encoding without a byte order mark (BOM).
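
A rough pre-deployment check for these format constraints might look like the sketch below. The function name `check_robots_bytes` is hypothetical, and the default size limit of 500 KiB is an assumption taken from RFC 9309's minimum parsing limit; adjust it if you target a crawler with a different limit.

```python
import codecs

# Assumption: 500 KiB, the minimum parsing limit named in RFC 9309.
DEFAULT_MAX_BYTES = 500 * 1024


def check_robots_bytes(raw: bytes, max_bytes: int = DEFAULT_MAX_BYTES) -> list[str]:
    """Return a list of format problems found in the raw robots.txt bytes."""
    problems = []
    if raw.startswith(codecs.BOM_UTF8):
        problems.append("starts with a UTF-8 byte order mark")
    try:
        raw.decode("utf-8")
    except UnicodeDecodeError:
        problems.append("is not valid UTF-8")
    if len(raw) > max_bytes:
        problems.append(f"is {len(raw)} bytes, rules past {max_bytes} bytes may be ignored")
    return problems


print(check_robots_bytes(b"User-agent: *\nDisallow: /tmp/\n"))
print(check_robots_bytes(codecs.BOM_UTF8 + b"User-agent: *\n"))
```

An empty list means none of the three checks fired; it does not validate the rules themselves.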

#7 about 3 minutes

Common syntax mistakes and group rule validation

Paths in allow and disallow rules must start with a slash, query parameters require careful wildcard use, and sitemap lines belong outside user-agent groups because they apply globally.
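
The leading-slash mistake is easy to catch mechanically. The sketch below is a deliberately narrow linter, assuming the hypothetical name `lint_rules` and a made-up sample file; it checks only that non-empty allow and disallow values start with a slash and does not validate anything else.

```python
def lint_rules(text: str) -> list[str]:
    """Flag allow/disallow values that do not start with '/'."""
    warnings = []
    for number, line in enumerate(text.splitlines(), start=1):
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        # An empty value is valid ("Disallow:" allows everything), so skip it.
        if field.lower() in ("allow", "disallow") and value and not value.startswith("/"):
            warnings.append(f"line {number}: path {value!r} does not start with '/'")
    return warnings


# Hypothetical file with one mistake on line 2.
sample = "\n".join([
    "User-agent: *",
    "Disallow: private/",
    "Disallow: /tmp/",
    "Allow: /tmp/public/",
    "",
    "Sitemap: https://example.com/sitemap.xml",
])
print(lint_rules(sample))
```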

#8 about 3 minutes

Optimizing crawl budget and managing affiliate links

Block affiliate tracking parameters in robots.txt to prevent search penalties, and disallow low-value URLs such as Cloudflare challenge pages to preserve your crawl budget for important content.
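
To see how wildcard patterns behave against query parameters, the sketch below translates robots.txt patterns (`*` for any characters, `$` for end of URL) into regular expressions. It is an illustration, not a full matcher: it ignores allow rules and longest-match precedence, the helper names are hypothetical, the parameter name `aff_id` is made up, and `/cdn-cgi/` is assumed here to be the path prefix under which Cloudflare serves its challenge endpoints.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and join them with '.*' where '*' appeared.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_blocked(path_and_query: str, disallow_patterns: list[str]) -> bool:
    """True if any disallow pattern matches from the start of the URL path."""
    return any(pattern_to_regex(p).match(path_and_query) for p in disallow_patterns)


# Hypothetical rules: an affiliate parameter anywhere in the query string,
# plus the assumed Cloudflare challenge prefix.
patterns = ["/*?*aff_id=", "/cdn-cgi/"]

for url in ("/shoes?aff_id=42", "/shoes?color=red&aff_id=42", "/shoes?color=red", "/cdn-cgi/challenge"):
    print(url, is_blocked(url, patterns))
```

The second wildcard in `/*?*aff_id=` matters: without it, the rule would only match when the parameter comes first in the query string.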

#9 about 1 minute

Using robots.txt to verify cross-domain sitemap ownership

By including a sitemap directive in your robots.txt file, you can prove ownership and point crawlers to sitemaps located in different subdirectories or even on different domains.
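
As a small illustration, Python's standard `urllib.robotparser` (version 3.8 or later) exposes the sitemap lines it finds. The robots.txt content and both hostnames below are hypothetical; the second sitemap lives on a different domain than the file that references it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
robots_lines = [
    "User-agent: *",
    "Disallow:",
    "",
    "Sitemap: https://www.example.com/sitemaps/pages.xml",
    "Sitemap: https://sitemaps.example.net/example-com.xml",
]

parser = RobotFileParser()
parser.parse(robots_lines)

# site_maps() returns None when no Sitemap lines are present.
sitemaps = parser.site_maps() or []
for sitemap_url in sitemaps:
    print(sitemap_url)
```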

#10 about 3 minutes

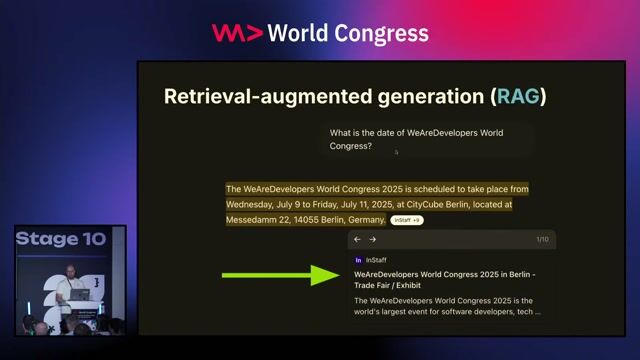

Controlling AI and LLM access to your website content

Use specific user-agent tokens like 'Google-Extended' and 'CCBot' in robots.txt to control which AI models can use your content for training.
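
A minimal sketch of such a group, checked with Python's standard `urllib.robotparser`: the two tokens come from the summary above, while the rules, the example.com URL, and the choice to block both tokens site-wide are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block two AI-related tokens, allow everyone else.
robots_lines = [
    "User-agent: Google-Extended",
    "User-agent: CCBot",
    "Disallow: /",
    "",
    "User-agent: *",
    "Allow: /",
]

parser = RobotFileParser()
parser.parse(robots_lines)

page = "https://example.com/articles/robots-guide"
for agent in ("Google-Extended", "CCBot", "Googlebot"):
    print(agent, parser.can_fetch(agent, page))
```

Here the two listed tokens are denied while Googlebot falls through to the `*` group, so regular search crawling is unaffected by the AI-specific rules.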

#11 about 4 minutes

Directives for Bing, AI Overviews, and images

Bing uses 'noarchive' meta tags instead of robots.txt for AI control, while opting out of Google's AI Overviews requires a 'nosnippet' tag, which is separate from blocking training.
