Tomislav Tipurić

Exploring LLMs across clouds

#1 about 3 minutes

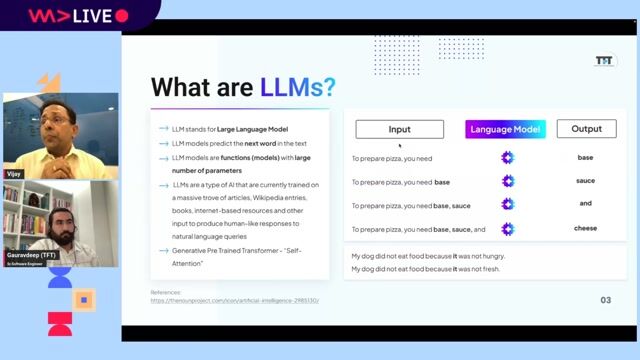

Understanding the fundamentals of large language models

Large language models work by repeatedly predicting the most probable next token in a sequence, with a "temperature" setting controlling how random that choice is.
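The effect of temperature can be illustrated with a minimal sketch. It assumes a toy three-word vocabulary and made-up scores (`vocab` and `logits` are hypothetical, not taken from the talk or from any real model) and shows how dividing the scores by the temperature before a softmax sharpens or flattens the resulting probabilities.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature, rng):
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical vocabulary and scores, for illustration only.
vocab = ["cat", "dog", "car"]
logits = [2.0, 1.0, 0.1]
rng = random.Random(0)

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    token = sample_next_token(vocab, logits, t, rng)
    print(f"temperature={t}: probs={[round(p, 3) for p in probs]} sample={token}")
```

At a low temperature almost all of the probability mass sits on the highest-scoring token, so output is close to deterministic; at a higher temperature the alternatives become more likely.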

#2 about 4 minutes

Tracing the evolution from LLMs to agentic AI

The journey from text-only models to multimodal interfaces and reasoning models has led to the development of autonomous, event-triggered agents.

#3 about 2 minutes

Comparing the LLM strategies of major cloud providers

Microsoft leverages its partnership with OpenAI, Google develops its own Gemini models, and Amazon is building out its Nova family of models.

#4 about 4 minutes

A detailed breakdown of foundational models by vendor

Each cloud provider offers a suite of specialized models for tasks like text embedding, multimodal input, reasoning, and image or audio generation.

#5 about 3 minutes

Comparing LLM performance benchmarks and pricing models

While Google and OpenAI consistently top performance leaderboards, cloud vendors are evening out their pricing for input and output tokens.
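Token-based pricing usually comes down to simple arithmetic: input and output tokens are billed at separate per-million rates. The sketch below assumes that billing shape; the function name and the prices are placeholders for illustration only, not actual rates from any vendor.

```python
def estimate_cost(input_tokens, output_tokens, price_in_per_million, price_out_per_million):
    """Estimate the cost of one request from token counts and per-million-token prices."""
    input_cost = input_tokens / 1_000_000 * price_in_per_million
    output_cost = output_tokens / 1_000_000 * price_out_per_million
    return input_cost + output_cost

# Placeholder prices (currency units per million tokens), NOT real vendor rates.
PRICE_IN = 1.0
PRICE_OUT = 4.0

cost = estimate_cost(input_tokens=12_000, output_tokens=800,
                     price_in_per_million=PRICE_IN, price_out_per_million=PRICE_OUT)
print(f"estimated cost: {cost:.4f}")
```

Because output tokens are typically priced higher than input tokens, long generated answers can dominate the bill even when prompts are large.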

#6 about 6 minutes

Understanding retrieval-augmented generation (RAG)

RAG enhances LLM capabilities by grounding them in private data, retrieving relevant information to provide accurate, context-specific answers.
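The retrieve-then-ground flow can be sketched in a few lines. This is a simplified illustration under stated assumptions: retrieval here uses plain word overlap as a stand-in for a real search service, the documents are invented, and the final call to a model is left out, so only the prompt that would be sent is built.

```python
def tokenize(text):
    """Lowercase the text, strip basic punctuation, and return its words as a set."""
    cleaned = text.lower().replace("?", "").replace(".", "").replace(",", "")
    return set(cleaned.split())

def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question (stand-in for real search)."""
    question_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(question_words & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents, top_k=2):
    """Put the retrieved passages in front of the question so the model answers from them."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

# Invented private documents, for illustration only.
documents = [
    "The travel policy allows economy flights for trips under six hours.",
    "Expense reports must be submitted within 30 days.",
    "The office cafeteria opens at 8 am.",
]

prompt = build_prompt("When must expense reports be submitted?", documents, top_k=1)
print(prompt)
```

In a real system the prompt would then be passed to a model, and the overlap scoring would be replaced by a search index or vector database.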

#7 about 1 minute

How vector search enables semantic information retrieval

Vector search works by representing text as numerical vectors, where texts that lie closer together in the vector space are closer in meaning.
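A minimal sketch of the idea, assuming cosine similarity as the proximity measure. The three-dimensional vectors below are hand-written stand-ins for real embeddings (which would come from an embedding model and have hundreds or thousands of dimensions), and the `index` contents are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query_vec, index, top_k=1):
    """Return the top_k texts whose vectors are most similar to the query vector."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]

# Hand-made toy "embeddings", for illustration only.
index = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Steps to recover account access": [0.8, 0.2, 0.1],
    "Quarterly revenue report": [0.0, 0.1, 0.9],
}

# Pretend this is the embedding of the query "I forgot my login".
query = [0.85, 0.15, 0.05]
print(nearest(query, index, top_k=2))
```

The two account-related texts rank above the revenue report even though the query shares no words with them, which is the point of searching by meaning instead of by keyword.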

#8 about 3 minutes

Comparing the RAG ecosystem across cloud platforms

Each major cloud offers a complete ecosystem for RAG, including proprietary search solutions, vector databases, storage, and integrated AI studio environments.

#9 about 2 minutes

Exploring practical industry use cases for LLMs

Enterprises are already implementing LLMs for document processing automation, contact center analytics, media analysis, and retail recommendation engines.

#10 about 1 minute

Implementing generative AI in development teams effectively

Successfully integrating AI tools into development workflows requires a structured change management process, including planning, testing, and documentation.

Related Videos

42:26

42:26How to Avoid LLM Pitfalls - Mete Atamel and Guillaume Laforge

Meta Atamel, Guillaume Laforge

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá, Cedric Clyburn

26:30

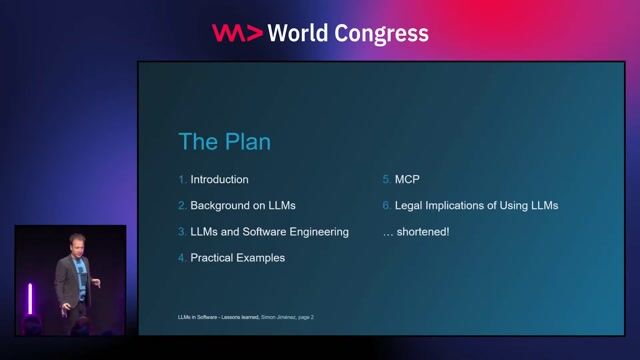

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

31:12

31:12Using LLMs in your Product

Daniel Töws

24:24

24:24Best practices: Building Enterprise Applications that leverage GenAI

Damir

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

27:11

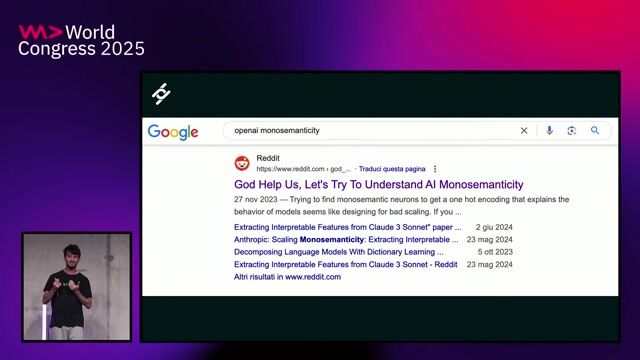

27:11Inside the Mind of an LLM

Emanuele Fabbiani

58:00

58:00Creating Industry ready solutions with LLM Models

Vijay Krishan Gupta & Gauravdeep Singh Lotey
