Mete Atamel & Guillaume Laforge

How to Avoid LLM Pitfalls - Mete Atamel and Guillaume Laforge

#1 about 2 minutes

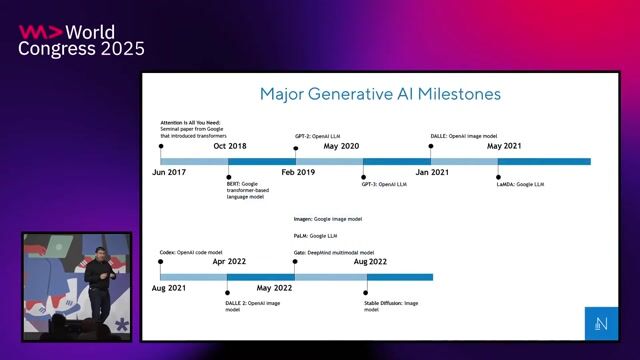

The exciting and overwhelming pace of AI development

The rapid evolution of AI creates both excitement for new possibilities and anxiety about keeping up with new models and papers.

#2 about 2 minutes

Choosing the right AI-powered developer tools and IDEs

Developers are using a mix of IDEs like VS Code and browser-based environments like IDX, enhanced with AI assistants like Gemini Code Assist.

#3 about 4 minutes

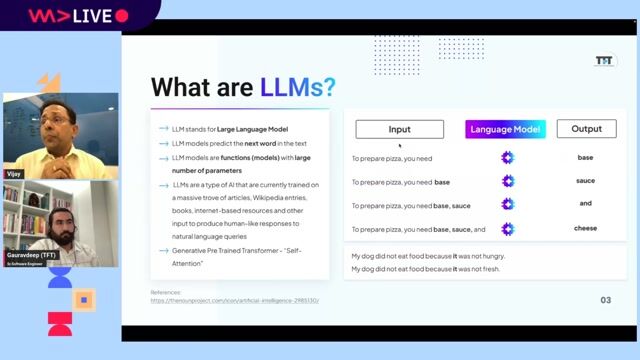

Understanding the fundamental concepts behind LLMs

Exploring foundational LLM questions, such as why they use tokens or struggle with math, is key to understanding their capabilities and limitations.

#4 about 2 minutes

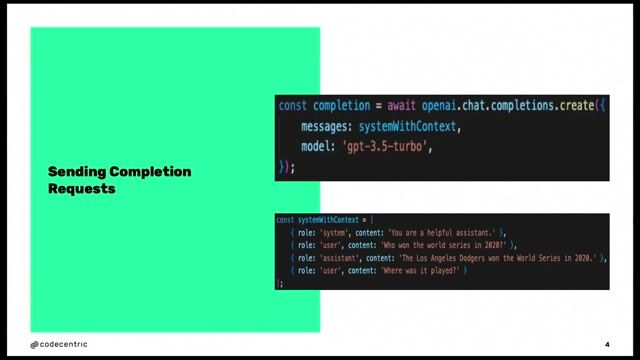

Why LLMs require pre- and post-processing pipelines

Real-world LLM applications are more than a single API call, requiring data pre-processing and output post-processing for reliable results.

#5 about 4 minutes

Balancing creativity and structure in LLM outputs

Using a multi-step process, where an initial creative generation is followed by structured extraction, can yield better and more reliable results.
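A minimal sketch of such a two-step flow, assuming the model is reachable through a plain `prompt -> text` callable. `fake_llm` is a hypothetical stand-in so the example runs offline, and the prompts and the `name`/`tagline` keys are illustrative choices, not taken from the talk.

```python
import json
import re
from typing import Callable


def creative_then_structured(topic: str, llm: Callable[[str], str]) -> dict:
    """Step 1: free-form generation. Step 2: structured extraction from the draft."""
    draft = llm(f"Write a short, vivid product pitch about {topic}.")
    raw = llm(
        "Extract a JSON object with keys 'name' and 'tagline' "
        f"from the following text. Reply with JSON only.\n\n{draft}"
    )
    # Models often wrap JSON in prose or code fences, so take the {...} span.
    match = re.search(r"\{.*\}", raw, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(match.group(0))
    missing = {"name", "tagline"} - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    if prompt.startswith("Extract"):
        return 'Sure! {"name": "SolarKettle", "tagline": "Tea from sunshine"}'
    return "Meet SolarKettle, the kettle that brews tea from sunshine."


result = creative_then_structured("a solar kettle", fake_llm)
```

Keeping the two calls separate lets the first prompt stay open-ended while the second is validated strictly, so a malformed extraction can be retried without regenerating the draft.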

#6 about 3 minutes

Mitigating LLM hallucinations with data grounding

Grounding LLM responses in external data, from sources like Google Search or a private RAG pipeline, is one of the most effective ways to reduce hallucinations.
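As a rough illustration of grounding, the sketch below retrieves the most relevant snippets and places them in the prompt. It assumes a toy in-memory document list and naive word-overlap scoring; a real RAG pipeline would typically use embeddings and a vector store. `DOCS`, `retrieve`, and `grounded_prompt` are hypothetical names.

```python
import re

# Toy "private data"; in practice this would come from a document store.
DOCS = [
    "The Model X200 vacuum has a 45 minute battery life.",
    "Returns are accepted within 30 days of purchase.",
    "The Model X200 vacuum weighs 2.4 kilograms.",
]


def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def grounded_prompt(question: str, docs: list[str]) -> str:
    """Build a prompt that tells the model to answer only from the context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


prompt = grounded_prompt("How long is the battery life of the X200?", DOCS)
```

The explicit "say you do not know" instruction matters as much as the retrieved context: it gives the model a permitted alternative to inventing an answer.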

#7 about 3 minutes

Overcoming the challenge of stale data in LLMs

Use techniques like RAG with up-to-date private data or provide the LLM with tools to call external APIs for live information.
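The tool-calling side can be sketched as a small dispatcher, assuming the model replies with a JSON object naming a tool and its arguments. That JSON shape, the tool names, and the hard-coded exchange rate are all assumptions made for illustration; real model APIs define their own function-calling formats.

```python
import json
from datetime import datetime, timezone


def get_current_time() -> str:
    """Live information the model cannot know from its training data."""
    return datetime.now(timezone.utc).isoformat()


def get_exchange_rate(base: str, quote: str) -> float:
    """Hypothetical tool; a real one would call a live API."""
    rates = {("EUR", "USD"): 1.1}  # placeholder value, not real data
    return rates[(base, quote)]


# Only functions registered here can be invoked by the model.
TOOLS = {
    "get_current_time": get_current_time,
    "get_exchange_rate": get_exchange_rate,
}


def run_tool_call(model_reply: str):
    """Parse a JSON tool request from the model and execute it."""
    call = json.loads(model_reply)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


reply = '{"name": "get_exchange_rate", "arguments": {"base": "EUR", "quote": "USD"}}'
rate = run_tool_call(reply)
```

The tool result would then be sent back to the model in a follow-up turn so it can phrase the final answer with fresh data.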

#8 about 4 minutes

Managing the cost of long context windows

Reduce the cost and latency of large inputs by using techniques like context caching for reusable data and batch generation for parallel processing.

#9 about 4 minutes

Ensuring data quality and security in LLM systems

Implement guardrails, PII redaction, and proper data filtering to prevent garbage outputs and protect sensitive information in your LLM applications.
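A minimal sketch of PII redaction as a pre-processing step, assuming simple regular expressions for email addresses and phone numbers. The patterns are deliberately rough and will miss many real-world formats; production systems usually rely on a dedicated data-loss-prevention service or library.

```python
import re

# Rough patterns for illustration only; they are not exhaustive.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace emails and phone numbers with placeholders before calling the LLM."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


clean = redact_pii("Mail jane.doe@example.com or call +1 555 010 9999.")
```

Running redaction before the prompt is built means sensitive values never reach the model or its logs.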

#10 about 4 minutes

Exploring the rise of agentic AI systems

Agentic AI systems act on a user's behalf, so building them safely requires a strong focus on security and sandboxed execution environments.

#11 about 4 minutes

The future of LLMs as a seamless user experience

The ultimate success of generative AI will be its seamless and invisible integration into everyday applications, improving the user experience without requiring separate apps.

#12 about 2 minutes

Avoiding the chatbot trap with a human handoff

A critical mistake in AI implementation is failing to provide a clear and accessible path for users to connect with a human when the AI cannot resolve their issue.
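One possible shape for a handoff rule, sketched under assumed thresholds: escalate as soon as the user asks for a person, or after the bot fails to resolve the issue twice. The phrase list, the `MAX_FAILED_TURNS` value, and the `bot_resolved` flag are hypothetical; a real system would derive them from its own signals.

```python
from dataclasses import dataclass

HANDOFF_PHRASES = ("human", "agent", "real person")  # assumed trigger phrases
MAX_FAILED_TURNS = 2  # assumed threshold


@dataclass
class Conversation:
    failed_turns: int = 0


def next_step(convo: Conversation, user_message: str, bot_resolved: bool) -> str:
    """Return 'handoff' when the user should be routed to a human, else 'continue'."""
    # An explicit request for a person always wins.
    if any(p in user_message.lower() for p in HANDOFF_PHRASES):
        return "handoff"
    if not bot_resolved:
        convo.failed_turns += 1
    if convo.failed_turns >= MAX_FAILED_TURNS:
        return "handoff"
    return "continue"


convo = Conversation()
first = next_step(convo, "My order is late", bot_resolved=False)
second = next_step(convo, "It is still late", bot_resolved=False)
```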

#13 about 3 minutes

How to stay current in the fast-paced field of AI

To keep up with AI developments, follow curated newsletters and credible sources to understand emerging trends and discover new possibilities for your applications.
