Ahmed Megahd

Streaming AI Responses in Real-Time with SSE in Next.js & NestJS

#1 · about 4 minutes

Why streaming AI responses improves user experience

Streaming AI text token-by-token significantly improves user retention and engagement compared to showing a loading screen.

#2 · about 2 minutes

Comparing SSE, WebSockets, and polling for real-time data

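Server-Sent Events (SSE) offer a lightweight, unidirectional alternative to WebSockets for pushing data from server to client over a single long-lived HTTP connection, without the repeated requests of polling; the talk cites roughly half the memory per connection compared with WebSockets.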
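Part of what makes SSE lightweight is its wire format: plain UTF-8 text written to one long-lived HTTP response. The sketch below serializes a single event. `SseMessage` and `formatSseEvent` are illustrative names; the `event`, `id`, `retry`, and `data` fields and the blank-line terminator come from the SSE specification.

```typescript
// Fields defined by the SSE wire format.
interface SseMessage {
  data: string;
  event?: string; // custom event type; omitted means the default "message" event
  id?: string;    // remembered by the browser and resent as Last-Event-ID on reconnect
  retry?: number; // reconnection delay hint in milliseconds
}

// Serializes one message into text/event-stream format:
// each field is "name: value\n", and a blank line ends the event.
function formatSseEvent(msg: SseMessage): string {
  let out = "";
  if (msg.event !== undefined) out += `event: ${msg.event}\n`;
  if (msg.id !== undefined) out += `id: ${msg.id}\n`;
  if (msg.retry !== undefined) out += `retry: ${msg.retry}\n`;
  // Multi-line payloads need one "data:" line per line; the browser
  // rejoins them with "\n" before dispatching the event.
  for (const line of msg.data.split(/\r\n|\r|\n/)) {
    out += `data: ${line}\n`;
  }
  return out + "\n";
}

console.log(JSON.stringify(formatSseEvent({ event: "token", data: "Hello\nworld" })));
// prints "event: token\ndata: Hello\ndata: world\n\n"
```

Because every payload line gets its own `data:` prefix, text containing newlines arrives intact.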

#3 · about 4 minutes

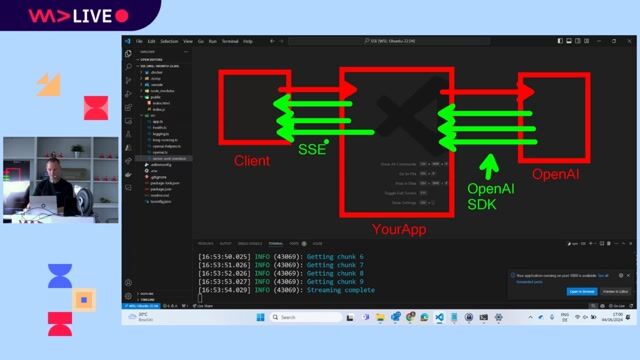

A full-stack architecture for streaming AI responses

The frontend uses the browser's EventSource API to subscribe to a NestJS backend endpoint that streams data from an AI provider.
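In this setup the NestJS backend is itself a streaming client: OpenAI's streaming API, for instance, returns its output as server-sent events. Provider SDKs normally parse that stream for you; the hypothetical `SseParser` below is a minimal sketch of what such parsing involves, including buffering events that arrive split across network chunks. It handles LF and CRLF line endings but not bare CR, and it ignores the `retry` field.

```typescript
interface ParsedEvent {
  event: string; // "message" unless the server sent an "event:" field
  data: string;
  id?: string;
}

// Incremental text/event-stream parser. Network chunks can split an event
// (or a single line) anywhere, so the unfinished tail is buffered.
class SseParser {
  private buffer = "";
  private dataLines: string[] = [];
  private eventType = "";
  private lastId: string | undefined;

  // Feeds one chunk of text and returns every event it completed.
  push(chunk: string): ParsedEvent[] {
    const events: ParsedEvent[] = [];
    const lines = (this.buffer + chunk).split("\n");
    this.buffer = lines.pop() ?? ""; // text after the last "\n" is incomplete
    for (const raw of lines) {
      const line = raw.endsWith("\r") ? raw.slice(0, -1) : raw;
      if (line === "") {
        // A blank line ends the event; dispatch it if any data was collected.
        if (this.dataLines.length > 0) {
          events.push({ event: this.eventType || "message", data: this.dataLines.join("\n"), id: this.lastId });
        }
        this.dataLines = [];
        this.eventType = "";
      } else if (!line.startsWith(":")) { // lines starting with ":" are comments
        const colon = line.indexOf(":");
        const field = colon === -1 ? line : line.slice(0, colon);
        let value = colon === -1 ? "" : line.slice(colon + 1);
        if (value.startsWith(" ")) value = value.slice(1);
        if (field === "data") this.dataLines.push(value);
        else if (field === "event") this.eventType = value;
        else if (field === "id") this.lastId = value;
      }
    }
    return events;
  }
}
```

Feed it text decoded with `TextDecoder` using the `{ stream: true }` option, so multi-byte characters split across network chunks are not corrupted.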

#4 · about 2 minutes

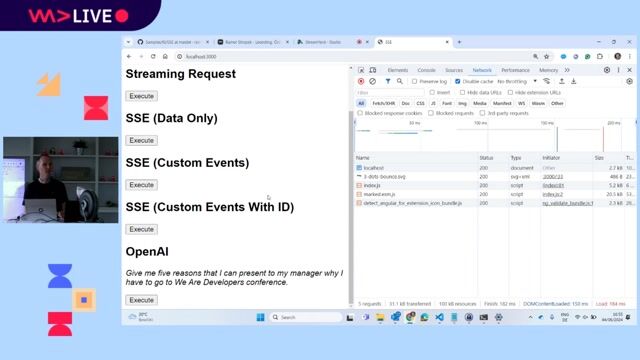

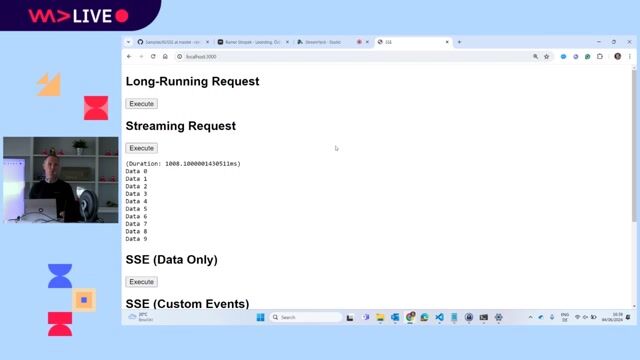

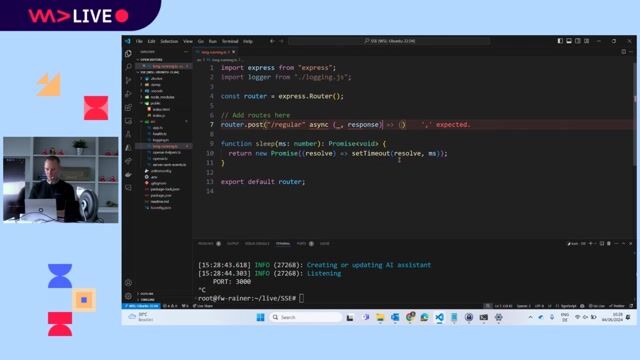

Implementing an SSE endpoint in NestJS for AI streaming

Set the `text/event-stream` content type and use a loop to push data chunks received from the OpenAI or Gemini streaming API to the client.
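A framework-agnostic sketch of that loop, assuming an Express-style response object (`SseResponse` is a hypothetical subset of it) and an async iterable of text chunks standing in for the OpenAI or Gemini SDK stream. Chunks are JSON-encoded so newlines inside model output cannot break the event framing, and the final `[DONE]` message is an assumed convention (OpenAI's own stream ends the same way), not part of SSE.

```typescript
// The subset of an Express-style response used here (hypothetical interface).
interface SseResponse {
  setHeader(name: string, value: string): void;
  write(chunk: string): void;
  end(): void;
}

// Forwards each chunk of an AI provider's stream to the client as one SSE event.
// `chunks` stands in for the provider SDK stream, already mapped to text.
async function pipeToSse(res: SseResponse, chunks: AsyncIterable<string>): Promise<void> {
  res.setHeader("Content-Type", "text/event-stream");
  res.setHeader("Cache-Control", "no-cache");
  try {
    for await (const chunk of chunks) {
      // JSON-encoding keeps newlines in model output from breaking the event framing.
      res.write(`data: ${JSON.stringify({ text: chunk })}\n\n`);
    }
    // Assumed end-of-stream marker so the client knows to close its connection.
    res.write("data: [DONE]\n\n");
  } finally {
    res.end(); // close the response even if the provider stream throws
  }
}
```

In a NestJS controller, a `@Get()` handler that injects the underlying response with `@Res()` could call `pipeToSse(res, stream)`. NestJS also provides an `@Sse()` decorator whose handler returns an `Observable` of message events, which avoids handling the response object directly.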

#5 · about 2 minutes

Consuming SSE streams in Next.js with EventSource

Use the native `EventSource` object to connect to the streaming endpoint and append incoming data to the component's state for a typewriter effect.
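A minimal sketch of the client-side accumulation logic, kept free of React so it can be tested on its own. `createStreamAppender`, the `/api/stream` route in the comment, and the `[DONE]` end marker are assumptions; the optional `decode` argument covers servers that JSON-encode each chunk. Since `EventSource` only issues GET requests, the prompt travels in the query string here.

```typescript
// The only part of the browser's MessageEvent this sketch reads.
type DataEvent = { data: string };

// Returns an onmessage handler that accumulates streamed chunks and reports
// the full text so far; storing it in component state gives the typewriter effect.
function createStreamAppender(
  onText: (fullText: string) => void,
  onDone: () => void,
  decode: (raw: string) => string = (raw) => raw,
  doneMarker = "[DONE]", // assumed sentinel; match what your backend sends
): (ev: DataEvent) => void {
  let text = "";
  return (ev) => {
    if (ev.data === doneMarker) {
      onDone();
      return;
    }
    text += decode(ev.data);
    onText(text);
  };
}

// Possible wiring in a Next.js client component ("use client"):
//   useEffect(() => {
//     const es = new EventSource(`/api/stream?prompt=${encodeURIComponent(prompt)}`);
//     es.onmessage = createStreamAppender(setAnswer, () => es.close());
//     es.onerror = () => es.close();
//     return () => es.close();
//   }, [prompt]);
```

Closing the `EventSource` on the end marker matters: when the server ends the response normally, the browser treats it as a dropped connection and reconnects automatically, which with this URL would request a fresh generation.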

#6 · about 5 minutes

Using SSE for notifications and real-time file sharing

A code demonstration shows how to manage multiple client connections and push different event types, such as notifications or file data, to all subscribers.
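One way to manage many connections is a small hub that keeps a send function per client and writes each event to all of them. The sketch is hypothetical (`SseHub` and the two event shapes are illustrative). The SSE `event:` line carries the type, so browsers handle each kind with `addEventListener("notification", ...)`; named events do not reach `onmessage`. Since SSE is text-only, the file payload is assumed to be base64-encoded.

```typescript
// Writes raw SSE text to one open connection (e.g. res.write in Express).
type Send = (frame: string) => void;

// Illustrative event types; file bytes travel as base64 because SSE is text-only.
type HubEvent =
  | { type: "notification"; payload: { title: string; body: string } }
  | { type: "file"; payload: { name: string; base64: string } };

class SseHub {
  private clients = new Map<number, Send>();
  private nextId = 1;

  // Registers a client; call the returned function when its connection closes.
  subscribe(send: Send): () => void {
    const id = this.nextId++;
    this.clients.set(id, send);
    return () => {
      this.clients.delete(id);
    };
  }

  get size(): number {
    return this.clients.size;
  }

  // Sends one event to every subscriber.
  broadcast(ev: HubEvent): void {
    const frame = `event: ${ev.type}\ndata: ${JSON.stringify(ev.payload)}\n\n`;
    for (const [id, send] of this.clients) {
      try {
        send(frame);
      } catch {
        this.clients.delete(id); // drop clients whose send throws
      }
    }
  }
}
```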

#7 · about 2 minutes

Preparing an SSE implementation for production environments

Ensure reliability in production by adding authentication guards, rate limiting, and keep-alive messages, and by disabling proxy buffering in Nginx.
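The keep-alive and proxy-buffering items can be sketched as follows. `X-Accel-Buffering: no` is a response header Nginx honors to disable buffering for that one response (the alternative is `proxy_buffering off;` in the location block). The 15-second interval is an assumed default chosen to stay below Nginx's default 60-second `proxy_read_timeout`; the constant and function names are illustrative.

```typescript
// Response headers commonly used for SSE behind a reverse proxy.
const SSE_HEADERS: Readonly<Record<string, string>> = {
  "Content-Type": "text/event-stream",
  "Cache-Control": "no-cache",
  // Tells Nginx not to buffer this response, so events are forwarded immediately.
  "X-Accel-Buffering": "no",
};

// Lines starting with ":" are SSE comments: EventSource ignores them, but the
// traffic keeps proxies and load balancers from closing an idle connection.
const HEARTBEAT_FRAME = ": keep-alive\n\n";

// Writes a heartbeat every intervalMs and returns a function that stops it.
// 15 s is an assumed default, below Nginx's default 60 s proxy_read_timeout.
function startHeartbeat(write: (frame: string) => void, intervalMs = 15_000): () => void {
  const timer = setInterval(() => write(HEARTBEAT_FRAME), intervalMs);
  return () => clearInterval(timer);
}
```

Call the returned stop function when the client disconnects. Authentication and rate limiting fit into NestJS guards that run before the stream opens, for example a `CanActivate` guard or the `@nestjs/throttler` package. `EventSource` cannot send custom headers such as `Authorization`, so browser clients typically authenticate with cookies or a short-lived token in the URL.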

#8 · about 2 minutes

Scaling SSE applications for thousands of concurrent users

For large-scale applications, progress from a simple load balancer to using Redis Streams for message queuing or a dedicated SSE hub infrastructure.
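With several backend instances behind a load balancer, a client may be connected to one instance while the event is produced on another, so events have to flow through shared storage. A Redis Stream is an append-only log with ordered IDs, which also lets a reconnecting client resume: the browser resends the last `id:` it received in the `Last-Event-ID` header. The sketch below uses a hypothetical in-memory `EventLog` as a stand-in for Redis (where `XADD` appends and `XREAD` reads); real stream IDs look like `1526919030474-0` and would be passed through as strings rather than numbers. This replay angle is one option, not necessarily the talk's design.

```typescript
interface LogEntry {
  id: number;
  data: string; // assumed single-line (e.g. JSON) so one "data:" line suffices
}

// In-memory stand-in for a Redis Stream: an append-only log with increasing IDs.
class EventLog {
  private entries: LogEntry[] = [];
  private nextId = 1;

  append(data: string): number {
    const id = this.nextId++;
    this.entries.push({ id, data });
    return id;
  }

  // Entries newer than lastId; the array is already in ID order.
  readAfter(lastId: number): LogEntry[] {
    return this.entries.filter((e) => e.id > lastId);
  }
}

// Builds the frames to send when a client (re)connects. Including "id:" makes
// the browser send the latest ID back in Last-Event-ID on its next reconnect.
function replayFrames(log: EventLog, lastEventIdHeader: string | undefined): string[] {
  const lastId = Number(lastEventIdHeader ?? 0) || 0; // missing or invalid: from the start
  return log.readAfter(lastId).map((e) => `id: ${e.id}\ndata: ${e.data}\n\n`);
}
```

In Node the header arrives lowercased, as `req.headers["last-event-id"]`. A fresh connection replays the whole log in this sketch; a real deployment would usually start new clients at the latest entry instead.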

#9 · about 2 minutes

Comparing AI providers for optimal streaming performance

AI providers such as Groq, Gemini, and OpenAI differ in their streaming approach, sending either token-by-token or chunk-by-chunk responses, which affects perceived speed.
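To compare providers on perceived speed, it can help to time the first chunk separately from the whole response and to look at the average chunk size, which separates token-by-token from chunk-by-chunk streaming. `timeStream` is a hypothetical helper that accepts any async iterable of text, such as a provider SDK stream mapped to strings; it is not a benchmark from the talk.

```typescript
interface StreamTiming {
  firstChunkMs: number;  // delay before the first chunk (-1 if none arrived)
  totalMs: number;       // time until the stream finished
  chunks: number;        // number of pieces the provider sent
  avgChunkChars: number; // small values suggest token-by-token streaming
}

// Consumes a text stream, recording when the first chunk arrives and when it ends.
async function timeStream(stream: AsyncIterable<string>): Promise<StreamTiming> {
  const start = Date.now();
  let firstChunkMs = -1;
  let chunks = 0;
  let chars = 0;
  for await (const chunk of stream) {
    if (chunks === 0) firstChunkMs = Date.now() - start;
    chunks++;
    chars += chunk.length;
  }
  return {
    firstChunkMs,
    totalMs: Date.now() - start,
    chunks,
    avgChunkChars: chunks === 0 ? 0 : chars / chunks,
  };
}
```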

#10 · about 3 minutes

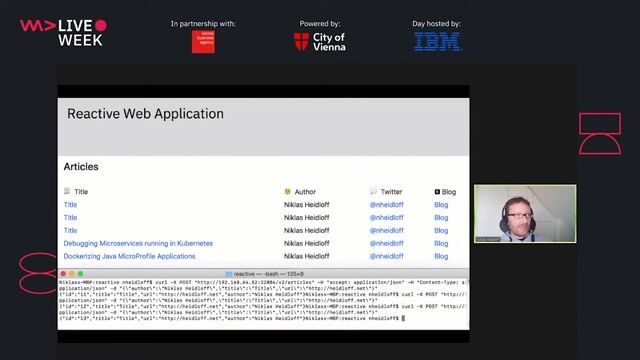

Syncing data from ChatGPT to multiple client applications

A custom GPT action can trigger a backend process that uses SSE to push new data in real time to a user's browser extension, desktop app, and mobile app simultaneously.
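A sketch of the per-user fan-out, assuming every client registers its SSE connection under a user ID and a device label. `UserConnections`, `handleGptAction`, and the `item-added` event name are hypothetical; how the GPT action authenticates and identifies the user is left out.

```typescript
// Writes raw SSE text to one open connection (e.g. res.write).
type Send = (frame: string) => void;

// Open SSE connections grouped by user, so one update reaches every device
// the user has connected (browser extension, desktop app, mobile app).
class UserConnections {
  private byUser = new Map<string, Map<string, Send>>();

  // Registers a connection; the returned function unregisters it on close.
  connect(userId: string, deviceId: string, send: Send): () => void {
    const devices = this.byUser.get(userId) ?? new Map<string, Send>();
    this.byUser.set(userId, devices);
    devices.set(deviceId, send);
    return () => {
      // Only remove this exact connection, not a newer one for the same device.
      if (devices.get(deviceId) === send) devices.delete(deviceId);
      if (devices.size === 0 && this.byUser.get(userId) === devices) this.byUser.delete(userId);
    };
  }

  // Pushes one named event to all of a user's devices; returns how many received it.
  pushToUser(userId: string, event: string, data: unknown): number {
    const devices = this.byUser.get(userId);
    if (!devices) return 0;
    const frame = `event: ${event}\ndata: ${JSON.stringify(data)}\n\n`;
    for (const send of devices.values()) send(frame);
    return devices.size;
  }
}

// Hypothetical body of the endpoint the custom GPT action calls: after storing
// the new item (omitted), notify every open client of that user.
function handleGptAction(conns: UserConnections, userId: string, item: { title: string }): number {
  return conns.pushToUser(userId, "item-added", item);
}
```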

#11 · about 1 minute

Understanding SSE limitations and its key benefits

Use SSE for unidirectional server-to-client data push, where it offers low latency and, per the talk, better user trust; choose other protocols where it does not fit, such as WebRTC for video or gRPC for communication between microservices.
