Roman Alexis Anastasini

Using code generation for test automation – the fancy way

#1 about 3 minutes

Setting up NSwag for OpenAPI documentation in .NET

Replace the default Swashbuckle with NSwag to generate OpenAPI specifications for a new .NET web application.

#2 about 3 minutes

Automating swagger.json generation on every build

Configure the project file to automatically run the NSwag command-line tool after each build to create an up-to-date swagger.json file.

#3 about 4 minutes

Generating a typed C# client for the test project

Use the generated swagger.json file to automatically create a strongly-typed C# HTTP client within the test project.

#4 about 4 minutes

Using the generated client for robust API-level tests

Write API-level tests that consume the generated client, ensuring that any API signature changes result in compile-time errors.
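As a minimal sketch of the idea, the following uses a hand-written stand-in for the generated client. `TodoDto`, `TodoClient`, `GetTodoAsync`, and the `/api/todos/{id}` route are hypothetical names chosen for illustration and are not taken from the talk. A stub `HttpMessageHandler` replaces the real server so the snippet runs on its own; in a real test project the `HttpClient` would more likely come from the test host.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Json;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical DTO, standing in for a class generated from swagger.json.
public record TodoDto(int Id, string Title);

// Hypothetical, hand-written stand-in for a generated typed client.
public class TodoClient
{
    private readonly HttpClient _http;
    public TodoClient(HttpClient http) => _http = http;

    public async Task<TodoDto> GetTodoAsync(int id)
    {
        var dto = await _http.GetFromJsonAsync<TodoDto>($"/api/todos/{id}");
        return dto ?? throw new InvalidOperationException("Empty response body.");
    }
}

// Stub transport so the sketch runs without a server.
public class StubHandler : HttpMessageHandler
{
    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var response = new HttpResponseMessage(HttpStatusCode.OK)
        {
            Content = JsonContent.Create(new TodoDto(1, "Write tests"))
        };
        return Task.FromResult(response);
    }
}

public static class TodoApiTests
{
    // The test body only touches typed members. If the API renames the
    // operation or changes the DTO, the regenerated client changes too,
    // and this method stops compiling instead of failing at runtime.
    public static async Task<TodoDto> GetTodo_ReturnsTypedDto()
    {
        var http = new HttpClient(new StubHandler())
        {
            BaseAddress = new Uri("http://localhost")
        };
        var client = new TodoClient(http);
        return await client.GetTodoAsync(1);
    }
}
```

The point of the design is that the test never builds URLs or parses JSON by hand, so the compiler checks every call against the current API surface.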

#5 about 7 minutes

Testing DTOs, validation, and API error handling

Leverage the generated client and DTOs to easily test complex validation rules, serialization formats, and API exception handling.
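A validation test along these lines might look like the sketch below. NSwag-generated C# clients report unexpected status codes through an `ApiException` with a `StatusCode` property; the `ApiException` here is a simplified hand-written stand-in, and `CreateTodoRequest`, `TodoClient`, the route, and the "title must not be empty" rule are assumptions made for illustration. The stub handler imitates the server-side rule so the snippet runs without a real API.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Json;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical request DTO.
public record CreateTodoRequest(string Title);

// Simplified stand-in for the exception type a generated client throws.
public class ApiException : Exception
{
    public int StatusCode { get; }
    public ApiException(string message, int statusCode) : base(message)
        => StatusCode = statusCode;
}

// Hypothetical stand-in for a generated client.
public class TodoClient
{
    private readonly HttpClient _http;
    public TodoClient(HttpClient http) => _http = http;

    public async Task CreateTodoAsync(CreateTodoRequest request)
    {
        using var response = await _http.PostAsJsonAsync("/api/todos", request);
        if (!response.IsSuccessStatusCode)
            throw new ApiException("Unexpected status code.", (int)response.StatusCode);
    }
}

// Stub transport imitating an assumed validation rule: Title must not be empty.
public class ValidatingStubHandler : HttpMessageHandler
{
    protected override async Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var body = await request.Content!
            .ReadFromJsonAsync<CreateTodoRequest>(cancellationToken: cancellationToken);
        var valid = !string.IsNullOrWhiteSpace(body?.Title);
        return new HttpResponseMessage(
            valid ? HttpStatusCode.Created : HttpStatusCode.BadRequest);
    }
}

public static class ValidationTests
{
    // Returns the status code carried by ApiException, or null on success.
    public static async Task<int?> CreateTodo_StatusFor(string title)
    {
        var http = new HttpClient(new ValidatingStubHandler())
        {
            BaseAddress = new Uri("http://localhost")
        };
        var client = new TodoClient(http);
        try
        {
            await client.CreateTodoAsync(new CreateTodoRequest(title));
            return null;
        }
        catch (ApiException ex)
        {
            return ex.StatusCode;
        }
    }
}
```

In a test framework, the `try`/`catch` would typically be replaced by the framework's own exception assertion, followed by a check on `StatusCode`.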

#6 about 4 minutes

Achieving high test coverage with API-level tests

Focusing on API-level tests provides high code coverage and confidence for refactoring while reducing the effort spent on traditional unit tests.

#7 about 2 minutes

Key lessons learned from this testing approach

This approach requires precisely written controller code, and the test setup evolved from slow Docker-based databases to faster in-memory databases.

#8 about 1 minute

Q&A: Why API tests can be more efficient than unit tests

API-level tests reduce the developer time spent writing and maintaining numerous small unit tests, especially as the codebase grows.

#9 about 1 minute

Q&A: Managing code coverage targets and exceptions

Set a high but realistic code coverage target, such as 90-95%, to avoid writing useless tests for unreachable edge cases.

#10 about 2 minutes

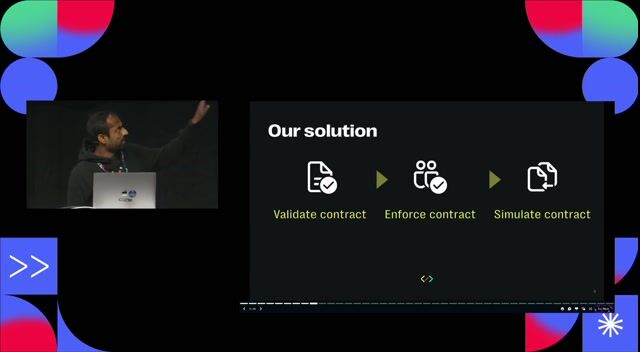

Q&A: Automating checks for breaking API changes

A potential improvement is to add a CI step that tests the new API version against a client generated from a previous API version.

#11 about 2 minutes

Q&A: Managing test state and database setup

Use the web application factory to manage test setup, such as spinning up an in-memory database and seeding it with data for each test class.

#12 about 2 minutes

Q&A: Mocking external service dependencies in tests

Isolate the API under test by mocking external service calls through interfaces, reserving full integration tests for the CI pipeline.
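The isolation described here can be sketched with a plain interface and a hand-written fake. `IExchangeRateService`, `PriceConverter`, and `FakeExchangeRateService` are hypothetical names invented for this example, and a mocking library could be used in place of the fake.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical abstraction over an external service call.
public interface IExchangeRateService
{
    Task<decimal> GetRateAsync(string from, string to);
}

// Code under test depends only on the interface, never on the real service.
public class PriceConverter
{
    private readonly IExchangeRateService _rates;
    public PriceConverter(IExchangeRateService rates) => _rates = rates;

    public async Task<decimal> ConvertAsync(decimal amount, string from, string to)
        => amount * await _rates.GetRateAsync(from, to);
}

// Hand-written fake that returns a fixed rate and makes no network call.
public class FakeExchangeRateService : IExchangeRateService
{
    private readonly decimal _rate;
    public FakeExchangeRateService(decimal rate) => _rate = rate;

    public Task<decimal> GetRateAsync(string from, string to)
        => Task.FromResult(_rate);
}
```

In an API-level test, a fake like this would usually be swapped in through the test host's service registration (for example via `ConfigureTestServices` on the web host builder), so the rest of the request pipeline still runs unchanged.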

#13 about 3 minutes

Q&A: The accidental discovery of this testing workflow

The idea of using the generated client in tests grew out of the original goal: providing an easy-to-use client for other APIs that consume this one.

Related jobs

Jobs that call for the skills explored in this talk.

Featured Partners

Related Videos

43:07

43:07How not to test

Golo Roden

30:55

30:55Why you must use TDD now!

Alex Banul

45:24

45:24How will artificial intelligence change the future of software testing?

Evelyn Haslinger

42:34

42:34How To Test A Ball of Mud

Ryan Latta

47:22

47:22Continuous testing - run automated tests for every change!

Christian Kühn

29:31

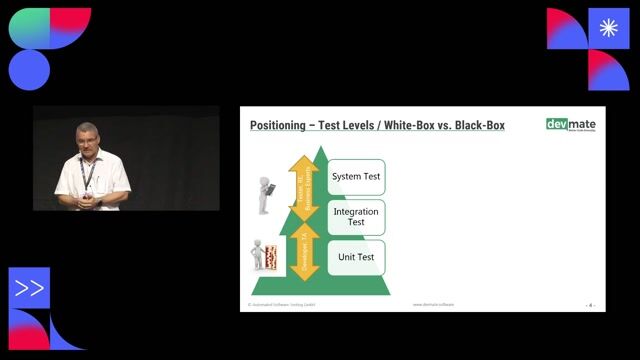

29:31Are you still programming unit tests or already generating?

Johannes Bergsmann & Daniel Bauer

26:02

26:02Contract Testing - How do you tame an external API that constantly breaks your tests

Vincent Hennig & Anupam Krishnamurthy

29:13

29:13Testing .NET applications a Tool box for every developer

Alexandre Borges

From learning to earning

Jobs that call for the skills explored in this talk.

Software Development Engineer in Test (m/w/d)

intersoft GmbH

Hamburg, Germany

Senior

Java

Automated Testing

Test System and Release Engineer (m/w/d)

AKDB Anstalt für kommunale Datenverarbeitung in Bayern

München, Germany

Intermediate

Senior

JavaScript

Automated Testing

Software QA Engineer C# | Testpläne, UnitTests, Testautomation, TFS, Git, BDD | Inhouse (mwd) Software QA Engineer C# | Testpläne, UnitTests, Testautomation, TFS, Git, BDD | Inhouse (mwd)

Vesterling Consulting GmbH

München, Germany

€55-85K

GIT

ASP.NET

Unit Testing

QA Engineer (Manual Testing Focus, Automation Experience a Plus)

Interactivated Solutions Europe

iOS

JIRA

Scrum

Python

Cypress

+3