Alan Mazankiewicz

Fully Orchestrating Databricks from Airflow

#1 · about 5 minutes

Exploring the core features of the Databricks workspace

A walkthrough of the Databricks UI shows how to create Spark clusters, run code in notebooks, and define scheduled jobs with multi-task dependencies.
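As a rough illustration of a scheduled multi-task job, the sketch below writes one as a Python dict in the shape of a Databricks Jobs API 2.1 job definition (`tasks`, `task_key`, `depends_on`, `schedule`). It then derives a valid execution order from the dependencies with the standard library's `graphlib`. The job name, notebook paths, and cluster ID are hypothetical placeholders. The ordering helper is only a local sanity check and is not how Databricks itself schedules tasks.

```python
from graphlib import TopologicalSorter

CLUSTER_ID = "0000-000000-placeholder"  # hypothetical cluster ID


def notebook_task(key, path, depends_on=()):
    """Build one task entry in the shape used by the Jobs API 2.1."""
    task = {
        "task_key": key,
        "notebook_task": {"notebook_path": path},
        "existing_cluster_id": CLUSTER_ID,
    }
    if depends_on:
        task["depends_on"] = [{"task_key": upstream} for upstream in depends_on]
    return task


job_spec = {
    "name": "daily-etl",  # placeholder job name
    # Quartz cron fields: second minute hour day-of-month month day-of-week (06:00 daily).
    "schedule": {"quartz_cron_expression": "0 0 6 * * ?", "timezone_id": "UTC"},
    "tasks": [
        notebook_task("ingest", "/Shared/etl/ingest"),
        notebook_task("clean", "/Shared/etl/clean", ["ingest"]),
        notebook_task("features", "/Shared/etl/features", ["clean"]),
        notebook_task("report", "/Shared/etl/report", ["clean"]),
        notebook_task("publish", "/Shared/etl/publish", ["features", "report"]),
    ],
}


def execution_order(spec):
    """Return task keys in an order that respects every depends_on edge."""
    graph = {
        task["task_key"]: {dep["task_key"] for dep in task.get("depends_on", [])}
        for task in spec["tasks"]
    }
    # static_order() raises graphlib.CycleError if the dependencies form a loop.
    return list(TopologicalSorter(graph).static_order())


order = execution_order(job_spec)
```

Here "features" and "report" both wait on "clean" and may run in parallel, while "publish" waits on both. That fan-out and fan-in is the kind of dependency graph the Jobs UI lets you draw.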

#2 · about 6 minutes

Understanding the fundamentals of Apache Airflow orchestration

Airflow defines workflows as DAGs written in Python and adds orchestration features such as dynamic task generation, trigger rules for complex dependency logic, and a detailed UI for monitoring DAG runs.
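To make trigger rules concrete, here is a deliberately simplified model of a few of Airflow's rule names (`all_success`, `one_failed`, `none_failed`, and so on). It is not Airflow's implementation. It assumes every upstream task has already finished and only answers whether the downstream task would run. Real Airflow can fire `one_failed` or `one_success` early, and it marks tasks that do not trigger as skipped or upstream_failed.

```python
from collections import Counter


def would_run(trigger_rule, upstream_states):
    """Simplified model: does a task run once all of its upstream tasks finished?

    upstream_states holds final states such as "success", "failed",
    "upstream_failed", or "skipped". This is not Airflow's actual code.
    """
    counts = Counter(upstream_states)
    total = len(upstream_states)
    failed = counts["failed"] + counts["upstream_failed"]
    checks = {
        "all_success": counts["success"] == total,
        "all_failed": failed == total,
        "all_done": True,  # runs regardless of how upstream tasks ended
        "one_success": counts["success"] >= 1,
        "one_failed": failed >= 1,
        "none_failed": failed == 0,  # success or skipped upstream is fine
        "none_skipped": counts["skipped"] == 0,
    }
    return checks[trigger_rule]


# A cleanup task typically uses all_done; an alerting task might use one_failed.
states = ["success", "failed"]
cleanup_runs = would_run("all_done", states)
alert_runs = would_run("one_failed", states)
report_runs = would_run("all_success", states)
```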

#3 · about 5 minutes

Integrating Databricks and Airflow with built-in operators

The DatabricksRunNowOperator triggers an existing, predefined Databricks job, while the DatabricksSubmitRunOperator submits a one-time run whose definition is built dynamically in the DAG; both work through the Databricks REST API.
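Both operators ultimately send JSON to the Jobs REST API. The sketch below uses only the standard library to build, without sending, the two kinds of request. `api/2.1/jobs/run-now` references an existing job by `job_id`, while `api/2.1/jobs/runs/submit` carries the full run definition. The host, token, job ID, notebook path, and cluster settings are placeholders. The operators' own request code (and the API version a given provider release targets) may differ in detail.

```python
import json
import urllib.request

# Placeholders: substitute a real workspace URL and personal access token to send.
HOST = "https://example-workspace.cloud.databricks.com"
TOKEN = "dapi-placeholder"


def build_request(endpoint, payload):
    """Build (but do not send) an authenticated POST to a Databricks REST endpoint."""
    return urllib.request.Request(
        url=f"{HOST}/{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {TOKEN}", "Content-Type": "application/json"},
        method="POST",
    )


# Run-now style: trigger a job that already exists in the workspace (ID 42 is hypothetical).
run_now = build_request(
    "api/2.1/jobs/run-now",
    {"job_id": 42, "notebook_params": {"run_date": "2024-01-01"}},
)

# Submit-run style: the whole one-time run definition travels with the request.
submit_run = build_request(
    "api/2.1/jobs/runs/submit",
    {
        "run_name": "adhoc-from-airflow",
        "tasks": [
            {
                "task_key": "main",
                "notebook_task": {"notebook_path": "/Shared/etl/ingest"},
                "new_cluster": {  # placeholder cluster settings
                    "spark_version": "13.3.x-scala2.12",
                    "node_type_id": "i3.xlarge",
                    "num_workers": 2,
                },
            }
        ],
    },
)
```

Passing either request to `urllib.request.urlopen` would actually send it; a successful response contains the new `run_id`.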

#4 · about 3 minutes

Creating a custom operator for full Databricks API control

To overcome the limitations of built-in operators, you can create a generic custom operator by subclassing BaseOperator and using the DatabricksHook to make arbitrary API calls.
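The generic operator idea can be sketched roughly as follows. To keep it runnable without Airflow installed, the hypothetical `GenericDatabricksCall` class does not subclass `BaseOperator` and receives its hook through a factory. In a DAG it would subclass `BaseOperator` and build `DatabricksHook(databricks_conn_id=...)` inside `execute()`. The call goes through `DatabricksHook._do_api_call`, which in the Databricks provider takes an `(HTTP method, endpoint path)` tuple and a JSON dict. Because that method is private, treat its signature as an assumption to check against your installed provider version.

```python
from typing import Any, Callable, Optional


class GenericDatabricksCall:
    """Hypothetical operator-like class that calls any Databricks REST endpoint.

    In a real DAG this would subclass airflow.models.BaseOperator and create a
    DatabricksHook inside execute(); here the hook is injected so the sketch
    runs without Airflow installed.
    """

    # On a real operator, Airflow renders Jinja templates in these attributes.
    template_fields = ("endpoint", "json")

    def __init__(self, method: str, endpoint: str,
                 hook_factory: Callable[[], Any],
                 json: Optional[dict] = None) -> None:
        self.method = method
        self.endpoint = endpoint
        self.hook_factory = hook_factory
        self.json = json or {}

    def execute(self, context: Optional[dict] = None) -> Any:
        hook = self.hook_factory()
        # Assumed private hook API: _do_api_call((method, endpoint), json).
        return hook._do_api_call((self.method, self.endpoint), self.json)


# Demo with a stand-in hook that records calls instead of hitting the network.
class FakeHook:
    def __init__(self) -> None:
        self.calls = []

    def _do_api_call(self, endpoint_info, json=None):
        self.calls.append((endpoint_info, json))
        return {"files": []}


fake = FakeHook()
op = GenericDatabricksCall("GET", "api/2.0/dbfs/list",
                           hook_factory=lambda: fake, json={"path": "/tmp"})
result = op.execute()
```

In Airflow, whatever `execute()` returns is pushed to XCom by default, so downstream tasks could read the API response.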

#5 · about 3 minutes

Implementing a custom operator to interact with DBFS

A practical example demonstrates how to use the custom generic operator to make a 'put' request to the DBFS API, including the use of Jinja templates for dynamic paths.
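With a generic operator like the one described above, the DBFS `put` request reduces to a small JSON body. The sketch assumes the DBFS API 2.0 `put` endpoint (`api/2.0/dbfs/put`), which takes `path`, base64-encoded `contents`, and `overwrite`. Airflow would render a Jinja template such as `{{ ds }}` in a templated field before `execute()` runs. The hypothetical `render_ds` helper only mimics that single substitution so the sketch runs without Jinja or Airflow. The file path and data are made up.

```python
import base64


def render_ds(template, ds):
    """Stand-in for Airflow's Jinja rendering; handles only the {{ ds }} macro."""
    return template.replace("{{ ds }}", ds)


def dbfs_put_payload(path_template, ds, data, overwrite=True):
    """Build a JSON body for the DBFS API 2.0 put endpoint."""
    return {
        "path": render_ds(path_template, ds),
        # The put endpoint expects file contents as a base64-encoded string.
        "contents": base64.b64encode(data).decode("ascii"),
        "overwrite": overwrite,
    }


payload = dbfs_put_payload(
    "/tmp/exports/{{ ds }}/report.csv",  # hypothetical templated DBFS path
    "2024-01-01",                        # Airflow would supply ds from the run context
    b"id,value\n1,42\n",
)
```

Inline `contents` is limited to 1 MB per request; larger files go through the streaming `create`, `add-block`, and `close` endpoints instead.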

#6 · about 2 minutes

Developing advanced operators for complex cluster management

For complex scenarios, custom operators can be built to create an all-purpose cluster, wait for it to be ready, submit multiple jobs, and then terminate it.
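The create, wait, submit, and terminate flow can be sketched as one function. It assumes an injected `call(method, endpoint, body)` adapter (hypothetical; in Airflow it could wrap the Databricks hook). It also assumes the Clusters API 2.0 `create`, `get`, and `delete` endpoints plus the Jobs API 2.1 `runs/submit` and `runs/get` endpoints. The runs are awaited before the cluster is shut down, and a `finally` block terminates the cluster even if a step fails. Cluster settings are placeholders, and the fake API at the bottom exists only to exercise the control flow.

```python
import itertools
import time
from typing import Callable, Dict, List

# Hypothetical adapter: call(method, endpoint, body) -> parsed JSON response.
ApiCall = Callable[[str, str, dict], dict]

CLUSTER_FAILURE_STATES = {"TERMINATING", "TERMINATED", "ERROR", "UNKNOWN"}
TERMINAL_RUN_STATES = {"TERMINATED", "SKIPPED", "INTERNAL_ERROR"}


def run_on_temporary_cluster(call: ApiCall, cluster_spec: dict, tasks: List[dict],
                             poll_seconds: float = 30.0,
                             sleep: Callable[[float], None] = time.sleep) -> Dict[int, str]:
    """Create a cluster, run each task on it, wait for all runs, then terminate it."""
    cluster_id = call("POST", "api/2.0/clusters/create", cluster_spec)["cluster_id"]
    try:
        # Poll until the cluster is RUNNING; PENDING, RESIZING, etc. keep waiting.
        while True:
            state = call("GET", "api/2.0/clusters/get", {"cluster_id": cluster_id})["state"]
            if state == "RUNNING":
                break
            if state in CLUSTER_FAILURE_STATES:
                raise RuntimeError(f"cluster {cluster_id} is in state {state}")
            sleep(poll_seconds)

        # Submit each task as its own one-time run on the shared cluster.
        run_ids = []
        for task in tasks:
            body = {"run_name": task["task_key"],
                    "tasks": [{**task, "existing_cluster_id": cluster_id}]}
            run_ids.append(call("POST", "api/2.1/jobs/runs/submit", body)["run_id"])

        # Wait for every run to reach a terminal lifecycle state.
        results: Dict[int, str] = {}
        pending = list(run_ids)
        while pending:
            for run_id in list(pending):
                state = call("GET", "api/2.1/jobs/runs/get", {"run_id": run_id})["state"]
                if state["life_cycle_state"] in TERMINAL_RUN_STATES:
                    results[run_id] = state.get("result_state", "UNKNOWN")
                    pending.remove(run_id)
            if pending:
                sleep(poll_seconds)
        return results
    finally:
        # clusters/delete terminates the cluster; it does not delete it permanently.
        call("POST", "api/2.0/clusters/delete", {"cluster_id": cluster_id})


# Fake API that exercises the control flow without a workspace.
def make_fake_api():
    log = []
    cluster_states = iter(["PENDING", "RUNNING"])
    run_counter = itertools.count(1)

    def call(method, endpoint, body):
        log.append((method, endpoint))
        if endpoint.endswith("clusters/create"):
            return {"cluster_id": "fake-cluster"}
        if endpoint.endswith("clusters/get"):
            return {"state": next(cluster_states)}
        if endpoint.endswith("runs/submit"):
            return {"run_id": next(run_counter)}
        if endpoint.endswith("runs/get"):
            return {"state": {"life_cycle_state": "TERMINATED", "result_state": "SUCCESS"}}
        return {}

    return call, log


call, log = make_fake_api()
spec = {"cluster_name": "airflow-temp", "spark_version": "13.3.x-scala2.12",
        "node_type_id": "i3.xlarge", "num_workers": 2, "autotermination_minutes": 30}
tasks = [{"task_key": "a", "notebook_task": {"notebook_path": "/Shared/a"}},
         {"task_key": "b", "notebook_task": {"notebook_path": "/Shared/b"}}]
results = run_on_temporary_cluster(call, spec, tasks, sleep=lambda s: None)
```

Setting `autotermination_minutes` in the placeholder spec is an extra safety net: if the Airflow worker dies before the `finally` block runs, the idle cluster still shuts itself down eventually.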

#7 · about 5 minutes

Answering questions on deployment, performance, and tooling

The discussion covers running Airflow in production environments like Kubernetes, optimizing Spark performance on Databricks, and comparing Airflow to Azure Data Factory.

#8 · about 10 minutes

Discussing preferred data stacks and career advice

The speaker shares insights on their preferred data stack for different use cases, offers advice for beginners learning Python, and describes a typical workday as a data engineer.

Related jobs

Jobs that call for the skills explored in this talk.

Featured Partners

Related Videos

39:04

39:04Python-Based Data Streaming Pipelines Within Minutes

Bobur Umurzokov

43:26

43:26PySpark - Combining Machine Learning & Big Data

Ayon Roy

46:43

46:43Convert batch code into streaming with Python

Bobur Umurzokov

43:57

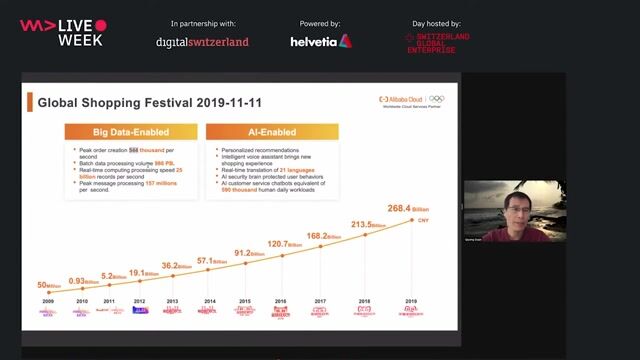

43:57Alibaba Big Data and Machine Learning Technology

Dr. Qiyang Duan

38:50

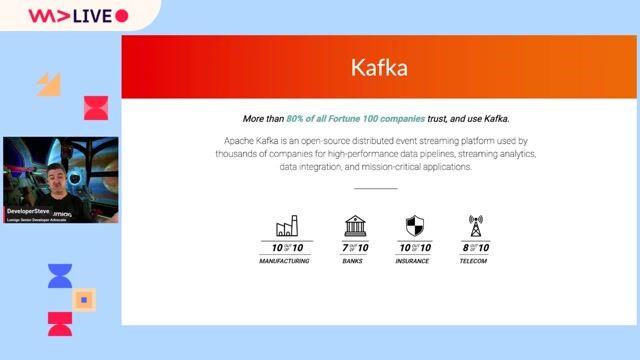

38:50Let's Get Started With Apache Kafka® for Python Developers

Lucia Cerchie

54:29

54:29Tips, Techniques, and Common Pitfalls Debugging Kafka

DeveloperSteve

50:38

50:38Python Data Visualization @ Deepnote (w/ PyViz overview)

Radovan Kavický

26:53

26:53Enjoying SQL data pipelines with dbt

Matthias Niehoff

From learning to earning

Jobs that call for the skills explored in this talk.

Presales Solutions Architect (DS/ML/AI)

Databricks, Inc.

Charing Cross, United Kingdom

€64K

Azure

Spark

Python

Machine Learning

+1

Data Engineer - Databricks

MP DATA

Canton of Boulogne-Billancourt-1, France

ETL

Spark

Continuous Integration

AI & Data Sr. Solutions Engineer (Emerging Enterprise, Startups & Digital Natives)

Databricks

Paris, France

Senior

Java

Scala

Spark

Python

Data analysis

+1

Sr. Product Adoption Architect (Data & AI)

Databricks, Inc.

Charing Cross, United Kingdom

€72K

Senior

Spark

Python