Ekaterina Sirazitdinova

Trends, Challenges and Best Practices for AI at the Edge

#1 about 4 minutes

Defining AI at the edge and its industry applications

AI at the edge involves running computations on devices near the data source, transforming industries like manufacturing, retail, and healthcare.

#2 about 1 minute

Understanding the unique constraints of edge devices

Edge devices differ from data centers due to their limited compute power, smaller storage capacity, and restricted power consumption.

#3 about 2 minutes

Overcoming the primary challenges of edge AI development

Developers must solve three main challenges: achieving high model accuracy, ensuring real-time throughput, and managing deployment at scale.

#4 about 1 minute

Using synthetic data to improve model accuracy

Synthetic data helps improve model accuracy by providing diverse training examples, covering rare corner cases, and reducing expensive manual labeling.

#5 about 3 minutes

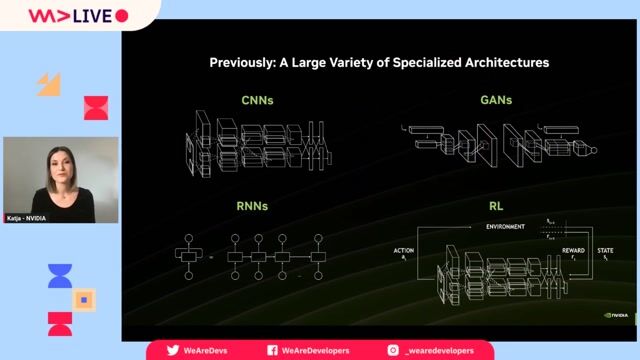

Optimizing models with quantization and network pruning

Inference speed and memory footprint can be improved by using quantization to reduce numerical precision and network pruning to remove unnecessary neurons.
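
As a rough illustration of the two ideas, the sketch below quantizes a list of weights to 8-bit integer codes and prunes the weakest neurons from a small layer. It is a minimal pure-Python sketch, not the method shown in the talk or the behavior of any NVIDIA tool: the function names, the symmetric per-tensor scaling scheme, and the L1-norm ranking criterion are all assumptions chosen for clarity.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float step per integer code
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


def prune_neurons(layer, keep):
    """Keep the `keep` neurons (rows) with the largest L1 norm, in original order.

    Each row holds the incoming weights of one neuron (an assumed layout).
    """
    norms = [sum(abs(w) for w in row) for row in layer]
    ranked = sorted(range(len(layer)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])
    return [layer[i] for i in kept]


# Hypothetical example values.
weights = [0.82, -0.05, 0.31, -1.27, 0.02, 0.64]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

layer = [[0.9, -0.4], [0.01, 0.02], [-0.6, 0.7], [0.03, -0.01]]
pruned_layer = prune_neurons(layer, keep=2)
```

The rounding error per weight is bounded by half the quantization step (`scale / 2`), which is the precision traded for a smaller, faster model. In practice a pruned or quantized network is usually re-evaluated, and often fine-tuned, to check that accuracy is still acceptable.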

#6 about 4 minutes

Advanced techniques for boosting inference performance

Further performance gains can be achieved through network graph optimizations, kernel auto-tuning, dynamic tensor memory, and multistream concurrent execution.
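
One common kind of network graph optimization is layer fusion, where adjacent operations are merged so that fewer steps run at inference time. The sketch below folds a batch-normalization step into the preceding linear layer and checks that the fused layer gives the same output. It is an assumed, simplified example in pure Python; it does not reproduce how any particular inference engine implements its optimizations, and all function names are hypothetical.

```python
import math


def linear(weights, bias, x):
    """y = W x + b for a single input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization with fixed statistics."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, g, b, m, s in zip(y, gamma, beta, mean, var)]


def fold_batch_norm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Return (W', b') such that W' x + b' equals batch_norm(W x + b)."""
    folded_w, folded_b = [], []
    for row, b, g, bt, m, s in zip(weights, bias, gamma, beta, mean, var):
        k = g / math.sqrt(s + eps)           # per-output scaling factor
        folded_w.append([w * k for w in row])
        folded_b.append((b - m) * k + bt)
    return folded_w, folded_b


# Hypothetical layer parameters and input.
W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.1, -0.3]
gamma, beta = [1.5, 0.8], [0.2, -0.1]
mean, var = [0.4, -0.2], [1.2, 0.6]
x = [1.0, 2.0]

two_step = batch_norm(linear(W, b, x), gamma, beta, mean, var)
W_f, b_f = fold_batch_norm(W, b, gamma, beta, mean, var)
fused = linear(W_f, b_f, x)
```

The fused layer does one multiply-add pass instead of two separate operations, which avoids an intermediate result being written and read back. The two outputs agree up to floating-point rounding.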

#7 about 1 minute

NVIDIA's platform for the end-to-end AI workflow

NVIDIA provides a comprehensive software platform to support the entire AI productization cycle, from data collection and training to optimization and deployment.

#8 about 2 minutes

Using Replicator and pre-trained models for development

NVIDIA Replicator generates synthetic data for training, while the NGC catalog offers a wide range of pre-trained models to accelerate development.

#9 about 2 minutes

Training and fine-tuning models with the TAO Toolkit

The NVIDIA TAO Toolkit is a zero-coding framework that simplifies training, fine-tuning, pruning, and quantization of AI models.

#10 about 2 minutes

Deploying models with TensorRT and Triton Inference Server

NVIDIA TensorRT optimizes models for high-performance inference, while Triton Inference Server provides a flexible solution for serving models at scale.

#11 about 2 minutes

Building video analytics pipelines with DeepStream SDK

The NVIDIA DeepStream SDK, built on GStreamer, enables the creation of efficient, GPU-accelerated video analytics pipelines with zero memory copies.

#12 about 2 minutes

Matching edge AI challenges with NVIDIA's solutions

A summary of how NVIDIA's tools like Replicator, TAO Toolkit, TensorRT, and DeepStream address the core challenges of accuracy, performance, and deployment.
