Mario-Leander Reimer

Fifty Shades of Kubernetes Autoscaling

#1 (about 4 minutes)

Why cloud-native systems require multi-layered elasticity

Modern applications need to be anti-fragile and support hyperscale, which requires elasticity at the workload level (horizontal/vertical) and the infrastructure level (cluster scaling).

#2 (about 5 minutes)

How metrics and events drive Kubernetes autoscaling decisions

Cluster-level autoscaling reacts to events, such as pods that cannot be scheduled. Workload autoscaling draws on a multi-layered metrics API that serves resource, custom, and external metrics.
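The layers of the metrics API correspond to separate aggregated API groups. The minimal Python sketch below maps each `autoscaling/v2` metric source type to the group an HPA reads it from. It is only a lookup table for illustration. Which adapter serves the custom and external groups depends on the cluster; a Prometheus adapter is one common choice.

```python
# Illustrative lookup: HPA (autoscaling/v2) metric source type -> metrics API group.
METRIC_API_GROUPS = {
    "Resource": "metrics.k8s.io",             # CPU/memory, typically served by Metrics Server
    "ContainerResource": "metrics.k8s.io",    # same data, scoped to a single container
    "Pods": "custom.metrics.k8s.io",          # per-pod custom metrics, served by an adapter
    "Object": "custom.metrics.k8s.io",        # a metric describing one Kubernetes object
    "External": "external.metrics.k8s.io",    # metrics from systems outside the cluster
}


def metrics_api_for(source_type: str) -> str:
    """Return the metrics API group an HPA queries for the given source type."""
    try:
        return METRIC_API_GROUPS[source_type]
    except KeyError:
        raise ValueError(f"unknown metric source type: {source_type!r}") from None
```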

#3 (about 5 minutes)

Implementing horizontal pod autoscaling with different metrics

The Horizontal Pod Autoscaler (HPA) can scale pods based on simple resource metrics like CPU, custom pod metrics, or external metrics from Prometheus.
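The HPA's core calculation is documented in Kubernetes as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The controller skips scaling while the ratio stays within a tolerance, which defaults to 0.1. The sketch below reproduces only that formula plus min/max clamping. It is a simplification: it leaves out the stabilization windows, missing-metric handling, and pod readiness checks that the real controller applies. The parameter names are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA replica calculation for a single metric."""
    ratio = current_value / target_value
    # Inside the tolerance band the HPA leaves the replica count unchanged.
    if abs(1.0 - ratio) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so ceil(4.5) = 5 replicas.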

#4 (about 2 minutes)

Using the vertical pod autoscaler for right-sizing workloads

The Vertical Pod Autoscaler (VPA) can automatically adjust pod resources, but its recommendation mode is most useful for determining optimal CPU and memory settings.
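A VPA recommendation is, roughly, a high percentile of observed usage plus a safety margin. The sketch below makes that idea concrete with a plain nearest-rank percentile over raw samples. This is an assumption-laden simplification: the actual VPA recommender works from exponentially decaying histograms and treats CPU and memory differently. The 90th percentile and 15% margin used here are illustrative defaults, not values taken from the talk.

```python
import math


def recommend_request(samples: list, percentile: float = 0.9,
                      safety_margin: float = 0.15) -> float:
    """Simplified right-sizing: nearest-rank usage percentile plus a margin.

    percentile and safety_margin are illustrative assumptions.
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank percentile: the smallest sample covering `percentile` of the data.
    rank = max(1, math.ceil(percentile * len(ordered)))
    return ordered[rank - 1] * (1 + safety_margin)
```

Fed ten CPU samples of 100m to 1000m, the sketch picks 900m as the 90th percentile and recommends about 1035m.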

#5 (about 4 minutes)

How the default cluster autoscaler works on GKE

The default cluster autoscaler automatically provisions new nodes when it detects unschedulable pods due to resource constraints, as demonstrated on Google Kubernetes Engine.
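When pods stay unschedulable, the cluster autoscaler has to estimate how many new nodes would fit them. The sketch below approximates that estimate with first-fit-decreasing bin packing of CPU and memory requests onto empty nodes of one hypothetical node shape. The real autoscaler simulates the scheduler against node group templates. That simulation also accounts for allocatable capacity, taints, and affinity, none of which this sketch models.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu_m: int   # CPU in millicores
    mem_mi: int  # memory in MiB


def nodes_needed(pending: list, node: Resources) -> int:
    """First-fit-decreasing estimate of new nodes needed for pending pods."""
    free = []  # remaining capacity on each hypothetical new node
    for pod in sorted(pending, key=lambda p: (p.cpu_m, p.mem_mi), reverse=True):
        if pod.cpu_m > node.cpu_m or pod.mem_mi > node.mem_mi:
            raise ValueError("pod does not fit on an empty node of this shape")
        for cap in free:
            if pod.cpu_m <= cap.cpu_m and pod.mem_mi <= cap.mem_mi:
                cap.cpu_m -= pod.cpu_m
                cap.mem_mi -= pod.mem_mi
                break
        else:
            # No existing new node has room: provision another one.
            free.append(Resources(node.cpu_m - pod.cpu_m, node.mem_mi - pod.mem_mi))
    return len(free)
```

Five pending pods requesting 1500m each fit two per 4-core node, so the estimate is three new nodes.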

#6 (about 5 minutes)

Using Karpenter for fast and flexible cluster scaling on AWS

Karpenter is a fast and flexible cluster autoscaler for Amazon EKS. It can reduce costs by running scaled-out nodes on spot instances.
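Rather than scaling a fixed node group, Karpenter chooses a node to launch for the pending workload. A NodePool can permit both spot and on-demand capacity through the `karpenter.sh/capacity-type` requirement. The sketch below reduces that choice to "cheapest offering that fits." The instance names and costs in `CATALOG` are hypothetical placeholders, not EC2 data. Real Karpenter also considers many instance types at once, availability, and interruption risk.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Offering:
    instance_type: str   # hypothetical name, not a real EC2 type
    capacity_type: str   # "spot" or "on-demand"
    cpu_m: int
    mem_mi: int
    hourly_cost: float   # arbitrary illustrative units


# Hypothetical catalog for illustration only.
CATALOG = [
    Offering("small", "on-demand", 2000, 8192, 1.0),
    Offering("small", "spot", 2000, 8192, 0.4),
    Offering("large", "on-demand", 8000, 32768, 4.0),
    Offering("large", "spot", 8000, 32768, 1.5),
]


def cheapest_offering(offerings: Sequence[Offering], cpu_m: int, mem_mi: int,
                      allowed: Sequence[str] = ("spot", "on-demand")) -> Optional[Offering]:
    """Pick the cheapest allowed offering that can hold the requested resources."""
    candidates = [o for o in offerings
                  if o.capacity_type in allowed
                  and o.cpu_m >= cpu_m and o.mem_mi >= mem_mi]
    if not candidates:
        return None
    return min(candidates, key=lambda o: o.hourly_cost)
```

With both capacity types allowed, a 3-core request lands on the hypothetical "large" spot offering. Restricting `allowed` to `("on-demand",)` mimics a NodePool that excludes spot.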

#7 (about 1 minute)

Exploring KEDA for advanced event-driven autoscaling

KEDA (Kubernetes Event-driven Autoscaling) enables scaling workloads, including to zero, based on events from various sources like message queues or databases.
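KEDA handles the 0 ↔ 1 transition itself and hands the 1 ↔ N range to an HPA that it creates. The sketch below combines both phases for a queue-based trigger. While the queue length is at or below the activation threshold, the workload stays at the minimum replica count, which can be zero. Once the trigger is active, the sketch targets a fixed number of messages per replica. It assumes KEDA's default bounds of 0 and 100 replicas, and the function and parameter names are hypothetical.

```python
import math


def keda_replicas(queue_length: int, target_per_replica: int,
                  activation_threshold: int = 0,
                  min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Simplified event-driven scaling for a queue trigger (hypothetical helper)."""
    if queue_length <= activation_threshold:
        # Trigger inactive: scale down to minReplicaCount, possibly zero.
        return min_replicas
    # Trigger active: average-value target, like the HPA KEDA manages.
    desired = math.ceil(queue_length / target_per_replica)
    return min(max_replicas, max(min_replicas, desired))
```

An empty queue yields zero replicas. Twelve messages at five per replica yield three.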

#8 (about 1 minute)

Summary of Kubernetes autoscaling tools and techniques

A recap of the essential autoscaling components: the Metrics Server, HPA, VPA, cluster autoscalers such as Karpenter, KEDA, and the descheduler for rebalancing pods across the cluster.

#9 (about 2 minutes)

Q&A on autoscaler reliability and graceful shutdown

Discussion on the production-readiness of autoscalers, the importance of observability, and how to achieve graceful pod termination during scale-down events.
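When a scale-down removes a pod, Kubernetes sends SIGTERM to the container's main process. If the process is still running after `terminationGracePeriodSeconds` (30 seconds by default), it follows up with SIGKILL. The minimal Python sketch below shows the usual pattern for shutting down gracefully within that window: stop taking new work, but finish the job in progress. The `GracefulWorker` class is hypothetical. Python only installs signal handlers from the main thread, so the sketch assumes it runs there.

```python
import signal
import threading


class GracefulWorker:
    """Stops pulling new jobs after SIGTERM; the current job always completes."""

    def __init__(self) -> None:
        self.shutting_down = threading.Event()
        # Must be called from the main thread.
        signal.signal(signal.SIGTERM, self._on_sigterm)

    def _on_sigterm(self, signum, frame) -> None:
        # Only set a flag here; the work loop decides when to stop.
        self.shutting_down.set()

    def run(self, jobs) -> list:
        results = []
        for job in jobs:
            if self.shutting_down.is_set():
                break  # stop taking new work; remaining jobs are left unprocessed
            results.append(job())  # let the in-flight job finish completely
        return results
```

If SIGTERM arrives during the second of three jobs, the worker finishes that job and then stops before starting the third.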

Related jobs

Jobs that call for the skills explored in this talk.

Team Lead DevOps (m/w/d)

Rhein-Main-Verkehrsverbund Servicegesellschaft mbH

Frankfurt am Main, Germany

Senior

Featured Partners

Related Videos

28:55

28:55Serverless Architectures with Spring Cloud Functions and Knative

Ingo Weichsel

21:43

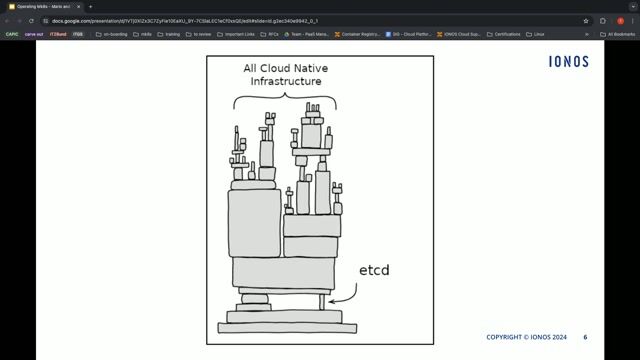

21:43Operating etcd for Managed Kubernetes

Mario Valderrama

42:45

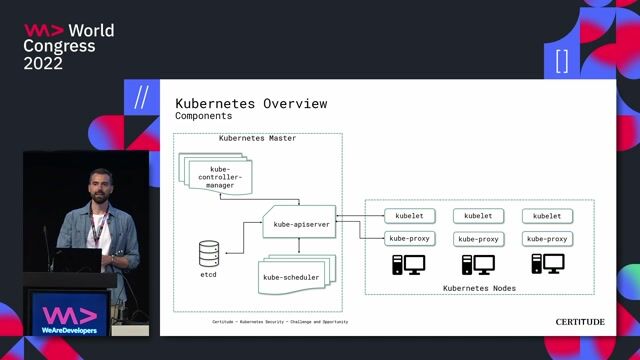

42:45Kubernetes Security - Challenge and Opportunity

Marc Nimmerrichter

27:52

27:52Chaos in Containers - Unleashing Resilience

Maish Saidel-Keesing

57:24

57:24Mastering Kubernetes – Beginner Edition

Hannes Norbert Göring

28:12

28:12Scaling: from 0 to 20 million users

Josip Stuhli

39:20

39:20Developing locally with Kubernetes - a Guide and Best Practices

Dan Erez

28:03

28:03Containers in the cloud - State of the Art in 2022

Federico Fregosi

From learning to earning

Jobs that call for the skills explored in this talk.

DevOps Engineer – Kubernetes & Cloud (m/w/d)

epostbox epb GmbH

Berlin, Germany

Intermediate

Senior

DevOps

Kubernetes

Cloud (AWS/Google/Azure)

Senior Systems/DevOps Developer (f/m/d)

Bonial International GmbH

Berlin, Germany

Senior

Python

Terraform

Kubernetes

Elasticsearch

Amazon Web Services (AWS)

Senior Platform Engineer AI Services (w/m/d)

BWI GmbH

Bonn, Germany

€90-110K

Senior

Python

Gitlab

Kubernetes

Senior Machine Learning Engineer (f/m/d)

MARKT-PILOT GmbH

Stuttgart, Germany

Remote

€75-90K

Senior

Python

Docker

Machine Learning

Cloud Engineer (AWS - Kubernetes)

Keepler Data Tech

Municipality of Madrid, Spain

Remote

€39-46K

Intermediate

Go

Bash

Scrum

+9

DataCenter DevOps Specialist - Schwerpunkt Kubernetes & Automatisierung (m/w/d)

AKDB

Augsburg, Germany

Bash

YAML

DevOps

Python

Ansible

+6

DevOps / Systems Engineer mit Erfahrung in Kubernetes (m/w/d) (Main)

Cloud Solutions

Frankfurt am Main, Germany

Go

Bash

Rust

Linux

Shell

+6