Welcome to this issue of the WeAreDevelopers Live Talk series. This article recaps an interesting talk by Sefik Ilkin Serengil, who introduced the audience to his Python package DeepFace.

About the speaker:

Sefik Ilkin Serengil received his MSc in Computer Science from Galatasaray University in 2011. He has been working as a software engineer since 2010 and is currently employed as a software engineer at Vorboss. He recently spoke at the WeAreDevelopers Live Topic Day.

What is DeepFace?

In this talk, Sefik presents DeepFace, his own Python package for facial recognition. As the name suggests, it offers out-of-the-box functionality for facial recognition, which enables people without in-depth knowledge of the underlying processes to use it with just a few lines of code. It is also fully open source and permissively licensed; in other words, you are free to use it for both personal and commercial purposes.

As it's available on the Python Package Index (PyPI), the easiest way to install the package is to run `pip install deepface`. Once it is installed, you can import it and pass the parameters you need.

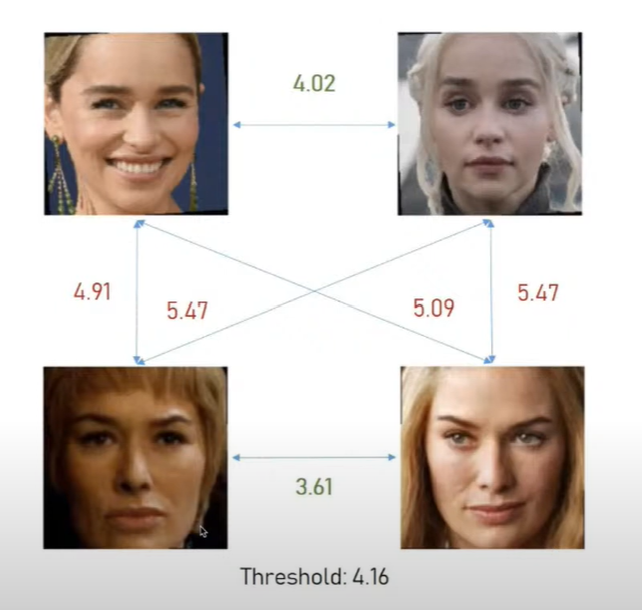

Verification, for example, is a common task. It expects a pair of face images given as image paths. With this method, you can compare two images and get a decision from the package on whether they show the same person or different persons. You also get additional information such as the distance and the threshold.
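To make the shape of a verification result concrete, here is a minimal sketch that works on two face embeddings that are assumed to be already computed. The function `verify_embeddings`, the toy three-dimensional vectors, and the threshold of 0.5 are hypothetical illustrations, not DeepFace's API or its default values; the sketch only mirrors the decision, distance, and threshold that the talk describes.

```python
import math


def euclidean_distance(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify_embeddings(emb1, emb2, threshold):
    """Hypothetical verification step: same person if distance <= threshold."""
    distance = euclidean_distance(emb1, emb2)
    return {
        "verified": distance <= threshold,
        "distance": distance,
        "threshold": threshold,
    }


if __name__ == "__main__":
    # Toy embeddings; real models output vectors with many more dimensions.
    alice_1 = [0.10, 0.80, 0.30]
    alice_2 = [0.12, 0.79, 0.33]
    bob = [0.90, 0.10, 0.50]
    print(verify_embeddings(alice_1, alice_2, threshold=0.5))
    print(verify_embeddings(alice_1, bob, threshold=0.5))
```

In the library itself, detection, representation, and this comparison all run behind a single call on two image paths; the sketch isolates only the final decision.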

DeepFace includes many state-of-the-art models for both face recognition and face detection. You can switch between them with an argument; if you don't specify one, DeepFace uses VGG-Face for recognition and OpenCV for detection by default.

To look up a specific identity in a database, you need face recognition rather than verification, and recognition requires running face verification many times. This can become a problem when the database grows to millions or billions of images. Luckily, you don't have to write a for loop that verifies each image manually, because DeepFace handles that in the background.
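The idea of recognition as repeated verification can be sketched as a linear scan over stored embeddings. This is an illustration under simple assumptions (an in-memory list of precomputed embeddings and a fixed threshold); `find_identity` is a hypothetical name, and DeepFace's own `find` function takes care of this for you. The loop makes the cost visible: one comparison per stored image, which is exactly what becomes a problem at millions or billions of images.

```python
import math


def euclidean_distance(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_identity(query, database, threshold):
    """Run one verification per stored embedding and keep the matches.

    database is a list of (identity, embedding) pairs, so one identity can
    appear with several images. Matches are returned closest first.
    """
    matches = []
    for identity, embedding in database:  # one comparison per stored image
        distance = euclidean_distance(query, embedding)
        if distance <= threshold:
            matches.append((identity, distance))
    matches.sort(key=lambda match: match[1])
    return matches


if __name__ == "__main__":
    database = [
        ("alice", [0.10, 0.80, 0.30]),
        ("alice", [0.12, 0.79, 0.33]),
        ("bob", [0.90, 0.10, 0.50]),
    ]
    print(find_identity([0.11, 0.80, 0.31], database, threshold=0.5))
```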

Under the hood, DeepFace runs five stages to make facial recognition work: detect, align, normalize, represent, and verify. We will go through each stage one by one.

Detect

The first task is face detection. In this stage, the algorithm tries to find the facial area in the given picture. For this, DeepFace offers a portfolio of 5 cutting-edge detectors: OpenCV, SSD, Dlib, RetinaFace, and MTCNN. You can choose whichever you like, but Sefik advises using RetinaFace or MTCNN if your task requires high confidence, because they are probably the most powerful models. As they are also quite slow, you should consider OpenCV or SSD when speed matters more.

Because the models expect input images of the same size, DeepFace automatically pads each face with black pixels instead of stretching it, which avoids deformation.
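The padding arithmetic can be sketched as follows: scale the face with a single factor so it fits the target size, then fill the remaining space with black borders. `letterbox_plan` and the 224x224 target are assumptions for illustration, not DeepFace internals. The function only computes sizes; the actual resizing and padding would be done by an image library, for example OpenCV's `cv2.resize` and `cv2.copyMakeBorder`.

```python
def letterbox_plan(height, width, target_height, target_width):
    """Return the resized (height, width) and the black padding
    (top, bottom, left, right) that fit an image into the target size
    without distorting it."""
    if min(height, width, target_height, target_width) <= 0:
        raise ValueError("all sizes must be positive")
    # One scale factor for both axes keeps the aspect ratio unchanged.
    factor = min(target_height / height, target_width / width)
    new_height = round(height * factor)
    new_width = round(width * factor)
    # Split the leftover space into (roughly) equal black borders.
    pad_h = target_height - new_height
    pad_w = target_width - new_width
    top, left = pad_h // 2, pad_w // 2
    bottom, right = pad_h - top, pad_w - left
    return (new_height, new_width), (top, bottom, left, right)


if __name__ == "__main__":
    # A tall 300x150 face crop fitted into a hypothetical 224x224 input.
    print(letterbox_plan(300, 150, 224, 224))
```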

Align

Experiments have shown that proper alignment increases face recognition accuracy by more than one percent. That may not sound like much, but since models already reach human-level accuracy, even small improvements like this make a difference.

It comes in handy that modern detectors can also find facial landmarks such as the eyes. DeepFace uses these landmarks and rotates the image until the line between the two eyes is horizontal.
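The rotation angle comes from basic trigonometry on the two eye coordinates. This is a minimal sketch, assuming the detector has already returned (x, y) pixel positions for both eyes; `eye_alignment_angle` and `rotate_point` are hypothetical helpers. Rotating the actual pixels would be left to an image library, whose sign convention should be checked because image y axes point downward.

```python
import math


def eye_alignment_angle(left_eye, right_eye):
    """Angle (degrees) of the line from left_eye to right_eye.

    Both points are (x, y) pixel coordinates. A result of 0 means the eyes
    already lie on a horizontal line.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_degrees):
    """Rotate a point around center by angle_degrees in the same (x, y) frame."""
    theta = math.radians(angle_degrees)
    x, y = point[0] - center[0], point[1] - center[1]
    return (
        center[0] + x * math.cos(theta) - y * math.sin(theta),
        center[1] + x * math.sin(theta) + y * math.cos(theta),
    )


if __name__ == "__main__":
    left, right = (100.0, 120.0), (160.0, 140.0)  # tilted face
    angle = eye_alignment_angle(left, right)
    # Rotating both eyes by the negative angle around their midpoint levels them.
    mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    new_left = rotate_point(left, mid, -angle)
    new_right = rotate_point(right, mid, -angle)
    print(round(angle, 2), abs(new_left[1] - new_right[1]) < 1e-9)
```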

Normalize

Because faces are detected within a rectangular area, the crop may also contain some noise, such as parts of the background. Normalization gets rid of this so that only the facial information is kept.

Represent

This stage is very important because this is where deep neural networks come in, although they are used a bit differently than usual. Convolutional neural networks are especially good at detecting patterns in visual data, so face recognition is mostly based on them.

Imagine you have a database of 100 identities, each with more than one facial image. A classifier trained on them would have one output per identity.

What if a person who doesn't appear in your database gets tested? A classifier would still force that face into one of the known identities. Instead, the network is used to turn each face into a vector representation, and a new person simply gets a vector of their own. According to Sefik, this is how you get a generalized model, and you don't need to retrain it anymore.

In contrast to the 5 detectors, DeepFace supports 7 recognition models: VGG-Face, ArcFace, OpenFace, DeepFace, DeepID, Dlib, and FaceNet.

These are deep neural networks with different architectures, and they have already reached and surpassed human-level accuracy: humans achieve only about 97.5 percent accuracy, and these models score higher.

Verify

Sefik begins his explanation with a quote by the famous Turkish poet Can Yücel:

The longest distance is not to Africa, or China, or India. Not to the planets or the stars that sparkle in the night. The longest distance is between two minds that don’t understand each other...

In the previous representation stage, facial images are turned into vectors, and we expect the embeddings of the same person to be more similar to each other than to those of different persons. This similarity can be measured as a distance, for example with the Euclidean distance formula.
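Written out for two $n$-dimensional embeddings $\mathbf{a}$ and $\mathbf{b}$ from the representation stage and a threshold $\tau$ (notation introduced here for illustration), the Euclidean distance and the resulting decision are:

$$
d(\mathbf{a}, \mathbf{b}) = \lVert \mathbf{a} - \mathbf{b} \rVert_2 = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2},
\qquad
\text{same person} \iff d(\mathbf{a}, \mathbf{b}) \le \tau
$$

For example, with $\mathbf{a} = (0, 0)$ and $\mathbf{b} = (3, 4)$, the distance is $\sqrt{9 + 16} = 5$, so under this convention the pair counts as the same person only if $\tau \ge 5$.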

Based on a threshold that you choose, the algorithm decides whether a distance means "same person" or "different person", as Sefik showed with a graph in the talk.

A second picture from the talk showed how much difference a good threshold makes in recognition.
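The talk does not go into how a good threshold is found. One common approach, sketched here as an assumption rather than as DeepFace's procedure, is to measure distances for image pairs whose labels are known and keep the threshold that classifies the most pairs correctly. `best_threshold` and the sample distances are hypothetical.

```python
def best_threshold(same_distances, different_distances):
    """Pick, among the observed distances, the threshold with the best accuracy.

    same_distances: distances between embeddings of the same person.
    different_distances: distances between embeddings of different people.
    A pair counts as 'same person' when its distance is <= threshold.
    Returns (threshold, accuracy).
    """
    total = len(same_distances) + len(different_distances)
    if total == 0:
        raise ValueError("need at least one labeled pair")
    best = (None, -1.0)
    # Try each observed distance as a candidate threshold.
    for candidate in sorted(set(same_distances) | set(different_distances)):
        correct = sum(d <= candidate for d in same_distances)
        correct += sum(d > candidate for d in different_distances)
        accuracy = correct / total
        if accuracy > best[1]:
            best = (candidate, accuracy)
    return best


if __name__ == "__main__":
    same = [0.20, 0.30, 0.35, 0.60]
    different = [0.50, 0.70, 0.80, 0.90]
    print(best_threshold(same, different))
```

Ties go to the smallest candidate, because only a strictly better accuracy replaces the current best. In practice you would tune the threshold on one set of pairs and check it on a separate one, so the reported accuracy is not overly optimistic.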

Big Data

Afterward, Sefik raises the question of what happens when you work with big data. For example, how does Google return reverse image search results in seconds? Is it because of their costly, superior hardware? Sefik answers with another quote, this time from the photojournalist Ara Güler:

If the best camera had taken the best photograph, then the one would be the best novelist who has the best typewriter.

Similarly, fast query times are not simply a result of the hardware that tech giants have.

To achieve good query times, there are a few things to take into account. First, you can group the items in your database by demographic information, because DeepFace can also analyze attributes such as gender, emotion, and ethnicity. By storing this information in your database, you can shrink the search space when looking for an identity.

With billions of images, however, this won't be enough. This is where approximate nearest neighbour (ANN) search comes into play. It reduces the time complexity dramatically, which is why companies like Google and Meta use it. Popular tools include Spotify's Annoy, Facebook's Faiss, NMSLIB, Elasticsearch, Milvus, and Pinecone.
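To show the core idea behind approximate nearest neighbour search, here is a toy index based on random-hyperplane locality-sensitive hashing (LSH). This is a swapped-in, simplified technique chosen for illustration; it is not how Annoy, Faiss, or the other tools listed above work internally, and they are far more optimized. The class `RandomHyperplaneLSH` and its parameters are hypothetical. Each vector is hashed by the side of each random hyperplane it falls on, so a query only searches its own bucket; the trade-off is that a true nearest neighbour in another bucket can be missed.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance (enough for ranking, no square root needed)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class RandomHyperplaneLSH:
    """Toy approximate nearest neighbour index using random-hyperplane LSH.

    Each embedding gets a key with one bit per random hyperplane: True when
    the vector lies on the plane's positive side. Vectors pointing in similar
    directions tend to share a key, so a query is compared only with the
    entries in its own bucket instead of the whole database.
    """

    def __init__(self, dim, num_planes=8, seed=0):
        rng = random.Random(seed)
        self.planes = [
            [rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)
        ]
        self.buckets = {}

    def _key(self, vector):
        return tuple(
            sum(p * v for p, v in zip(plane, vector)) >= 0.0 for plane in self.planes
        )

    def add(self, identity, vector):
        self.buckets.setdefault(self._key(vector), []).append((identity, vector))

    def query(self, vector, k=1):
        """Return up to k nearest entries from the query's bucket.

        Approximate: a true neighbour that hashed to another bucket is missed.
        """
        candidates = self.buckets.get(self._key(vector), [])
        ranked = sorted(candidates, key=lambda entry: squared_distance(entry[1], vector))
        return ranked[:k]


if __name__ == "__main__":
    index = RandomHyperplaneLSH(dim=3, num_planes=4, seed=42)
    index.add("alice", [0.10, 0.80, 0.30])
    index.add("bob", [-0.90, -0.10, -0.50])
    print(index.query([0.10, 0.80, 0.30]))
```

More hyperplanes make buckets smaller and queries faster, but raise the chance of missing a neighbour; LSH schemes commonly offset this by using several hash tables.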

Summary

- DeepFace is a very lightweight framework in which you can use any of its functionalities with a single line of code. You also don't need any background knowledge of face recognition, as almost everything is handled in the background.

- DeepFace is very easy to install. This is an advantage over some other popular frameworks, most of which need C and C++ dependencies to be installed and compiled. Since DeepFace is mainly based on TensorFlow, you shouldn't have any trouble with that either.

- It contains many state-of-the-art face recognition and face detection models. The list has grown since the first commit and will keep growing in the future.

- It's open source.

- It has a highly committed community with many contributors.

Thank you for reading this article. If you are interested in hearing Sefik himself, you can do so by following this link: Free Developer Talks & Coding Tutorials | WeAreDevelopers.
