Kevin Dubois

Infusing Generative AI in your Java Apps with LangChain4j

#1 about 3 minutes

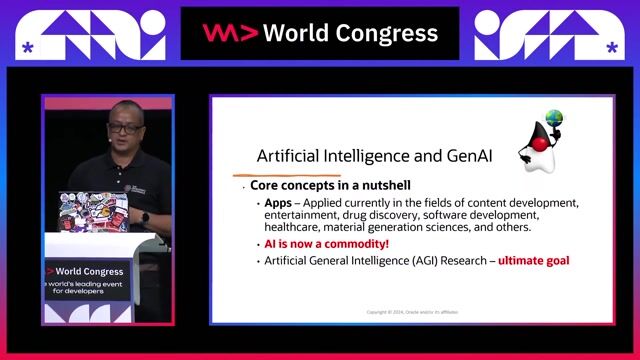

Integrating generative AI into Java applications with LangChain4j

LangChain4j simplifies consuming AI model APIs for Java developers, avoiding the need for deep data science expertise.

#2 about 3 minutes

Creating a new Quarkus project with LangChain4j

Use the Quarkus CLI to bootstrap a new Java application and add the necessary LangChain4j dependency for OpenAI integration.

#3 about 2 minutes

Using prompts and AI services in LangChain4j

Define AI interactions using the @RegisterAiService annotation, system messages for context, and user messages with dynamic placeholders.
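The placeholder mechanism can be illustrated without the framework. The following is a minimal plain-Java sketch, not the LangChain4j implementation: the class `PromptTemplateSketch`, the `render` helper, the `{topic}` placeholder, and the message texts are all hypothetical names chosen for illustration. It only shows the idea of a fixed system message combined with a user message whose placeholders are filled in at call time.

```java
import java.util.Map;

// Plain-Java illustration of a user message with dynamic placeholders.
// This is NOT the LangChain4j API; every name here is hypothetical.
class PromptTemplateSketch {

    // Replaces each {name} placeholder with the matching value from the map.
    static String render(String template, Map<String, String> values) {
        String result = template;
        for (Map.Entry<String, String> entry : values.entrySet()) {
            result = result.replace("{" + entry.getKey() + "}", entry.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        // The system message sets the context; the user message carries the request.
        String systemMessage = "You are a professional poet.";
        String userMessage = render("Write a haiku about {topic}.", Map.of("topic", "Java"));
        System.out.println(systemMessage);
        System.out.println(userMessage);
    }
}
```

In the framework itself, the annotated interface takes the place of this hand-written substitution, so application code only declares the messages and calls a method.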

#4 about 2 minutes

Managing conversational context with memory

LangChain4j uses memory to retain context across multiple calls, with the @MemoryId annotation enabling parallel conversations.
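To make the role of a memory ID concrete, here is a minimal plain-Java sketch, assuming that memory is simply a message history stored per ID. The class `ChatMemorySketch` and its methods are hypothetical and do not reproduce how LangChain4j stores memory; they only show why a per-conversation key keeps parallel conversations from mixing.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of per-conversation memory keyed by an ID.
// This is NOT the LangChain4j API; every name here is hypothetical.
class ChatMemorySketch {

    // One message history per memory ID, so conversations stay separate.
    private final Map<String, List<String>> conversations = new HashMap<>();

    // Appends a message to the history that belongs to the given memory ID.
    void add(String memoryId, String message) {
        conversations.computeIfAbsent(memoryId, id -> new ArrayList<>()).add(message);
    }

    // Returns a copy of the history for the given memory ID (empty if unknown).
    List<String> history(String memoryId) {
        return new ArrayList<>(conversations.getOrDefault(memoryId, List.of()));
    }

    public static void main(String[] args) {
        ChatMemorySketch memory = new ChatMemorySketch();
        memory.add("alice", "My name is Alice.");
        memory.add("bob", "My name is Bob.");
        memory.add("alice", "What is my name?");
        System.out.println(memory.history("alice"));
        System.out.println(memory.history("bob"));
    }
}
```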

#5 about 2 minutes

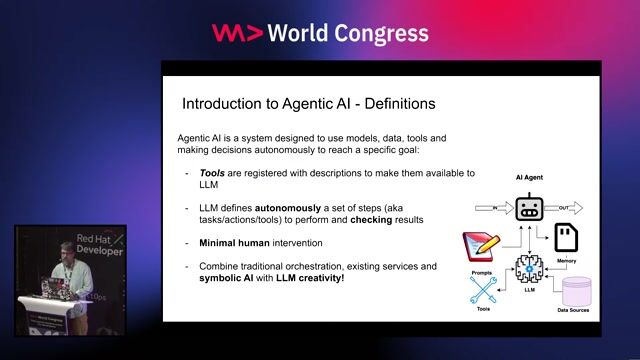

Connecting AI models to business logic with tools

Use the @Tool annotation to expose Java methods to the AI model, allowing it to execute business logic like sending an email.
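The idea of exposing annotated methods to a caller that picks them by name can be sketched in plain Java with reflection. This is an illustration under stated assumptions, not the framework's mechanism: `DemoTool` is a local stand-in annotation defined only for this example (it is not the LangChain4j `@Tool`), and `EmailTools`, `sendEmail`, and `ToolDispatchSketch` are hypothetical names. The "email" is only recorded in a list.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Local stand-in annotation for this sketch only; NOT the LangChain4j @Tool.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface DemoTool {
    String value(); // human-readable description of what the tool does
}

// Hypothetical business logic; the "email" is just recorded in a list.
class EmailTools {
    final List<String> sent = new ArrayList<>();

    @DemoTool("Send the given content by email")
    public String sendEmail(String content) {
        sent.add(content);
        return "sent";
    }

    // Not annotated, so the dispatcher below refuses to call it.
    public String internalHelper(String value) {
        return value;
    }
}

class ToolDispatchSketch {

    // Invokes the annotated method with the requested name, as a model-driven caller might.
    static Object call(Object target, String toolName, String argument) {
        for (Method method : target.getClass().getDeclaredMethods()) {
            if (method.isAnnotationPresent(DemoTool.class) && method.getName().equals(toolName)) {
                try {
                    return method.invoke(target, argument);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Tool call failed: " + toolName, e);
                }
            }
        }
        throw new IllegalArgumentException("No tool named " + toolName);
    }

    public static void main(String[] args) {
        EmailTools tools = new EmailTools();
        System.out.println(call(tools, "sendEmail", "An old silent pond..."));
        System.out.println(tools.sent);
    }
}
```

Restricting dispatch to annotated methods is the design point: only methods that were explicitly marked become callable, while the rest of the class stays private to the application.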

#6 about 5 minutes

Live demo of prompts, tools, and the Dev UI

A practical demonstration shows how to generate a haiku using a prompt and then use a custom tool to send it via email, verified with Mailpit.

#7 about 3 minutes

Providing custom knowledge with retrieval-augmented generation (RAG)

Enhance LLM responses with your own business data by using an embedding store or Quarkus's simplified 'Easy RAG' feature.
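The retrieve-then-augment flow behind RAG can be sketched in plain Java. Note the substitution: a real setup compares embeddings in an embedding store, whereas this sketch uses simple word overlap as a stand-in for similarity. The class `RagSketch`, its methods, the prompt wording, and the sample text segments are all invented for illustration.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Plain-Java illustration of retrieve-then-augment.
// Word overlap stands in for embedding similarity; every name here is hypothetical.
class RagSketch {

    // Splits text into a set of lower-cased words.
    static Set<String> words(String text) {
        return new HashSet<>(Arrays.asList(text.toLowerCase(Locale.ROOT).split("\\W+")));
    }

    // Counts the distinct words that two texts share.
    static int overlap(String a, String b) {
        Set<String> shared = words(a);
        shared.retainAll(words(b));
        return shared.size();
    }

    // Returns the segment sharing the most words with the question (first one wins ties).
    static String retrieve(String question, List<String> segments) {
        String best = segments.get(0);
        for (String segment : segments) {
            if (overlap(question, segment) > overlap(question, best)) {
                best = segment;
            }
        }
        return best;
    }

    // Builds the prompt that would be sent to the model: retrieved context plus the question.
    static String augment(String question, List<String> segments) {
        return "Answer using this context:\n" + retrieve(question, segments)
                + "\n\nQuestion: " + question;
    }

    public static void main(String[] args) {
        // Invented sample segments, standing in for chunks of a business document.
        List<String> segments = List.of(
                "Payment is due at pickup.",
                "Bookings can be cancelled up to 7 days before the start date.");
        System.out.println(augment("Can I cancel my booking?", segments));
    }
}
```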

#8 about 6 minutes

Building a chatbot with a custom knowledge base

A chatbot demo uses a terms of service document via RAG to correctly enforce a business rule for booking cancellations.

#9 about 2 minutes

Using local models and implementing fault tolerance

Run LLMs on your local machine with Podman AI Lab and make your application resilient to failures using SmallRye Fault Tolerance annotations.

#10 about 4 minutes

Demonstrating fault tolerance with a local LLM

A final demo shows an application calling a locally-run model and triggering a fallback mechanism when the model service is unavailable.
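The fallback behavior can be illustrated in plain Java. This is a minimal sketch of the pattern only, written by hand rather than with SmallRye Fault Tolerance annotations; the class `FallbackSketch`, the method `callWithFallback`, and the messages are hypothetical.

```java
import java.util.function.Supplier;

// Plain-Java illustration of a fallback when a model call fails.
// This is NOT SmallRye Fault Tolerance; every name here is hypothetical.
class FallbackSketch {

    // Tries the primary call and returns the fallback result if it throws.
    static String callWithFallback(Supplier<String> primary, Supplier<String> fallback) {
        try {
            return primary.get();
        } catch (RuntimeException e) {
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        // Simulates a model service that is unavailable.
        Supplier<String> unavailableModel = () -> {
            throw new IllegalStateException("model service unavailable");
        };
        String answer = callWithFallback(unavailableModel,
                () -> "The assistant is temporarily unavailable. Please try again later.");
        System.out.println(answer);
    }
}
```

With an annotation-based approach the try/catch disappears from application code, and the fallback is declared next to the method that calls the model.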



