Kevin Klues

From foundation model to hosted AI solution in minutes

#1 about 3 minutes

Introducing the IONOS AI Model Hub for easy inference

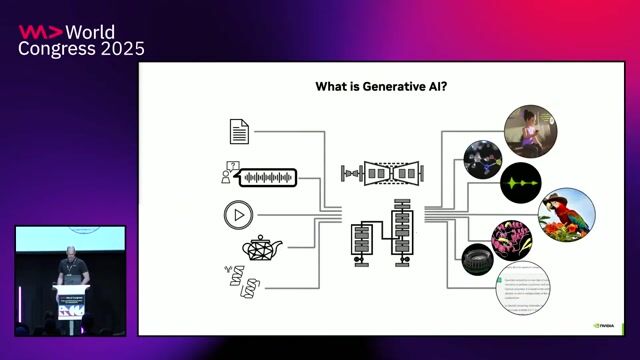

The IONOS AI Model Hub provides a simple REST API for accessing open-source foundation models and a vector database for RAG.
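As a rough illustration of what calling such a REST API can look like, the sketch below builds (but does not send) a JSON POST request for a chat-style completion. The base URL, model name, endpoint path, and request fields are all placeholders chosen for this example, assuming an OpenAI-style request shape; none of them come from the talk, so check the provider's API reference for the real values.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the endpoint and model name from the
# provider's documentation. Neither value comes from the talk.
BASE_URL = "https://example.invalid/v1"
MODEL = "example-llm"


def build_chat_request(prompt: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a chat completion.

    The path and body fields assume an OpenAI-style API shape.
    """
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Hello!", token="YOUR_TOKEN")
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req), then parse the JSON response.
```

Keeping request construction separate from sending makes the call easy to inspect and test before any token or network access is involved.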

#2 about 1 minute

Exploring the curated open-source foundation models available

The platform offers leading open-source models like Meta Llama 3 for English, Mistral for European languages, and Stable Diffusion XL for image generation.

#3 about 7 minutes

How to implement RAG with a single API call

Retrieval-Augmented Generation (RAG) is reduced to a single API request: the caller supplies a collection ID and a query, and the service handles the vector database lookup and the prompt augmentation.
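To make clear what is being abstracted, here is a toy, in-memory sketch of the two steps: look up the documents in a collection that best match the query, then prepend them to the prompt. It is not the platform's implementation. The `COLLECTIONS` store, the function names, and the prompt template are invented for illustration, and word-count cosine similarity stands in for the learned embeddings a real vector database would use.

```python
import math
import re
from collections import Counter

# Toy in-memory "collections" (hypothetical data). A hosted service would use
# a vector database and learned embeddings instead of word counts.
COLLECTIONS = {
    "docs-1": [
        "GPU nodes are billed per hour.",
        "Collections store documents for retrieval.",
        "Support is available on weekdays.",
    ],
}


def _vector(text: str) -> Counter:
    """Represent text as a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(collection_id: str, query: str, limit: int = 1) -> list[str]:
    """Step 1: return the `limit` documents most similar to the query."""
    q = _vector(query)
    docs = COLLECTIONS[collection_id]
    ranked = sorted(docs, key=lambda d: _cosine(q, _vector(d)), reverse=True)
    return ranked[:limit]


def augment_prompt(collection_id: str, query: str, limit: int = 1) -> str:
    """Step 2: prepend the retrieved documents to the user's question."""
    context = "\n".join(retrieve(collection_id, query, limit))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(augment_prompt("docs-1", "How are GPU nodes billed?"))
```

With a single-call RAG API, the client sends only the collection ID and the query; both functions above would run on the server before the model is invoked.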

#4 about 1 minute

Building end-to-end AI solutions in European data centers

Combine the AI Model Hub with IONOS Managed Kubernetes to build and deploy full AI applications within German data centers for data sovereignty.

#5 about 3 minutes

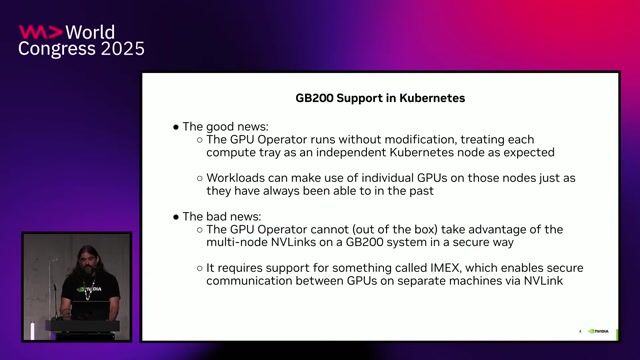

Enabling direct GPU access within managed Kubernetes

The NVIDIA GPU Operator will let workloads in IONOS Managed Kubernetes consume GPUs directly by automatically installing the necessary drivers and supporting components.

#6 about 3 minutes

Deploying custom inference workloads with NVIDIA NIMs

Use the GPU Operator to request GPUs in a pod spec and deploy NVIDIA Inference Microservices (NIMs) to run custom, containerized AI models on your own infrastructure.
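A minimal sketch of what requesting a GPU in a pod spec looks like, generated here as JSON from Python. The `nvidia.com/gpu` resource name is the extended resource exposed by NVIDIA's Kubernetes device plugin, which the GPU Operator deploys. The pod name and container image are hypothetical placeholders, and a real NIM deployment would typically also need registry credentials and model-specific settings that are not shown.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Return a minimal Pod manifest whose single container requests GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested under `limits`; the scheduler then
                    # places the pod on a node with enough free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image, for illustration only.
    manifest = gpu_pod_manifest("nim-demo", "registry.example/nim-model:latest")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed manifest can be saved to a file and applied as-is once the image is replaced with a real one.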

Matching moments

07:36 MIN

Highlighting impactful contributions and the rise of open models

Open Source: The Engine of Innovation in the Digital Age

15:54 MIN

Deploying enterprise AI applications with NVIDIA NIM

WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

20:32 MIN

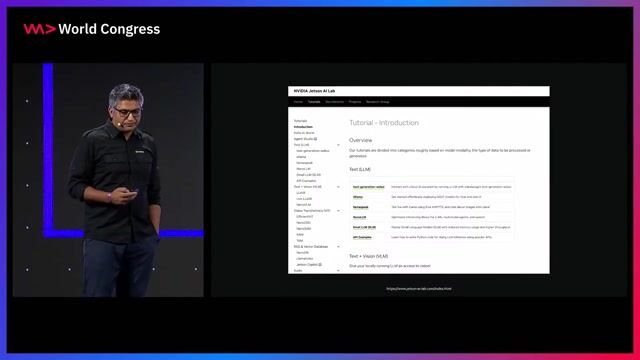

Accessing software, models, and training resources

Accelerating Python on GPUs

18:20 MIN

NVIDIA's platform for the end-to-end AI workflow

Trends, Challenges and Best Practices for AI at the Edge

22:14 MIN

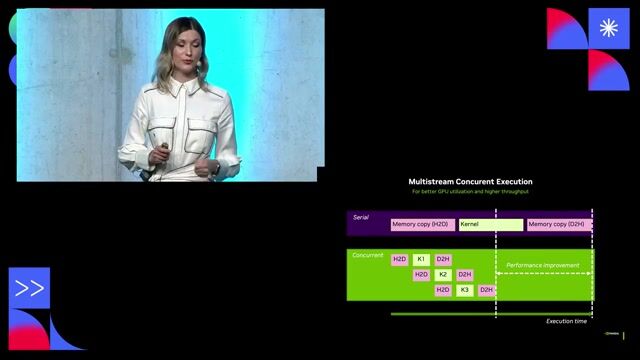

Deploying models with the high-performance ONNX Runtime

Making neural networks portable with ONNX

00:53 MIN

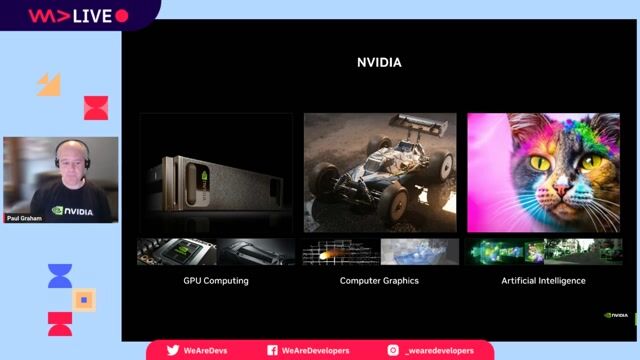

The rise of general-purpose GPU computing

Accelerating Python on GPUs

04:25 MIN

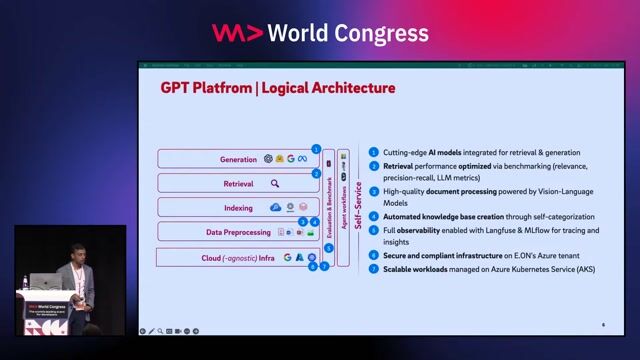

Architecture of a unified data and GenAI platform

Beyond GPT: Building Unified GenAI Platforms for the Enterprise of Tomorrow

10:48 MIN

Integrating GenAI components and execution strategy

Beyond GPT: Building Unified GenAI Platforms for the Enterprise of Tomorrow
