Nikolai Nikolaev

Let's Get Aggregated: Custom UDAFs in Spark

#1 about 2 minutes

Going beyond standard aggregations in Spark

Built-in functions such as sum and count cover common cases, but custom aggregations are needed when the business logic does not fit them or when the built-in approach performs poorly on large datasets.

#2 about 4 minutes

Understanding the Spark Aggregator interface

The Aggregator interface requires implementing zero, reduce, merge, and finish methods to support Spark's distributed execution model of pre-aggregation and shuffling.
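To make the four-method contract concrete, here is a minimal sketch in plain Python. It is not the Spark class: the `Aggregator` base class, the `Average` example, and the `run` helper are hypothetical names introduced only for illustration, and the list of lists stands in for data partitions. Spark's own Aggregator also asks for buffer and output encoders, which this model leaves out.

```python
from functools import reduce as fold
from typing import Generic, List, Tuple, TypeVar

IN = TypeVar("IN")    # type of one input value
BUF = TypeVar("BUF")  # type of the intermediate buffer
OUT = TypeVar("OUT")  # type of the final result


class Aggregator(Generic[IN, BUF, OUT]):
    """Plain-Python stand-in for the four-method contract (assumption, not Spark)."""

    def zero(self) -> BUF:
        raise NotImplementedError

    def reduce(self, buffer: BUF, value: IN) -> BUF:
        raise NotImplementedError

    def merge(self, left: BUF, right: BUF) -> BUF:
        raise NotImplementedError

    def finish(self, buffer: BUF) -> OUT:
        raise NotImplementedError


class Average(Aggregator[float, Tuple[float, int], float]):
    """Illustrative aggregator: the buffer is a (running sum, row count) pair."""

    def zero(self) -> Tuple[float, int]:
        return (0.0, 0)

    def reduce(self, buffer: Tuple[float, int], value: float) -> Tuple[float, int]:
        total, count = buffer
        return (total + value, count + 1)

    def merge(self, left: Tuple[float, int], right: Tuple[float, int]) -> Tuple[float, int]:
        return (left[0] + right[0], left[1] + right[1])

    def finish(self, buffer: Tuple[float, int]) -> float:
        total, count = buffer
        return total / count if count else 0.0


def run(agg: Aggregator, partitions: List[list]):
    # Pre-aggregation: each partition folds its own rows into one buffer.
    partials = [fold(agg.reduce, rows, agg.zero()) for rows in partitions]
    # Stand-in for the shuffle: only the partial buffers are combined.
    merged = fold(agg.merge, partials, agg.zero())
    return agg.finish(merged)


average = run(Average(), [[1.0, 2.0], [3.0], []])
```

The point of the split is that `reduce` only ever sees rows from one partition, while `merge` only ever sees buffers, so the raw rows never need to leave the partition they start on.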

#3 about 2 minutes

Analyzing a standard word count solution's inefficiency

A typical word count solution that combines built-in aggregate functions with window functions produces an inefficient execution plan with two separate data shuffles.

#4 about 2 minutes

Designing a UDAF for an efficient word count

The high-level design for a custom word count aggregator involves using a local frequency map as a buffer to pre-aggregate data before a single shuffle and merge step.
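The effect of a local frequency map can be sketched without Spark. In the plain-Python model below, each inner list plays the role of one partition, and the helper names (`local_counts`, `merge_counts`) and the sample sentences are made up for illustration. It compares how many raw words exist with how many map entries would have to cross the single shuffle.

```python
from collections import Counter
from typing import List


def local_counts(partition: List[str]) -> Counter:
    """Pre-aggregate one partition into a word -> count frequency map."""
    counts: Counter = Counter()
    for line in partition:
        counts.update(line.split())
    return counts


def merge_counts(partials: List[Counter]) -> Counter:
    """Combine the per-partition maps after the (simulated) shuffle."""
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)  # adds counts for words already present
    return total


# Hypothetical input: two partitions of text lines.
partitions = [
    ["spark spark udaf", "spark"],
    ["udaf merge", "merge merge spark"],
]

partials = [local_counts(p) for p in partitions]

# Raw words that would move if nothing were pre-aggregated.
raw_words = sum(len(line.split()) for p in partitions for line in p)
# Map entries that move when each partition ships only its frequency map.
shuffled_entries = sum(len(c) for c in partials)

totals = merge_counts(partials)
```

The saving grows with repetition: the more often a word recurs inside a partition, the smaller the map is relative to the raw input.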

#5 about 5 minutes

A step-by-step implementation of the UDAF methods

This walkthrough covers the Scala implementation of the Aggregator interface, including defining type parameters and coding the zero, reduce, merge, and finish logic.
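The talk's implementation is in Scala against Spark's Aggregator. As a rough, runnable analogue, the sketch below writes the same four methods in plain Python. The class name `WordCountAggregator`, the `aggregate` driver, and the choice to return the sorted frequency map from `finish` are assumptions made for illustration, not the speaker's code.

```python
from typing import Dict, List

WordCounts = Dict[str, int]


class WordCountAggregator:
    # Type parameters in this sketch: IN = str (one word),
    # BUF = WordCounts (frequency map), OUT = WordCounts.

    def zero(self) -> WordCounts:
        # A fresh, empty buffer for every partition.
        return {}

    def reduce(self, buffer: WordCounts, word: str) -> WordCounts:
        # Fold one input word into the partition-local buffer.
        buffer[word] = buffer.get(word, 0) + 1
        return buffer

    def merge(self, left: WordCounts, right: WordCounts) -> WordCounts:
        # Combine two partial buffers produced on different partitions.
        for word, count in right.items():
            left[word] = left.get(word, 0) + count
        return left

    def finish(self, buffer: WordCounts) -> WordCounts:
        # Assumption: the final output is the map itself, sorted by word.
        return dict(sorted(buffer.items()))


def aggregate(agg: WordCountAggregator, partitions: List[List[str]]) -> WordCounts:
    """Hypothetical driver that mimics reduce-per-partition, then merge, then finish."""
    partials = []
    for words in partitions:
        buffer = agg.zero()
        for word in words:
            buffer = agg.reduce(buffer, word)
        partials.append(buffer)

    merged = agg.zero()
    for partial in partials:
        merged = agg.merge(merged, partial)
    return agg.finish(merged)


result = aggregate(WordCountAggregator(), [["a", "b", "a"], ["b", "c"], []])
```

Mutating and returning the buffer in `reduce` and `merge` keeps allocations low, which is why `zero` must hand out a new map on every call rather than a shared one.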

#6 about 1 minute

Analyzing the performance gains of the custom UDAF

Applying the custom aggregator and inspecting its execution plan shows a single data shuffle instead of two, which accounts for the significant performance improvement.

#7 about 2 minutes

Leveraging complex data structures in UDAFs

UDAFs can handle complex, structured data types like case classes for both intermediate buffers and final outputs, enabling sophisticated, multi-part aggregations.
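A structured buffer and a structured output can be illustrated with Python dataclasses standing in for Scala case classes. Everything named here (`StatsBuffer`, `StatsSummary`, `StatsAggregator`, and the sample numbers) is hypothetical. The sketch computes a count, a mean, and a min-to-max spread in one pass, which is one possible reading of a multi-part aggregation.

```python
from dataclasses import dataclass
from functools import reduce as fold
from typing import Callable, Optional


@dataclass(frozen=True)
class StatsBuffer:
    """Intermediate state carried between rows and across partitions."""
    count: int = 0
    total: float = 0.0
    minimum: Optional[float] = None
    maximum: Optional[float] = None


@dataclass(frozen=True)
class StatsSummary:
    """Final structured output of the aggregation."""
    count: int
    mean: float
    spread: float


def _pick(fn: Callable, a: Optional[float], b: Optional[float]) -> Optional[float]:
    # Apply min/max while treating None as "no value seen yet".
    if a is None:
        return b
    if b is None:
        return a
    return fn(a, b)


class StatsAggregator:
    def zero(self) -> StatsBuffer:
        return StatsBuffer()

    def reduce(self, buf: StatsBuffer, value: float) -> StatsBuffer:
        return StatsBuffer(
            buf.count + 1,
            buf.total + value,
            _pick(min, buf.minimum, value),
            _pick(max, buf.maximum, value),
        )

    def merge(self, left: StatsBuffer, right: StatsBuffer) -> StatsBuffer:
        return StatsBuffer(
            left.count + right.count,
            left.total + right.total,
            _pick(min, left.minimum, right.minimum),
            _pick(max, left.maximum, right.maximum),
        )

    def finish(self, buf: StatsBuffer) -> StatsSummary:
        if buf.count == 0:
            return StatsSummary(0, 0.0, 0.0)
        return StatsSummary(buf.count, buf.total / buf.count, buf.maximum - buf.minimum)


# Hypothetical partitions, including an empty one.
partitions = [[4.0, 8.0], [6.0], []]
agg = StatsAggregator()
partials = [fold(agg.reduce, rows, agg.zero()) for rows in partitions]
summary = agg.finish(fold(agg.merge, partials, agg.zero()))
```

Keeping the buffer richer than the output is the useful part of the pattern: the sum and count are carried separately so that partial results stay mergeable, and the mean is only derived in `finish`.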

#8 about 3 minutes

Key use cases for custom aggregation functions

Custom aggregators are ideal for complex business logic, performance optimization, code reusability across teams, and integration with the Spark SQL API.

Matching moments

03:14 MIN

Analyzing data with metric and bucket aggregations

Search and aggregations made easy with OpenSearch and NodeJS

03:50 MIN

Understanding Spark's core data APIs and abstractions

PySpark - Combining Machine Learning & Big Data

02:56 MIN

An introduction to the Apache Spark analytics engine

PySpark - Combining Machine Learning & Big Data

02:56 MIN

Navigating the challenges of distributed aggregations

Distributed search under the hood

04:07 MIN

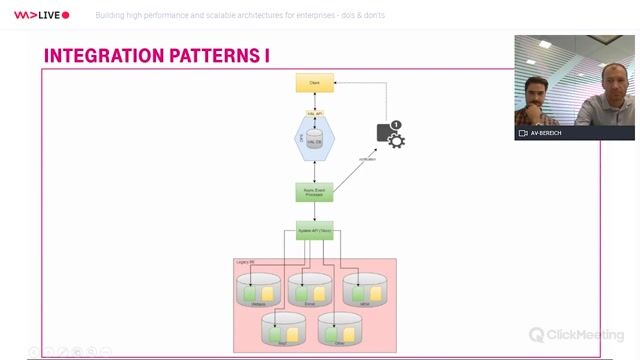

Implementing data aggregation and API management

Building high performance and scalable architectures for enterprises

06:35 MIN

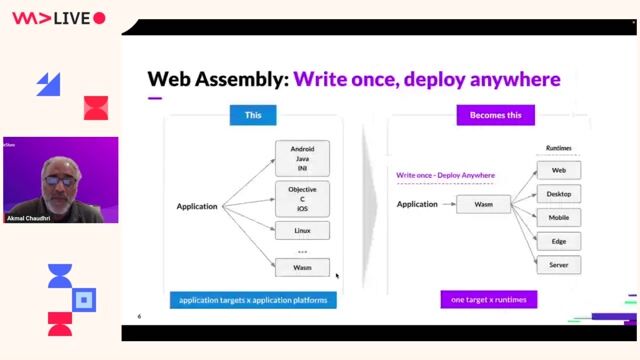

Why use WebAssembly for in-database analytics

Using WebAssembly for in-database Machine Learning

05:53 MIN

Q&A on parallel computing, data versioning, and security

DevOps for Machine Learning

03:04 MIN

Efficient aggregations with probabilistic data structures

Distributed search under the hood
