Christian Liebel

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

#1 about 1 minute

Generative AI use cases and cloud provider limitations

Cloud-based AI faces challenges like required internet connectivity, data privacy risks, and high costs, creating a need for local alternatives.

#2 about 13 minutes

Running large language models locally with WebLLM

WebLLM runs multi-gigabyte language models such as Llama 3 directly in the browser, so they work offline once loaded, at the cost of a long initial download and initialization.
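
As a rough illustration of what talking to an in-browser model looks like, the sketch below sends a single question to a chat engine and returns the answer. The `ChatEngine` interface is a simplified assumption modeled on the OpenAI-style `chat.completions.create` call that WebLLM exposes; `askLocalModel` and the system prompt are hypothetical names chosen for this example, not part of the talk.

```typescript
// Simplified, assumed shape of an OpenAI-style chat engine. The real WebLLM
// engine has a richer API; only the parts used here are modeled.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatEngine {
  chat: {
    completions: {
      create(request: { messages: ChatMessage[] }): Promise<{
        choices: { message: { content: string | null } }[];
      }>;
    };
  };
}

// Hypothetical helper: sends one user question to a locally running model
// and returns the text of the first choice (or "" if there is none).
async function askLocalModel(engine: ChatEngine, question: string): Promise<string> {
  const reply = await engine.chat.completions.create({
    messages: [
      { role: "system", content: "You are a concise assistant." },
      { role: "user", content: question },
    ],
  });
  const first = reply.choices[0];
  return first?.message.content ?? "";
}

// In a browser, the engine would come from the WebLLM package, roughly:
//   import { CreateMLCEngine } from "@mlc-ai/web-llm";
//   const engine = await CreateMLCEngine(modelId, {
//     initProgressCallback: (report) => console.log(report.text),
//   });
// where modelId is one of the prebuilt model IDs listed by the library.
```

The progress callback matters in practice: the first load downloads the model weights, so an app would typically show that progress to the user before the first prompt can be answered.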

#3about 2 minutes

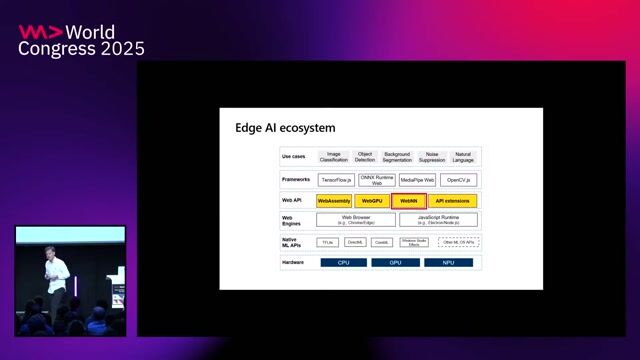

The technology behind in-browser AI execution

In-browser AI performance is accelerated by combining WebAssembly for efficient computation and the new WebGPU API for direct access to the system's GPU.

#4about 4 minutes

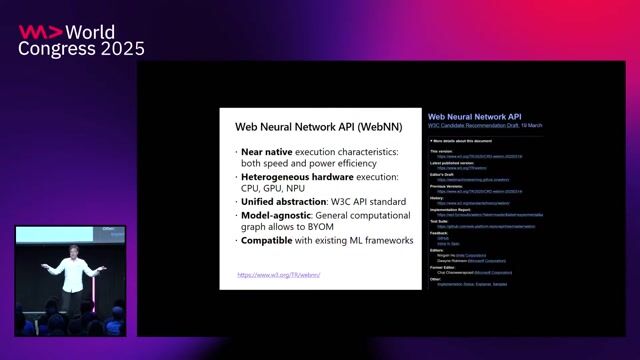

Boosting performance with the upcoming WebNN API

The Web Neural Network (WebNN) API provides access to dedicated Neural Processing Units (NPUs) for even faster, more efficient on-device model inference.
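
A web app that wants to use these accelerators typically has to check what the current browser offers. The sketch below shows one possible feature-detection order, assuming the entry points `navigator.ml` (WebNN), `navigator.gpu` (WebGPU), and the global `WebAssembly` object. The `pickBackend` function, its types, and the preference order are hypothetical choices for this example.

```typescript
type Backend = "webnn" | "webgpu" | "wasm" | "none";

// Structural views of the objects we probe, so the function can be called
// with the real browser globals or with plain objects in a test.
interface NavigatorLike {
  ml?: unknown;  // WebNN entry point, where available
  gpu?: unknown; // WebGPU entry point, where available
}

interface GlobalLike {
  WebAssembly?: unknown;
}

// Hypothetical helper: returns the most specialized backend that appears
// to be exposed. The order (WebNN, then WebGPU, then WebAssembly) is an
// assumption of this sketch, not a rule from any specification.
function pickBackend(nav: NavigatorLike, global: GlobalLike): Backend {
  if (nav.ml != null) return "webnn";
  if (nav.gpu != null) return "webgpu";
  if (global.WebAssembly != null) return "wasm";
  return "none";
}

// In a browser this could be called as:
//   pickBackend(navigator as NavigatorLike, globalThis as GlobalLike);
```

The presence of an entry point only shows that the API is exposed. For WebGPU, for instance, `navigator.gpu.requestAdapter()` can still resolve to `null` when no suitable GPU is available, so a real app would confirm the backend before committing to it.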

#5 about 6 minutes

Solving model duplication with the new Prompt API

The experimental Prompt API avoids each website downloading its own copy of a model by giving sites access to a single, shared OS-level model such as Gemini Nano.

#6 about 3 minutes

Using the Prompt API for on-device data extraction

A demonstration shows how the Prompt API can use a local model to accurately extract structured data from unstructured text, highlighting its practical application.
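
The extraction pattern can be sketched as follows: build a prompt that asks for JSON, send it to a session, and parse the reply defensively. This is not the code from the demonstration. `PromptSession` is an assumed minimal shape (one `prompt` method returning text), and `Contact`, `buildExtractionPrompt`, `parseContact`, and `extractContact` are hypothetical names. The Prompt API is experimental and its entry point has changed between browser versions, so how a session is obtained is deliberately left out.

```typescript
// Assumed minimal shape of a prompt session: text in, text out.
interface PromptSession {
  prompt(input: string): Promise<string>;
}

// Hypothetical target structure for this example.
interface Contact {
  name: string | null;
  email: string | null;
}

// Asks the model to answer with a JSON object only.
function buildExtractionPrompt(text: string): string {
  return [
    "Extract the person's name and email address from the text below.",
    'Answer with a JSON object of the form {"name": string, "email": string}.',
    "Text:",
    text,
  ].join("\n");
}

// Models often wrap JSON in prose or code fences, so take the span from
// the first "{" to the last "}" and validate each field after parsing.
function parseContact(raw: string): Contact | null {
  const start = raw.indexOf("{");
  const end = raw.lastIndexOf("}");
  if (start === -1 || end <= start) return null;
  try {
    const data: unknown = JSON.parse(raw.slice(start, end + 1));
    if (typeof data !== "object" || data === null) return null;
    const record = data as Record<string, unknown>;
    const name = record["name"];
    const email = record["email"];
    return {
      name: typeof name === "string" ? name : null,
      email: typeof email === "string" ? email : null,
    };
  } catch {
    return null; // the reply did not contain valid JSON
  }
}

async function extractContact(session: PromptSession, text: string): Promise<Contact | null> {
  const reply = await session.prompt(buildExtractionPrompt(text));
  return parseContact(reply);
}
```

Validating the parsed fields instead of trusting the reply keeps the app robust when a small on-device model returns malformed or partial output.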

#7 about 2 minutes

Generating images in the browser with WebSD

WebSD brings text-to-image generation to the browser by running Stable Diffusion models locally using WebGPU, enabling creative AI tasks without cloud dependency.

#8 about 1 minute

Weighing the pros and cons of local AI models

Local AI models offer superior privacy, offline availability, and low cost, but come with trade-offs like lower quality, high system requirements, and slower performance.

#9 about 1 minute

The future of on-device AI in web development

While cloud-based models are currently superior, the trend towards more compact open-source models and OS-integrated AI suggests a growing role for local AI in specialized web applications.

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

ROSEN Technology and Research Center GmbH

Osnabrück, Germany

Senior

TypeScript

React

+3

Matching moments

01:41 MIN

Two primary approaches for browser-based AI

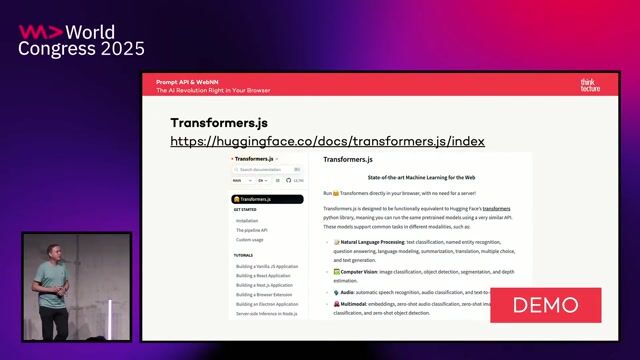

Prompt API & WebNN: The AI Revolution Right in Your Browser

02:08 MIN

The future of on-device AI hardware and APIs

From ML to LLM: On-device AI in the Browser

11:35 MIN

Implementing on-device AI with the Chrome AI API

WeAreDevelopers LIVE – AI vs the Web & AI in Browsers

02:51 MIN

Introducing the Web Neural Network (WebNN) standard

Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

03:24 MIN

Running on-device AI in the browser with Gemini Nano

Exploring Google Gemini and Generative AI

01:29 MIN

Introduction to generative AI in the browser

Generate AI in the Browser with Chrome AI - Raymond Camden

04:04 MIN

Accelerating performance with the WebNN API

Prompt API & WebNN: The AI Revolution Right in Your Browser

04:03 MIN

Leveraging hardware like the CPU, GPU, and NPU

Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

Featured Partners

Related Videos

30:36

30:36Prompt API & WebNN: The AI Revolution Right in Your Browser

Christian Liebel

30:13

30:13Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

Maxim Salnikov

27:23

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

33:51

33:51Exploring the Future of Web AI with Google

Thomas Steiner

Generate AI in the Browser with Chrome AI - Raymond Camden

Raymond Camden

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

22:07

22:07WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

Ankit Patel

25:47

25:47Performant Architecture for a Fast Gen AI User Experience

Nathaniel Okenwa

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Barmer

Remote

API

MySQL

NoSQL

React

+9

Advanced Group

München, Germany

Remote

API

C++

Python

OpenGL

+6

Apple

Zürich, Switzerland

Python

PyTorch

Machine Learning

Apple

Zürich, Switzerland

Python

PyTorch

Machine Learning

Odido

The Hague, Netherlands

Intermediate

API

Azure

Flask

Python

Docker

+3